Guide to the best sex robots on the market. The top sexbot companies and AI sex doll manufacturers building the ideal sex partner.

Embodied AI, superintelligence and the master algorithm

In the next year and a half, we’re going to see increasing adoption of technologies, which will trigger a broader industry shift, much as Tesla triggered the transition to EVs.

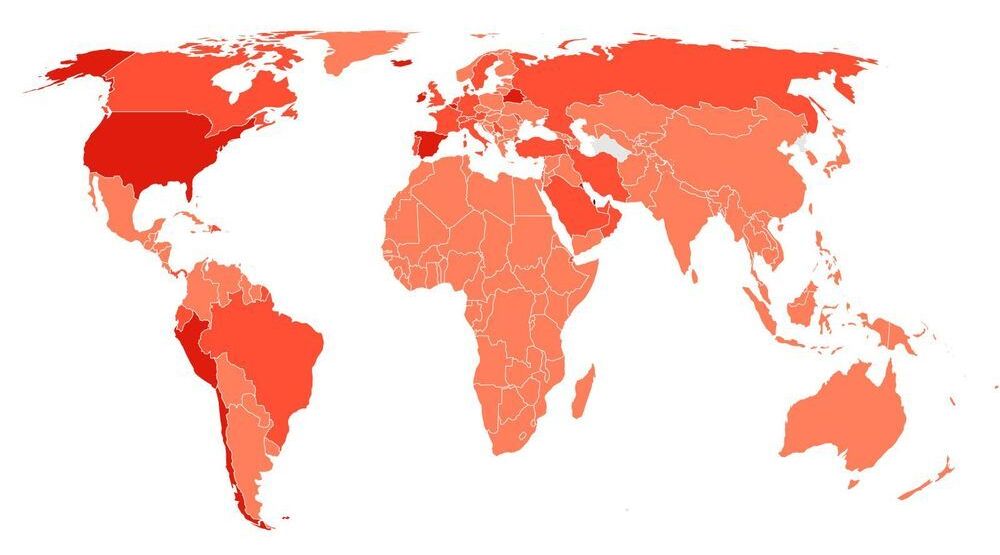

Cats’ immune system can deal with SARS-CoV-2, shows study

Circa 2020

On 8 May 2020, the Institute of Agrifood Research and Technology (IRTA) reported the first case of a cat infected with SARS-CoV-2 in Spain. It was a 4-year-old cat called Negrito, who lived with a family affected by COVID-19, including one death.

Around the same time, the animal developed severe respiratory difficulties and was taken to a veterinary hospital in Badalona (Barcelona), where it was diagnosed with hypertrophic cardiomyopathy. Because the condition was terminal, the hospital decided to euthanize the cat humanely.

The necropsy, performed at the High Biosafety Level Laboratories of the Animal Health Research Center (CReSA) at IRTA, confirmed that Negrito suffered from feline hypertrophic cardiomyopathy and had no other lesions or symptoms compatible with a coronavirus infection.

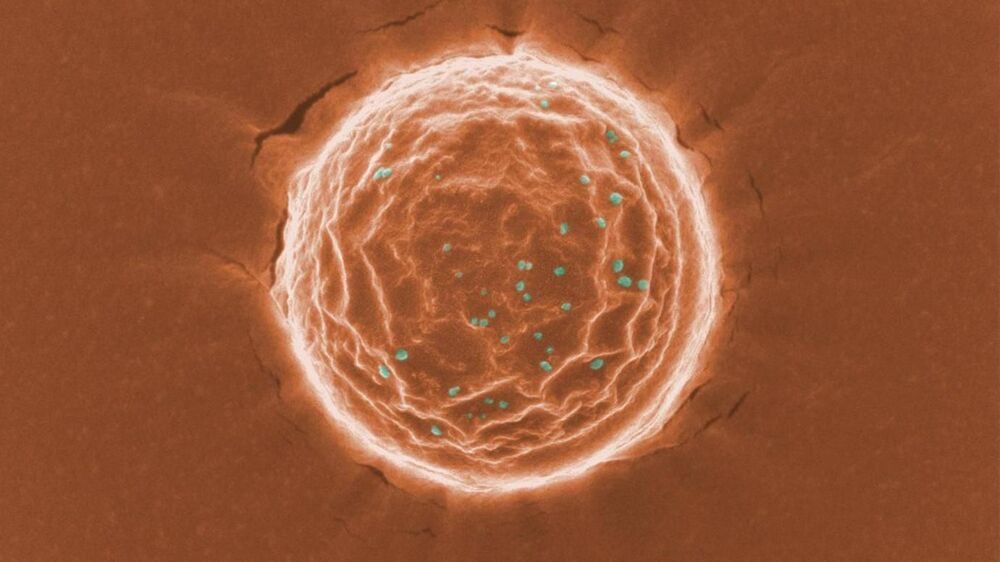

New “Nanotraps” Could Capture and Destroy Coronavirus Within the Body

At the University of Chicago, scientists have developed a promising new treatment for COVID-19 in the form of nanoparticles that can trap SARS-CoV-2 inside the body and use the body’s own immune system to destroy it.

The “nanotraps” lure the virus by mimicking the cells it targets for infection. Once the virus is trapped by the nanotraps, it is sequestered from other cells and targeted for destruction by the immune system.

Theoretically, these nanotraps could be used on different variants of the virus, offering a promising new way to suppress it in the future. The therapy is still in the early stages of testing, but the researchers believe that it could be administered through a nasal spray as a treatment for COVID-19.

Why Carbon Credits Are The Next Opportunity For Farmers

Meat lovers will be upset about what I am going to write. I consider myself a meat lover too, but I have to face the facts. The livestock industry consumes a lot of crops such as corn, barley, hay and soybeans, which cover most farmland. And these crops cannot be grown in vertical or hydroponic farms. Regenerative agriculture can reduce CO2 and offers a way to improve soil quality and keep the soil from degrading. We need to let most farmland recover so we can avoid desertification. Plant-based food also uses soybeans and other crops, but I think it will have less impact on farmland, since livestock will account for a smaller share. Humans were hunter-gatherers; then we started growing wheat to feed our growing population, adapting to the situation, and now we are facing new challenges that could change our diet in the next 40 years.

Regenerative farming refers to practices focused on replenishing the soil’s nutrients and includes things like no-till cultivation, rotational cattle grazing, using less synthetic fertilizer and planting cover crops. In addition to making soil and crops healthier, the practices help to sequester CO2.

Lately, the movement has gained the support of major corporations like General Mills and PepsiCo, as well as the Biden administration. Now, a number of carbon markets such as Nori and Indigo Ag are springing up to encourage farmers to participate, but challenges remain.
