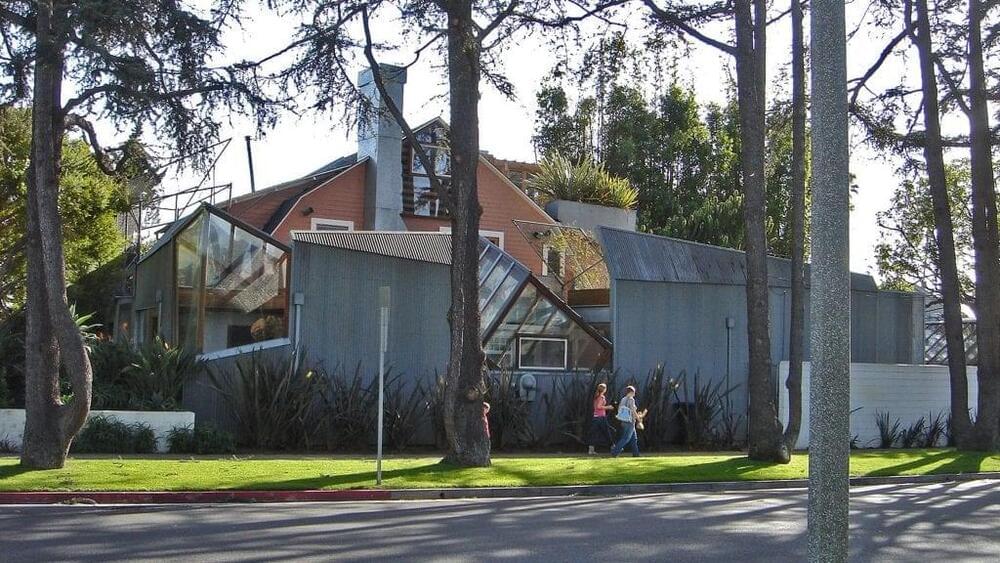

Next up in our series exploring deconstructivist architecture, we look at Gehry House, architect Frank Gehry’s radical extension to his home in California.

Imagining our everyday life without lasers is difficult. We use lasers in printers, CD players, pointers, measuring devices, etc. What makes lasers so special is that they use coherent waves of light: all the light inside a laser vibrates completely in sync.

Meanwhile, quantum mechanics tells us that particles like atoms should also be considered waves. As a result, we can build ‘atom lasers’ containing coherent waves of matter. But can we make these matter waves last so they may be used in applications? In research that was published in Nature, a team of Amsterdam physicists shows that the answer to this question is affirmative.

Google’s AI division is creating digital versions of – normally hand-drawn – maps of electricity cables, in a move that could benefit the global utility industry.

The firm’s DeepMind engineers have partnered with UK Power Networks, which delivers electricity across London, the East and South East, to create digital versions of maps covering more than 180,000km of electricity cables.

The work involves new image recognition software scanning thousands of maps – some of which date back decades – and automatically remastering them into a digital format for future use.

Papers referenced in the video:

Glycine supplementation extends lifespan of male and female mice.

https://pubmed.ncbi.nlm.nih.gov/30916479/

Ergothioneine exhibits longevity-extension effect in Drosophila melanogaster via regulation of cholinergic neurotransmission, tyrosine metabolism, and fatty acid oxidation.

https://pubmed.ncbi.nlm.nih.gov/34877949/

17-a-estradiol late in life extends lifespan in aging UM-HET3 male mice; nicotinamide riboside and three other drugs do not affect lifespan in either sex.

https://pubmed.ncbi.nlm.nih.gov/33788371/

Metagenomic and metabolomic remodeling in nonagenarians and centenarians and its association with genetic and socioeconomic factors.

In early June, the main body of NASA’s upcoming Europa Clipper spacecraft completed construction and was shipped to NASA’s Jet Propulsion Laboratory (JPL) in Pasadena, California soon after. The arrival of Europa Clipper’s main body marks a major milestone in the construction of the spacecraft and shows that the spacecraft and its teams are on track for a launch in 2024.

“It’s an exciting time for the whole project team and a huge milestone. This delivery brings us one step closer to launch and the Europa Clipper science investigation,” said Europa Clipper project manager Jordan Evans of JPL.

While the construction of the spacecraft’s main body is complete, that does not mean construction of the spacecraft as a whole is finished. Numerous mission-critical components are yet to be assembled and installed onto the spacecraft.

Serhii Pospielov is Lead Software Engineer at Exadel. Serhii has more than a decade of developer and engineering experience. Prior to joining Exadel he was a game developer at Mayplay Games. He holds a Master’s Degree in Computer Software Engineering from Donetsk National Technical University.

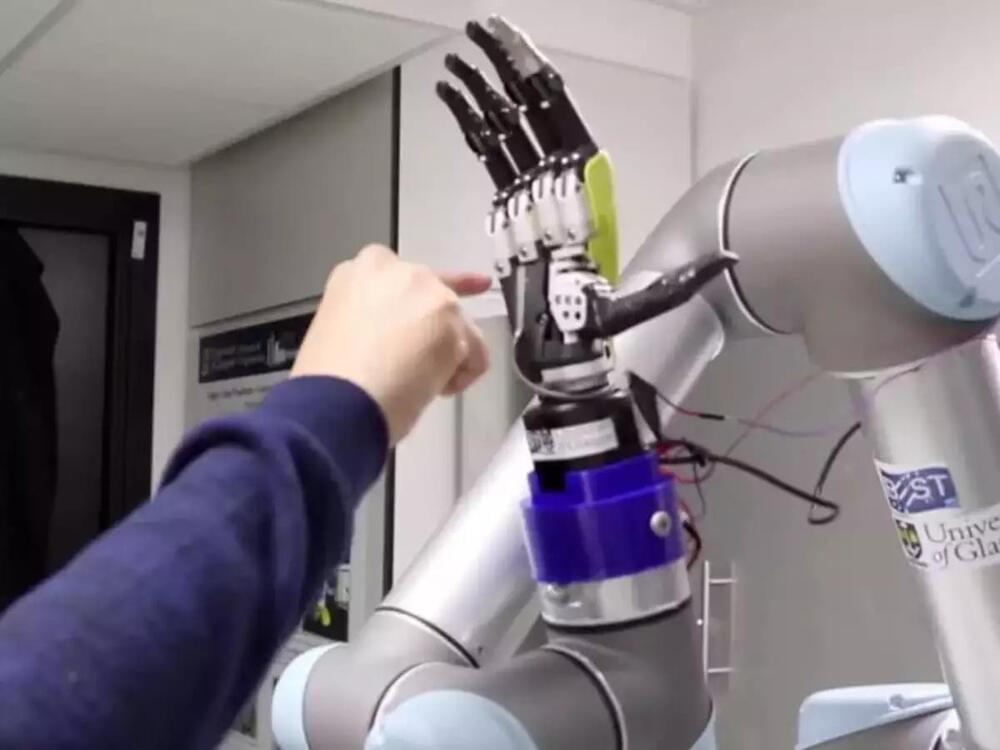

These new, more diverse approaches to training AI let it adapt to different play-styles, to make it a better teammate.

DeepMind researchers have been using the chaotic cooking game Overcooked to teach AI to better collaborate with humans. MIT researchers have followed suit, giving their AI the ability to distinguish between a diverse range of play-styles. What’s amazing is that it’s working: the humans involved actually preferred playing with the AI.

Have you ever been dropped into a game with strangers only to find their play-style totally upends your own? There’s a reason we’re better at gaming with people we know—they get us. As a team, you make a point of complementing each other’s play-style so you can cover all bases, and win.

AI is advancing science by making remarkable breakthroughs in a number of fields, unlocking new approaches to science, and accelerating the pace of science and innovation.

In 2020, Google’s AI team DeepMind announced that its algorithm, AlphaFold, had solved the protein-folding problem. At first, this stunning breakthrough was met with excitement from most, with scientists always ready to test a new tool, and amusement by some. After all, wasn’t this the same company whose algorithm AlphaGo had defeated the world champion in the Chinese strategy game Go, just a few years before? Mastering a game more complex than chess, difficult as that is, felt trivial compared to the protein-folding problem. But AlphaFold proved its scientific mettle by sweeping an annual competition in which teams of biologists guess the structure of proteins based only on their genetic code. The algorithm far outpaced its human rivals, posting scores that predicted the final shape within an angstrom, the width of a single atom. Soon after, AlphaFold passed its first real-world test by correctly predicting the shape of the SARS-CoV-2 ‘spike’ protein, the virus’ conspicuous membrane receptor that is targeted by vaccines.

The success of AlphaFold soon became impossible to ignore, and scientists began trying out the algorithm in their labs. In 2021, Science magazine named AI-powered protein structure prediction, led by AlphaFold, its “Breakthrough of the Year.” H. Holden Thorp, Editor-in-Chief of the journal Science, wrote in an editorial, “The breakthrough in protein-folding is one of the greatest ever in terms of both the scientific achievement and the enabling of future research.” Today, AlphaFold’s predictions are so accurate that the protein-folding problem is considered solved after more than 70 years of searching. And while the protein-folding problem may be the highest profile achievement of AI in science to date, artificial intelligence is quietly making discoveries in a number of scientific fields.

By turbocharging the discovery process and providing scientists with new investigative tools, AI is also transforming how science is done. The technology upgrades research mainstays like microscopes and genome sequencers, adding new technical capacities to the instruments and making them more powerful. AI-powered drug design and gravitational wave detectors offer scientists new tools to probe and control the natural world. Off the lab bench, AI can also deploy advanced simulation capabilities and reasoning systems to develop real-world models and test hypotheses using them. With impacts across every stage of the scientific method, AI is ushering in a scientific revolution through groundbreaking discoveries, novel techniques and augmented tools, and automated methods that advance the speed and accuracy of the scientific process.