Resistive switching random-access memories (RRAMs) integrate information storage and processing into the same device, enabling faster and more energy-efficient computing. However, RRAMs are challenging to fabricate and suffer from inconsistent on-off switching. Now Zheng Jie Tan, Vrindaa Somjit, and collaborators at the Massachusetts Institute of Technology have discovered that adding dopants to RRAMs dramatically improves both device performance and fabrication yield [1]. The researchers say their results provide an additional “knob” for optimizing RRAMs, helping position them as one of the leading technologies for so-called in-memory computation.

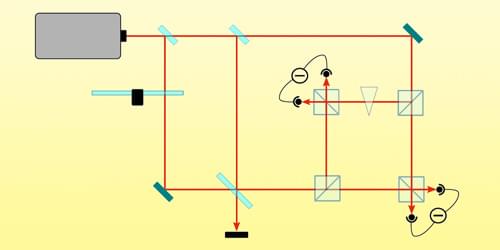

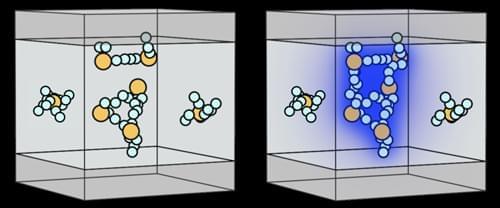

An RRAM comprises an insulating material sandwiched between two metallic layers. The bits are defined by the amount of current that passes through the device, via conduction paths in the insulator, when a voltage is applied. If the applied voltage is high enough, it can form or destroy conduction paths, switching the device between its on and off states.
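The switching behavior described above can be illustrated with a toy model. The sketch below is not the researchers' model: the class name `ToyRRAMCell`, the threshold voltages, the resistance values, and the choice of opposite voltage polarities for forming and destroying a path are all assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ToyRRAMCell:
    """Idealized RRAM cell. Every number here is an illustrative assumption."""

    set_voltage: float = 1.5     # volts; at or above this, a conduction path forms
    reset_voltage: float = -1.5  # volts; at or below this, the path is destroyed
    r_on: float = 1e3            # ohms; resistance with a conduction path
    r_off: float = 1e6           # ohms; resistance without a conduction path
    path_formed: bool = False    # assumed initial state: no path

    def apply(self, voltage: float) -> float:
        """Apply a voltage, update the state if needed, return the current in amperes."""
        if voltage >= self.set_voltage:
            self.path_formed = True
        elif voltage <= self.reset_voltage:
            self.path_formed = False
        resistance = self.r_on if self.path_formed else self.r_off
        return voltage / resistance

    def read_bit(self, read_voltage: float = 0.2, threshold_current: float = 1e-5) -> int:
        """Read with a small voltage that cannot switch the cell; high current means 1."""
        return 1 if self.apply(read_voltage) > threshold_current else 0


cell = ToyRRAMCell()
before = cell.read_bit()       # no path yet, so little current flows
cell.apply(2.0)                # high voltage forms a conduction path
after_set = cell.read_bit()
cell.apply(-2.0)               # high voltage of opposite sign destroys it
after_reset = cell.read_bit()
```

In this idealization the cell switches at exactly the same voltages every time. Real devices vary from cycle to cycle, which is the inconsistency the doping approach aims to reduce.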

While fabricating their device, the researchers added electronegative dopants, such as gold atoms, to the insulating material. The electron redistribution induced by the dopants facilitated the formation of conduction paths, which were more stable and switched on and off more consistently than those in undoped devices. Moreover, doped RRAMs were consistently fabricated with conduction paths already established before the device was used. Undoped RRAMs are often fabricated without such paths, and the postfabrication process required to create them, known as “electroforming,” involves applying a very high voltage that can irreparably damage the device.