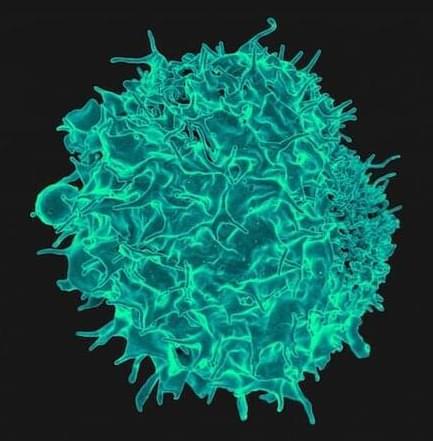

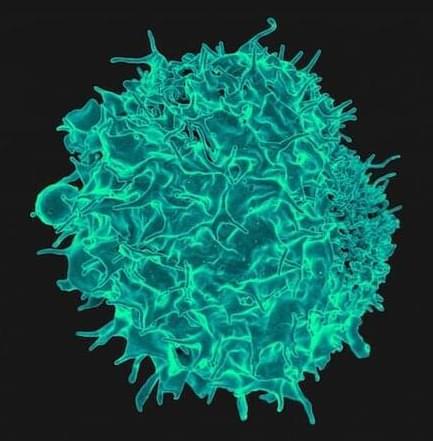

A new therapy combines two big advances, CRISPR and CAR-T, to create personalized immune cells that seek and destroy specific cancers.

Circa 2012

Researchers have identified seven genetic markers linked with a woman’s breast size, according to a new study.

While it was known that breast size is in part heritable, the study is the first to find specific genetic factors that are associated with differences in breast size, the researchers said.

Circa 2020. Basically, this means a magnetic transistor could have not only quantum properties but also nearly infinite processing speeds, which means we could eventually have nanomachines with near-infinite speeds.

Abstract: The discovery of spin superfluidity in antiferromagnetic superfluid 3He is a remarkable discovery associated with the name of Andrey Stanislavovich Borovik-Romanov. After 30 years, quantum effects in a magnon gas (such as the magnon Bose–Einstein condensate and spin superfluidity) have become quite topical. We consider analogies between spin superfluidity and superconductivity. The results of quantum calculations using a 53-bit programmable superconducting processor have been published quite recently[1]. These results demonstrate the advantage of using the quantum algorithm of calculations with this processor over the classical algorithm for some types of calculations. We consider the possibility of constructing an analogous (in many respects) processor based on spin superfluidity.

Circa 2016 😗

Last month, Google’s AI division, DeepMind, announced that its computer had defeated Europe’s Go champion in five straight games. Go, a strategy game played on a 19×19 grid, is exponentially more difficult for a computer to master than chess—there are 20 possible moves to choose from at the start of a chess game compared to 361 moves in Go—and the announcement was lauded as another landmark moment in the evolution of artificial intelligence.

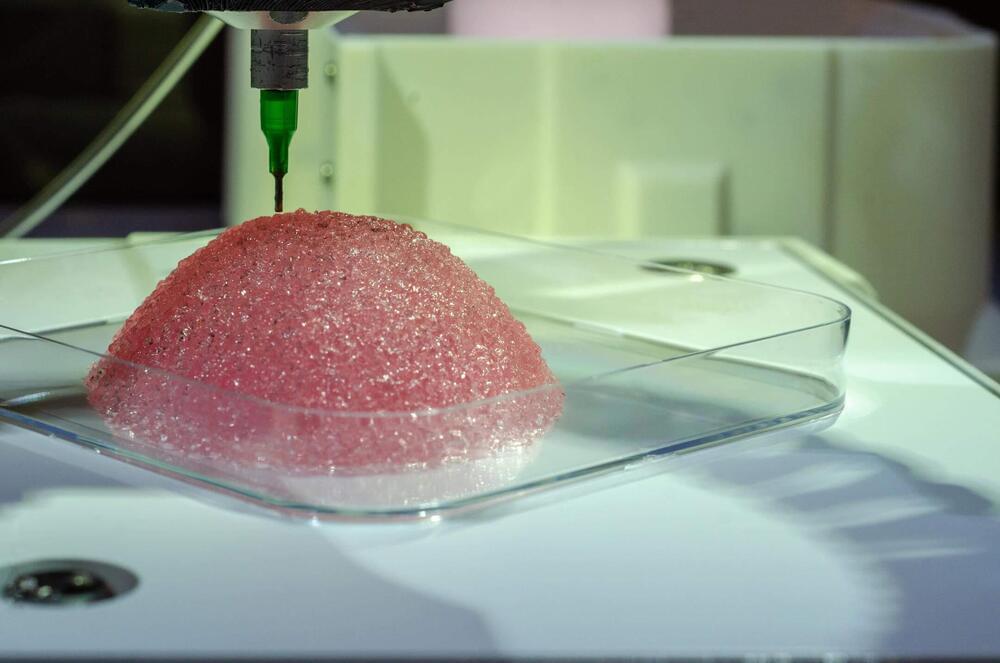

Or, at least, living neurons. His startup, Koniku, which just completed a stint at the biotech accelerator IndieBio, touts itself as “the first and only company on the planet building chips with biological neurons.” Rather than simply mimic brain function with chips, Agabi hopes to flip the script and borrow the actual material of human brains to create the chips.

Watch the Full Video at https://www.jove.com/v/55334/in-vivo-tracking-edema-developm…ideos-2022.

In Vivo Tracking of Edema Development and Microvascular Pathology in a Model of Experimental Cerebral Malaria Using Magnetic Resonance Imaging — a 2 minute Preview of the Experimental Protocol.

Angelika Hoffmann, Xavier Helluy, Manuel Fischer, Ann-Kristin Mueller, Sabine Heiland, Mirko Pham, Martin Bendszus, Johannes Pfeil.

Heidelberg University Hospital, Department of Neuroradiology; Heidelberg University Hospital, Division of Experimental Radiology, Department of Neuroradiology; Ruhr-University Bochum, NeuroImaging Centre Research, Department of Neuroscience; Heidelberg University Hospital, Centre for Infectious Diseases, Parasitology Unit; German Centre for Infection Research (DZIF); University of Würzburg, Department of Neuroradiology; Heidelberg University Hospital, Center for Childhood and Adolescent Medicine, General Pediatrics.

We describe a mouse model of experimental cerebral malaria and show how inflammatory and microvascular pathology can be tracked in vivo using magnetic resonance imaging.

Visit https://www.jove.com?utm_source=youtube&utm_medium=social_gl…ideos-2022 to explore our entire library of 14,000+ videos of laboratory methods and science concepts.

JoVE is the world-leading producer and provider of science videos with the mission to improve scientific research and education. Millions of scientists, educators, and students at 1500+ institutions worldwide, including schools like Harvard, MIT, and Stanford, benefit from using JoVE’s extensive library of 14,000+ videos in their research, education, and teaching.

Amazon unveils its newest warehouse robotic arm, which uses artificial intelligence and raises the frightening possibility that Amazon warehouse workers could be easily replaced. John Iadarola and Jessica Burbank break it down on The Damage Report.

Amazon’s new robot should strike fear into its hundreds of thousands of warehouse workers — https://www.businessinsider.com/amazon-released-warehouse-ro…022-11

“What do you call a robotic arm that relies on computer vision, artificial intelligence, and suction cups to pick up items?

In Amazon’s world, it’s called a “Sparrow.”

The tech giant unveiled a robot on Thursday that’s capable of identifying individual items that vary in shape, size, and texture. Sparrow can also pick these up via the suction cups attached to its surface and place them into separate plastic crates.

Sparrow is the first robot Amazon has revealed of its kind and it has the potential to wipe out significant numbers of the company’s warehouse workers.