Ablation of primary cilia in the striatum did not affect object recognition memory, as evidenced by the mice spending more time with the novel object than with the old object (Fig. 4e, f). Similarly, in the novel location recognition assay, IFT88-KO mice spent more time with the novel location than with the old location (Fig. 4g, h), indicating normal spatial memory. In addition, contextual memory, measured using the fear conditioning test, was intact in the IFT88-KO mice, as revealed by a freezing time on the test day similar to that of the control mice (Fig. 4i).

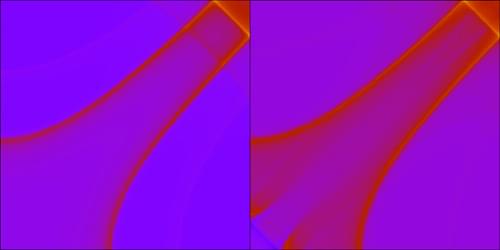

The expression of the immediate-early gene cFos was used as a molecular marker of neural activity. We examined cFos immunoreactivity (number of cFos-positive cells) in structures that are part of striatal circuits and in those known to project to or receive projections from the striatum (Fig. 5a, b). First, the rostral dorsal striatum, but not the caudal striatum, of IFT88-KO mice exhibited a significant decrease in cFos immunoreactivity (Fig. 5c, d). Within the basal ganglia circuit, there was a trend toward reduced cFos immunoreactivity in the output regions (SNr and GPm), but not in the indirect pathway nuclei (lateral globus pallidus and subthalamic nucleus) (Fig. 5c, d). The main input regions to the striatum include the dopaminergic neurons of the substantia nigra pars compacta (SNc) and the glutamatergic neurons of the cortices.