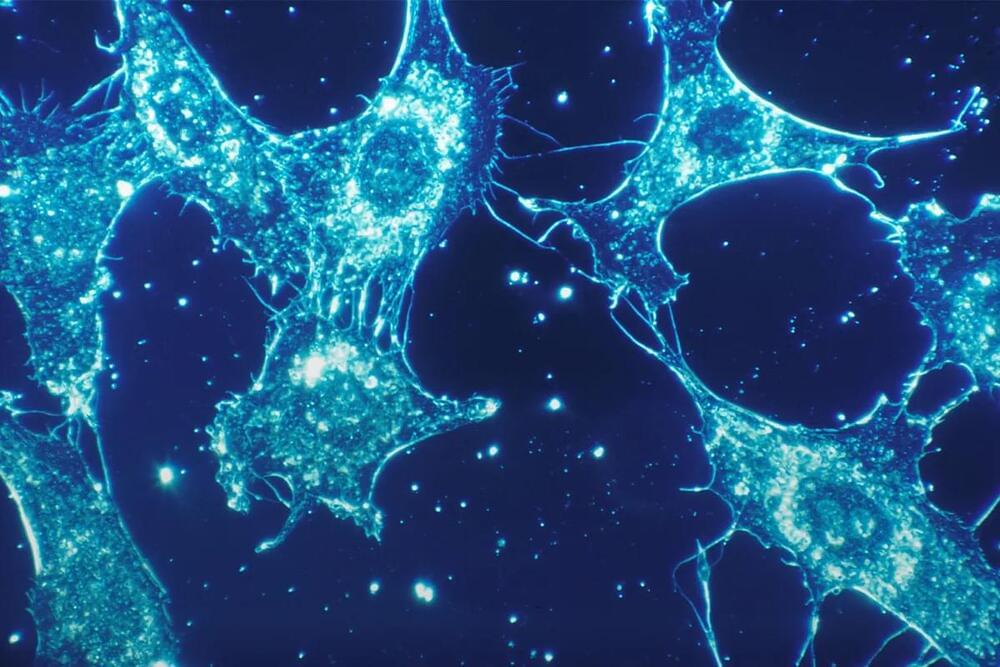

Researchers at UC San Francisco (UCSF) have engineered molecules that act like “cellular glue,” allowing them to direct precisely how cells bond with each other. This discovery represents a major step toward building tissues and organs, a long-sought goal of regenerative medicine [1].

Longevity.Technology: Adhesive molecules are found naturally throughout the body, holding its tens of trillions of cells together in highly organised patterns. They form structures, create neuronal circuits and guide immune cells to their targets. Adhesion also facilitates communication between cells to keep the body functioning as a self-regulating whole.

Now a new study, published in Nature, details how the researchers engineered cells containing customised adhesion molecules that bound with specific partner cells in predictable ways to form complex multicellular ensembles.