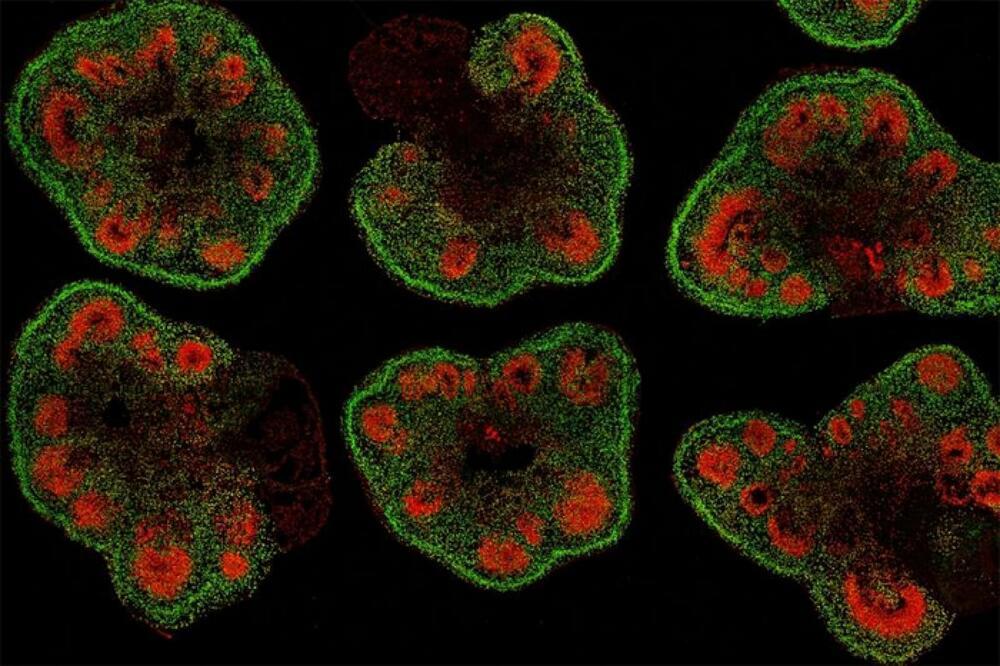

Combustion can now be imaged at industrial scale.

Researchers have used a new near-infrared imaging technique to capture the first cross-sectional images of carbon dioxide in a jet engine's exhaust plume.

The research was published in issue 28 of the journal *Applied Optics*.

Image credit: Gordon Humphries/University of Strathclyde.

According to the accompanying statement, this new imaging technique for turbine combustion could help speed up the development of more environmentally friendly engines and aviation fuels.