Thermodynamic phases governed by the strong nuclear force have been linked together using multiple theoretical tools.

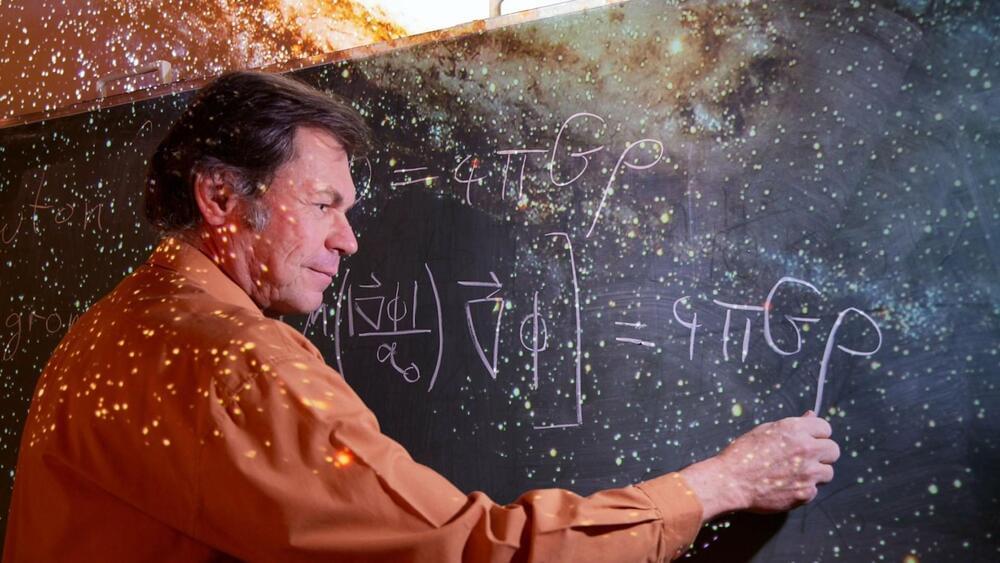

Quantum chromodynamics (QCD) is the theory of the strong nuclear force. At a fundamental level, it describes the dynamics of quarks and gluons. Like more familiar systems, such as water, a many-body system of quarks and gluons can exist in very different thermodynamic phases depending on the external conditions. Researchers have long sought to map the different corners of the corresponding phase diagram. New experimental probes of QCD, first and foremost the detection of gravitational waves from neutron-star mergers, allow for a more comprehensive view of this phase structure than was previously possible. Now Tuna Demircik at the Asia Pacific Center for Theoretical Physics, South Korea, and colleagues have combined models originally developed for very different contexts to advance a global understanding of the phases of QCD [1].

Phase transitions governed by the strong force require extreme conditions such as high temperatures and high baryon densities (baryons are three-quark particles such as protons and neutrons). The region of the QCD phase diagram corresponding to high temperatures and relatively low baryon densities can be probed by colliding heavy ions. By contrast, the region associated with high baryon densities and relatively low temperatures can be studied by observing isolated neutron stars. For a long time, researchers lacked experimental data for the part of the phase diagram between these two regions, not least because it is very difficult to create matter under neutron-star conditions in the laboratory. This difficulty persists, although collider facilities now under construction are designed to produce matter at higher baryon densities than is currently possible.