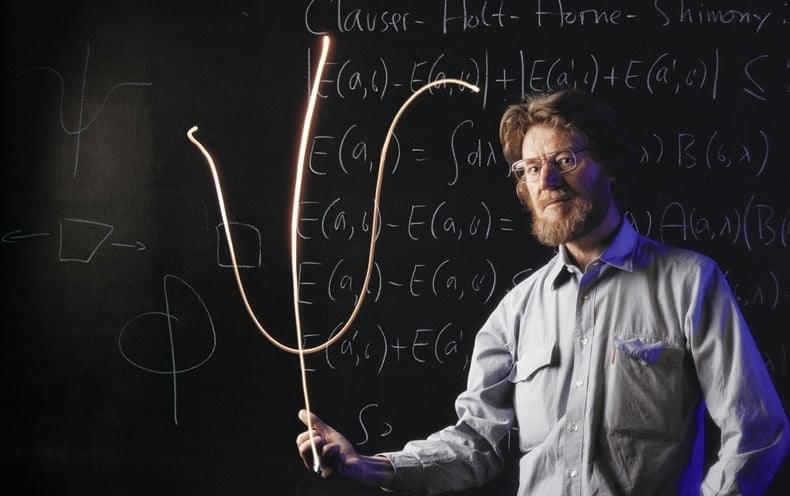

Elegant experiments with entangled light have laid bare a profound mystery at the heart of reality.

The devastating loss of a pair of newborns has provided critical insights into a rare set of blood types spotted for the first time in humans 40 years ago.

By unravelling the molecular identity of the relatively new blood type known as the Er system, a new study could hopefully prevent such tragedies in the future.

“This work demonstrates that even after all the research conducted to date, the simple red blood cell can still surprise us,” says University of Bristol cell biologist Ash Toye.

In July, particle physicists in the US completed the Snowmass process—a decadal community planning exercise that forges a vision of scientific priorities and future facilities. Organized by the Division of Particles and Fields of the American Physical Society, this year’s Snowmass meetings considered a range of plans including neutrino experiments and muon colliders. One new idea that generated buzz was the Cool Copper Collider (or C3 for short). This proposal calls for accelerating particles with conventional, or “normal-conducting,” radio frequency (RF) cavities—as opposed to the superconducting RF cavities used in modern colliders. This “retro” design could potentially achieve 500 GeV collision energies with an 8-km-long linear collider, making it significantly smaller and presumably less expensive than a comparable superconducting design.
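As a rough back-of-the-envelope check on those numbers, the sketch below estimates the smallest average accelerating gradient such a machine would need. It assumes two equal linacs facing each other, each delivering half the collision energy, and it assumes the entire 8 km is accelerating structure. Neither assumption comes from the proposal itself, and the function name is purely illustrative. A real design would need a higher gradient, since part of the length goes to other systems.

```python
def min_average_gradient_mev_per_m(collision_energy_gev: float,
                                   total_length_km: float) -> float:
    """Lower bound on the average gradient for a two-linac collider.

    Assumption (not from the proposal): the full length is split evenly
    between two linacs and every meter of it accelerates the beam.
    """
    beam_energy_gev = collision_energy_gev / 2      # each beam carries half
    linac_length_m = total_length_km * 1000 / 2     # one linac per beam
    # An electron gains 1 MeV per meter in a 1 MV/m field.
    return beam_energy_gev * 1000 / linac_length_m


gradient = min_average_gradient_mev_per_m(500, 8)
print(f"at least {gradient} MeV per meter on average")
```

Under these simplifying assumptions, 500 GeV in 8 km works out to at least 62.5 MeV of energy gain per meter.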

The goal of the C3 project would be to operate as a Higgs factory, which in particle-physics parlance is a collider that smashes together electrons and their antimatter partners, called positrons, at energies above 250 GeV. Such a facility would make loads of Higgs bosons with less of the mess that comes from smashing protons together, as is done at the Large Hadron Collider (LHC) in Switzerland. A Higgs factory would give more precise measurements than the LHC of the couplings between Higgs bosons and other particles, potentially uncovering small discrepancies that could lead to new theories of particle physics. “I think the Higgs is the most interesting particle that’s out there,” says Emilio Nanni from the SLAC National Accelerator Laboratory in California. “And we should absolutely build a machine that’s dedicated to studying it with as much precision as possible.”

But an outsider might wonder why another Higgs-factory proposal is being added to the particle-physics menu. A similar factory design—the International Linear Collider (ILC)—has been in the works for years, but that project is presently stalled, as the Japanese government has not yet confirmed its support for building the facility in Japan. Waiting in the wings are several other large particle-physics proposals, including CERN’s Future Circular Collider and China’s Circular Electron Positron Collider.

Twelve new security flaws affecting various chipsets were disclosed in this month’s Qualcomm security advisory, two of which have been given a critical severity rating. These two flaws might allow malicious payloads to be installed remotely on Android devices.

The first vulnerability, identified as CVE-2022-25748 (CVSS score 9.8), affects Qualcomm’s WLAN component and is described as an “Integer Overflow to Buffer Overflow during parsing GTK frames”. If exploited, this issue might result in memory corruption and remote code execution. The vulnerability impacts all smart devices that use the Qualcomm Snapdragon APQ, CSRA, IPQ, MDM, MSM, QCA, WSA, WCN, WCD, SW, SM, SDX, SD, SA, QRB, QCS, QCN, and other series.
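To see how an integer overflow can turn into a buffer overflow, consider the minimal sketch below. It is not Qualcomm’s code and does not model the real GTK frame layout. The 16-bit size field, the function names, and the lengths are all hypothetical, chosen only to show the general pattern: a length sum wraps around, the buffer is sized from the wrapped value, and the copy then uses the true length.

```python
U16_MAX = 0xFFFF  # hypothetical: lengths are stored in a 16-bit unsigned field


def alloc_size_wrapping(key_len: int, header_len: int) -> int:
    """Unchecked sum in 16-bit arithmetic: large inputs silently wrap."""
    return (key_len + header_len) & U16_MAX


def alloc_size_checked(key_len: int, header_len: int) -> int:
    """Same sum, but reject lengths that do not fit in 16 bits."""
    total = key_len + header_len
    if key_len < 0 or header_len < 0 or total > U16_MAX:
        raise ValueError("frame length overflows the 16-bit size field")
    return total


def overrun_bytes(key_len: int, header_len: int) -> int:
    """Bytes a C-style copy would write past a buffer sized with the
    wrapping sum, if the copy uses the true (unwrapped) length."""
    allocated = alloc_size_wrapping(key_len, header_len)
    return max(0, (key_len + header_len) - allocated)
```

With a claimed key length of 0xFFFC and an 8-byte header, the wrapping sum allocates only 4 bytes while the copy would move 65,540, whereas `alloc_size_checked` rejects the frame before any memory is touched.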

The second vulnerability, identified as CVE-2022-25718 (CVSS score 9.1), also affects Qualcomm’s WLAN component and is described as a “Cryptographic issue in WLAN due to improper check on return value while authentication handshake”. If exploited, this issue might result in memory corruption and remote code execution. The vulnerability impacts all smart devices that use the Qualcomm Snapdragon APQ, CSRA, IPQ, MDM, MSM, QCA, WSA, WCN, WCD, SW, SM, SDX, SD, SA, QRB, QCS, QCN, and other series.
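The general bug class named in that description, an improperly checked return value during authentication, can be illustrated with a short sketch. This is an assumption-laden toy, not the affected WLAN code: the HMAC-based check, the C-style status codes, and every function name here are hypothetical.

```python
import hashlib
import hmac


def verify_mic(key: bytes, message: bytes, mic: bytes) -> int:
    """Return 0 if the integrity code matches, -1 otherwise (C-style status)."""
    expected = hmac.new(key, message, hashlib.sha256).digest()
    return 0 if hmac.compare_digest(expected, mic) else -1


def handshake_unchecked(key: bytes, message: bytes, mic: bytes) -> bool:
    """BUG: the status from verify_mic is computed and then ignored."""
    verify_mic(key, message, mic)
    return True  # the handshake "succeeds" even for a forged message


def handshake_checked(key: bytes, message: bytes, mic: bytes) -> bool:
    """Proceed only when verification explicitly reports success."""
    return verify_mic(key, message, mic) == 0
```

A forged message with an all-zero integrity code passes `handshake_unchecked` but is rejected by `handshake_checked`, which is the whole difference between the two variants.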

A security researcher has discovered three new code execution flaws in the Linux kernel that might be exploited by a local or external adversary to take control of vulnerable computers and run arbitrary code. The roccat_report_event function in drivers/hid/hid-roccat.c has a use-after-free vulnerability identified as CVE-2022-41850 (CVSS score: 8.4). A local attacker might exploit this flaw to run malicious code on the system by submitting a report while a report->value is being copied. A patch has been released to address CVE-2022-41850 in Linux Kernel 5.19.12.

The second flaw, tracked as CVE-2022-41848 (CVSS score: 6.8), is also a use-after-free flaw, caused by a race condition between mgslpc_ioctl and mgslpc_detach in drivers/char/pcmcia/synclink_cs.c. By removing a PCMCIA device while calling ioctl, an attacker could exploit this vulnerability to execute arbitrary code on the system. The bug affects Linux Kernel 5.19.12 and has been fixed by a patch.
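The shape of such a race, where one path uses an object while another tears it down, can be modeled in a few lines. The sketch below is only an analogy: Python has no use-after-free, so the "freed" state is represented by setting a field to None, and the failure is an exception rather than memory corruption. The `Device` class and its methods are hypothetical, and the actual kernel fix may take a different form than the lock shown here.

```python
import threading


class Device:
    def __init__(self):
        self._lock = threading.Lock()
        self._state = {"value": 42}  # stands in for memory freed on detach

    def detach(self):
        """Tear-down path: releases the state under the lock."""
        with self._lock:
            self._state = None

    def ioctl_racy(self, between=None):
        """Check, then use, with no lock: detach can run in the gap."""
        present = self._state is not None
        if between:
            between()  # simulates the window in which detach runs
        if not present:
            return None
        return self._state["value"]  # fails if the state was released

    def ioctl_locked(self, between=None):
        """Check and use under the same lock that detach takes."""
        with self._lock:
            if between:
                between()
            if self._state is None:
                return None
            return self._state["value"]
```

Calling `ioctl_racy(between=dev.detach)` raises a TypeError because the state disappears between the check and the use. In `ioctl_locked`, a detach started from another thread during the window simply waits until the call has finished.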
