More Cybertruck news: four-wheel steering.

Join the family at https://DailyInvestorX.com.

The four-wheel steering feature on the Tesla Cybertruck was leaked.

#elonmusk #cybertruck #tesla

Juan Andres Guerrero-Saade’s speciality is picking apart malicious software to see how it attacks computers.

It’s a relatively obscure cybersecurity field, which is why last month he hosted a weeklong seminar at Johns Hopkins University where he taught students the complicated practice of reverse engineering malware.

Several of the students had little to no coding background, but he was confident a new tool would make it less of a challenge: He told the students to sign up for ChatGPT.

“Programming languages are languages,” Guerrero-Saade, an adjunct lecturer at Johns Hopkins, said, referring to what the ChatGPT software does. “So it has become an amazing tool for prototyping things, for getting very quick, boilerplate code.”

YouTube and TikTok are already rife with videos of people showing how they’ve found ways to have ChatGPT perform tasks that once required a hefty dose of coding ability.

Prime Minister Benjamin Netanyahu has proposed building a network of underground highway systems across the West Bank to enable the maintenance of territorial contiguity for both Israeli settlements and Palestinian towns, The Times of Israel’s sister site, Zman Yisrael, reported Saturday.

Netanyahu is aiming for high-speed tunnel routes designed ostensibly to address the problems of traffic jams and congestion, per the vision of the billionaire Elon Musk and his engineering firm, the Boring Company.

Netanyahu presented his plans during a conversation Friday with French investors in Paris at the hotel where he spent the weekend.

Food from thin air?

Food production, as we know it, is entirely dependent on land and weather conditions. Protein production is a massively disproportionate squanderer of the Earth’s resources. It’s time to enter the era of sustainable food production to liberate our planet from the burdens of agriculture.

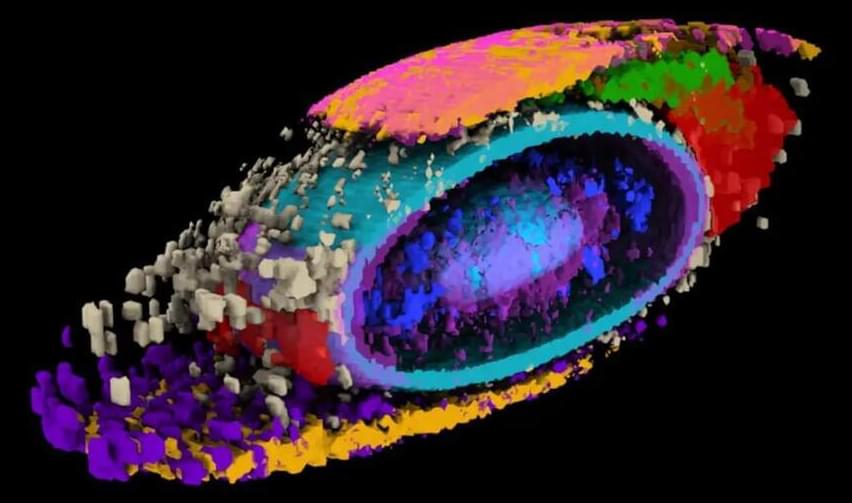

Working with hundreds of thousands of high-resolution images, researchers from the Allen Institute for Cell Science, a division of the Allen Institute, put numbers on the internal organization of human cells — a biological concept that has proven incredibly difficult to quantify until now.

The scientists also documented the diverse cell shapes of genetically identical cells grown under similar conditions in their work. Their findings were recently published in the journal Nature.

“The way cells are organized tells us something about their behavior and identity,” said Susanne Rafelski, Ph.D., Deputy Director of the Allen Institute for Cell Science, who led the study along with Senior Scientist Matheus Viana, Ph.D. “What’s been missing from the field, as we all try to understand how cells change in health and disease, is a rigorous way to deal with this kind of organization. We haven’t yet tapped into that information.”

10 AI Tools you must try in 2023. If you have used ChatGPT and noticed the power that it provides, and you think it’s time to check out more artificial intelligence tools for business or life, here are some awesome ones!

Check out Codedamn to learn some programming below! They can help you to code and get your first job in the field too!

https://cdm.sh/adrian.

00:00 — Introduction.

00:07 — MidJourney AI Art.

01:24 — Adobe Podcast AI Voice.

02:18 — Nvidia Broadcast — AI Video Geforce.

03:10 — Codedamn — With ChatGPT Support.

04:19 — Descript — AI Video.

05:12 — Notion AI — AI Text Generation.

06:26 — Synthesia — AI Avatar.

07:00 — Resemble AI — Voice AI

07:39 — Soundraw AI — AI Music and Sound.

08:13 — Futurepedia — AI Tools Database.

#ai #tools #chatgpt.

Learn Design for Developers!

A book I’ve created to help you improve the look of your apps and websites.

📘 Enhance UI: https://www.enhanceui.com/

The first 1,000 people to use the link will get a 1 month free trial of Skillshare: https://skl.sh/smeaf01231

A.I. is here to stay, whether you like it or not.

Instead of fighting against it, let’s adapt and start utilizing it within our workflows!

Cascadeur recently released its software to the public for free, and the A.I.-assisted tools are absolutely unreal.

Grab Cascadeur for free below.

https://cascadeur.com/download.

🔽SUPPORT THE CHANNEL (& Yourself ❤️)🔽

📚Level Up Academii📚

https://youtube.com/watch?v=V8cPdjO3a_U&feature=share

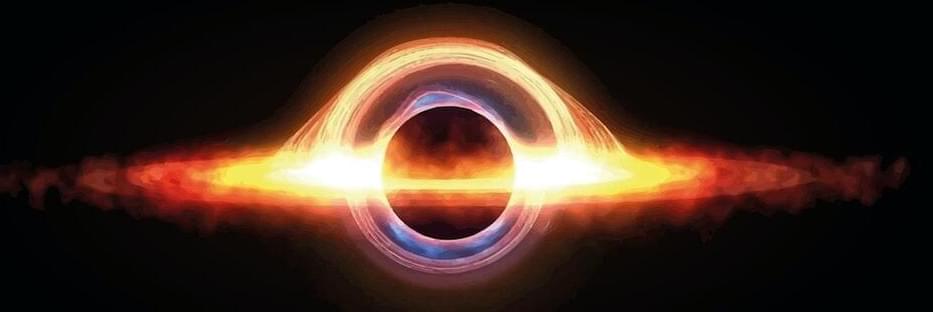

Find out what the world will be like a million years from now, as well as what kind of technology we’ll have available.

► All-New Echo Dot (5th Generation) | Smart Speaker with Clock and Alexa | Cloud Blue: https://amzn.to/3ISUX1u.

► Brilliant: Interactive Science And Math Learning: https://bit.ly/JasperAITechUniNet.

Timestamps:

0:00 No Physical Bodies.

1:51 Wormhole Creation.

2:44 Travel At Speed Of Light.

3:21 Type 3 Civilization.

4:52 Gravitational Waves.

5:46 Computers the Size of Planets.

6:56 Computronium.

I explain the following ideas on this channel:

* Technology trends, both current and anticipated.

* Popular business technology.

* The Impact of Artificial Intelligence.

* Innovation In Space and New Scientific Discoveries.

* Entrepreneurial and Business Innovation.

Subscribe link.

https://www.youtube.com/channel/UCpaciBakZZlS3mbn9bHqTEw.

Disclaimer:

Some of the links contained in this description are affiliate links.

As an Amazon Associate, I get commissions on orders that qualify.

This video describes the world and its technologies in a million years.

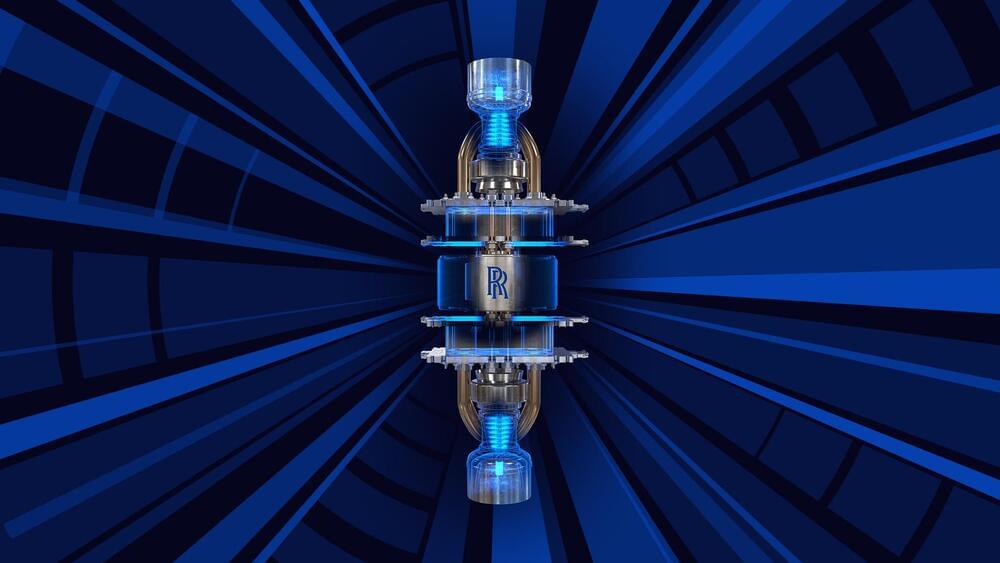

The future of deep space exploration is near. Rolls-Royce revealed a new image of a micro-reactor for space that it says is “designed to use an inherently safe and extremely robust fuel form.”

The iconic engineering firm recently tweeted the image alongside a caption. It is designing the nuclear fission system as part of an agreement it penned with the UK Space Agency in 2021.

Nuclear propulsion systems for space, which harness the energy produced during the splitting of atoms, have great potential for accelerating space travel and reducing transit times. This could be of particular importance when sending humans to Mars…

Rolls-Royce/Twitter.
