A Swedish company has secured funding to build the first large-scale hydrogen-based steel plant, which could remove 95% of CO2 emissions from the reduction process.

With the new observations, we are seeing particle physics take shape as the new physics governing even long-standing laws like gravity. We are also seeing that string theory is still alive and well. I think we may never know everything unless we essentially become a Type 5 civilization or beyond.

The finding cannot be explained by classical assumptions.

An international team of astrophysicists has made a puzzling discovery while analyzing certain star clusters. The finding challenges Newton’s laws of gravity, the researchers write in their publication. Instead, the observations are consistent with the predictions of an alternative theory of gravity, although that theory is controversial among experts. The results have now been published in the Monthly Notices of the Royal Astronomical Society. The University of Bonn played a major role in the study.

In their work, the researchers investigated so-called open star clusters. These are loosely bound groups of a few tens to a few hundred stars found in spiral and irregular galaxies. Open clusters form when thousands of stars are born within a short time in a huge gas cloud. As they “ignite,” the galactic newcomers blow away the remnants of the gas cloud, and the cluster expands greatly in the process. This creates a loose formation of several dozen to several thousand stars, held together by the weak gravitational forces acting between its stars.

Given a 3D piece of origami, can you flatten it without damaging it? The answer is hard to predict just by looking at the design, because every single fold in the design has to be compatible with flattening.

This is an example of a combinatorial problem. New research led by the UvA Institute of Physics and the research institute AMOLF has demonstrated that machine learning algorithms can answer these kinds of questions accurately and efficiently. The work is expected to boost the artificial intelligence-assisted design of complex and functional (meta)materials.

In their latest work, published in Physical Review Letters this week, the research team tested how well artificial intelligence (AI) can predict the properties of so-called combinatorial mechanical metamaterials.

Virtual reality (VR) and augmented reality (AR) headsets are becoming more advanced, enabling ever more engaging and immersive digital experiences. To make VR and AR experiences even more realistic, engineers have been trying to build better systems that produce tactile and haptic feedback matching virtual content.

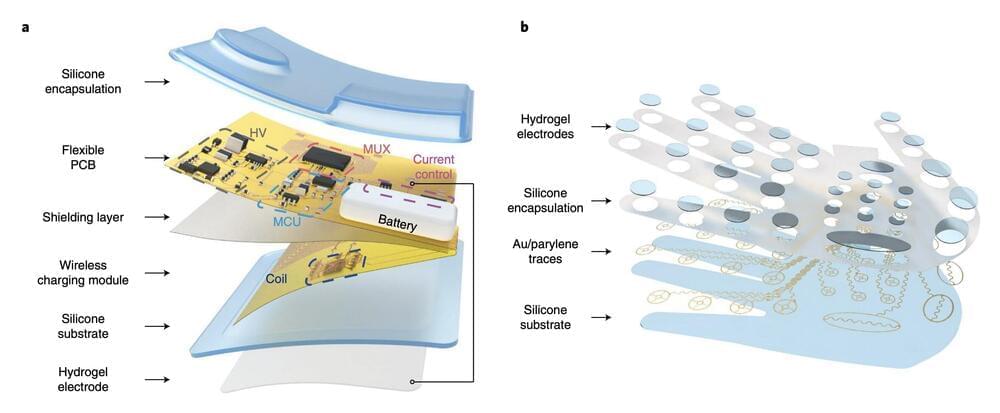

Researchers at the University of Hong Kong, City University of Hong Kong, the University of Electronic Science and Technology of China (UESTC) and other institutes in China have recently created WeTac, a miniaturized, soft and ultrathin wireless electrotactile system that produces tactile sensations on a user’s skin. The system, introduced in Nature Machine Intelligence, works by delivering electrical current through the user’s hand.

“As the tactile sensitivity among different individuals and different parts of the hand within a person varies widely, a universal method to encode tactile information into faithful feedback in hands according to sensitivity features is urgently needed,” Kuanming Yao and his colleagues wrote in their paper. “In addition, existing haptic interfaces worn on the hand are usually bulky, rigid and tethered by cables, which is a hurdle for accurately and naturally providing haptic feedback.”

To produce the next generation of high-frequency antennae for 5G, 6G and other wireless devices, a team at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) has invented a machine and manufacturing technique that uses 3D printing to manipulate microscopic objects and braid them into filaments a mere micrometre in diameter.

How small is this? One human hair varies in diameter between 20 and 200 micrometres from tip to root. Spider web silk can vary from 3 to 8 micrometres in diameter. So that’s teeny tiny. And for us to pack in the many antennae that go into mobile phone technology today, the smaller the better.

Current manufacturing techniques can’t make one-micrometre filaments, but the machine invented by the Harvard SEAS team can. How does it do it? It uses the surface tension of water to grab and manipulate micromaterials. Capillary forces in the water are harnessed to drive the assembly through variable-width channels inside the machine. Using 3D printing and the hydrophilic properties of the machine’s walls, the team used surface tension to guide Kevlar nanowires attached to small floats. As the floats travelled through the device, the nanowires plaited into micrometre-scale braids.

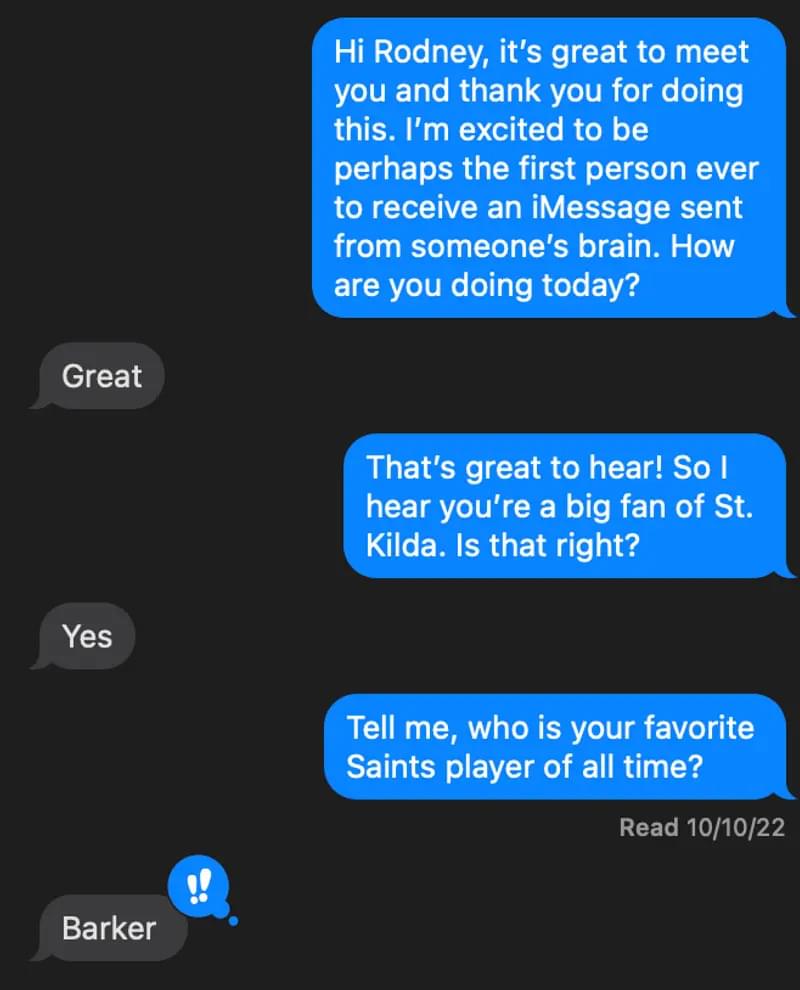

A novel brain-computer interface developed by a New York-based company called Synchron has just been used to help a paralyzed patient send messages from their Apple device for the very first time. It is a major step forward for a field that has been reporting progress at an increasing pace, and it suggests that interfacing our minds with consumer devices could happen a lot sooner than some of us bargained for.

Brain-computer devices eavesdrop on brainwaves and convert these into commands. More or less the same neural signals that healthy people use to instruct their muscle fibers to twitch and enact a movement like walking or grasping an object can be used to command a robotic arm or move a cursor on a computer screen. It really is a phenomenal and game-changing piece of technology, with obvious benefits for those who are completely paralyzed and have few if any means of communicating with the outside world.

This type of technology is not exactly new. Scientists have been experimenting with brain-computer interfaces for decades, but only in the last couple of years or so have we seen tremendous progress. Even Elon Musk has jumped on the bandwagon, founding a company called Neuralink with the ultimate goal of developing technology that lets people transmit and receive information between their brain and a computer wirelessly, essentially connecting the human mind to devices. The idea is for anyone to be able to use this technology, even healthy people who want to augment their abilities by interfacing with machines. In 2021, Neuralink released a video of a monkey with an implanted Neuralink device playing Pong, and the company wants to start clinical trials with humans soon.

A woman in Spain who inherited gene mutations from both parents has battled 12 tumors in her body.

According to the Spanish National Cancer Research Centre (CNIO), the woman developed her first tumor as a baby, and other tumors followed within five years. The 36-year-old patient has developed 12 tumors in her life, at least five of them malignant. Each one has been of a different kind and has affected a different part of the body.

“We still don’t understand how this individual could have formed during the embryonic stage, nor could have overcome all these pathologies,” says Marcos Malumbres, director of the Cell Division and Cancer Group at the Spanish National Cancer Research Centre (CNIO).

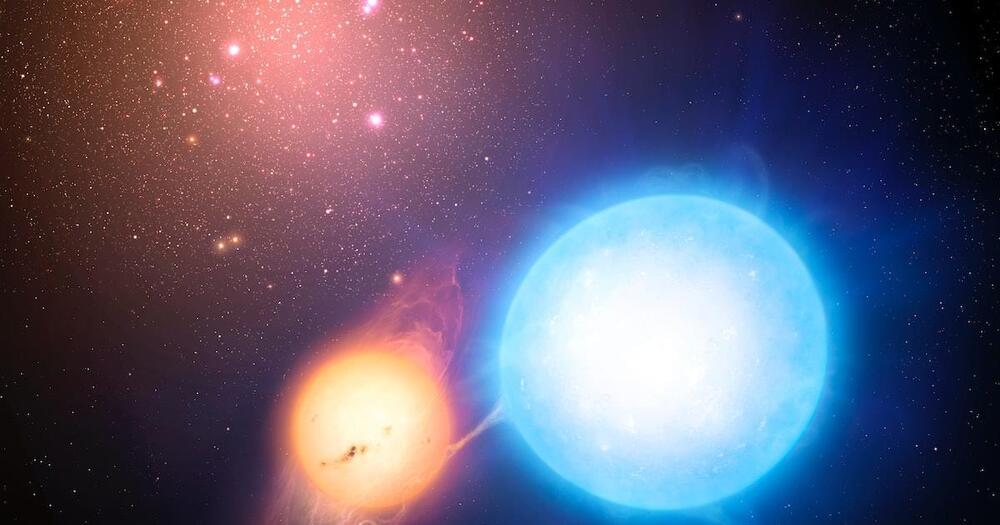

Sometimes astrophysics gets super weird.

A recent study of the surface of the star Gamma Columbae, published in the journal Nature Astronomy, says that we’re seeing the star in a short, deeply weird phase of a very eventful stellar life, one that lets astronomers look directly into its exposed heart.

What’s New – The mix of chemical elements on the surface of Gamma Columbae looks like the byproducts of nuclear reactions that should be buried deep inside a massive star, not bubbling up on its surface.