Deepfakes aren’t new, but this AI-powered technology has emerged as a pervasive threat, spreading misinformation and driving up identity fraud. The pandemic made matters worse by creating ideal conditions for bad actors to exploit the blind spots of organizations and consumers, fueling fraud and identity theft. Fraud stemming from deepfakes spiked during the pandemic and poses significant challenges for financial institutions and fintechs, which need to accurately authenticate and verify identities.

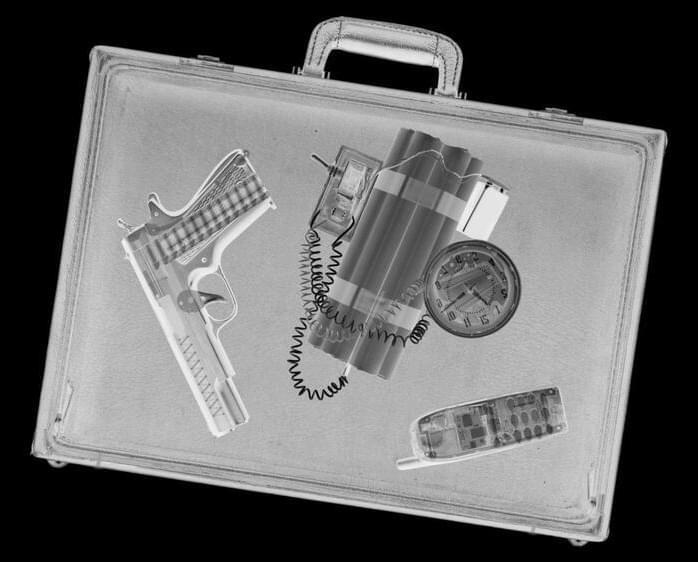

As cybercriminals continue to use tools like deepfakes to fool identity verification solutions and gain unauthorized access to digital assets and online accounts, it’s essential for organizations to automate the identity verification process to better detect and combat fraud.