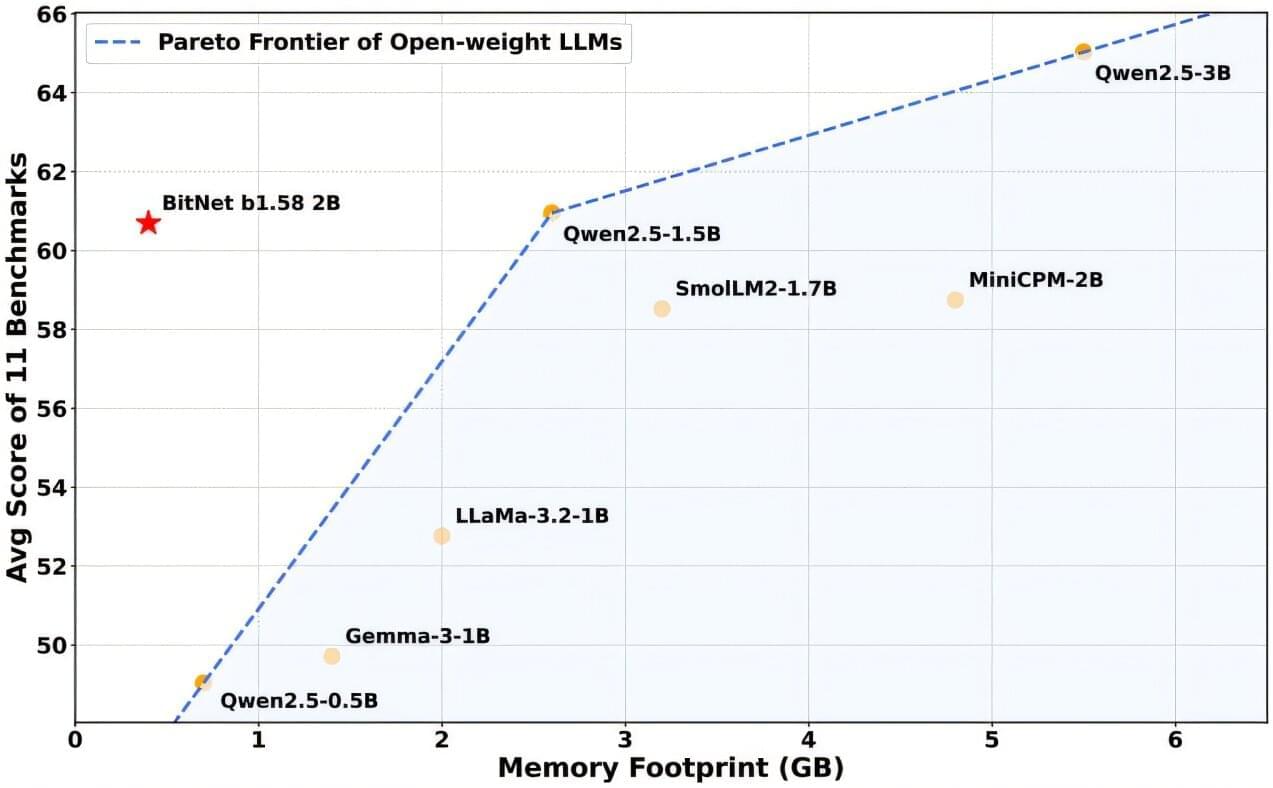

A group of computer scientists at Microsoft Research, working with a colleague from the University of Chinese Academy of Sciences, has introduced a new Microsoft AI model that runs on a regular CPU rather than a GPU. The researchers have posted a paper on the arXiv preprint server describing how the model was built, its characteristics, and how well it has performed in testing so far.

Over the past several years, large language models (LLMs) have become all the rage. Models such as the ones powering ChatGPT have been made available to users around the globe, introducing many people to the idea of intelligent chatbots. One thing most of them have in common is that they are trained and run on GPUs. This is because of the enormous computing power needed to train them on massive amounts of data.

More recently, concerns have been raised about the huge amounts of energy data centers consume to support the many chatbots now in use for various purposes. In this new effort, the team has found what it describes as a smarter way to process the data, and has built a model to prove it.