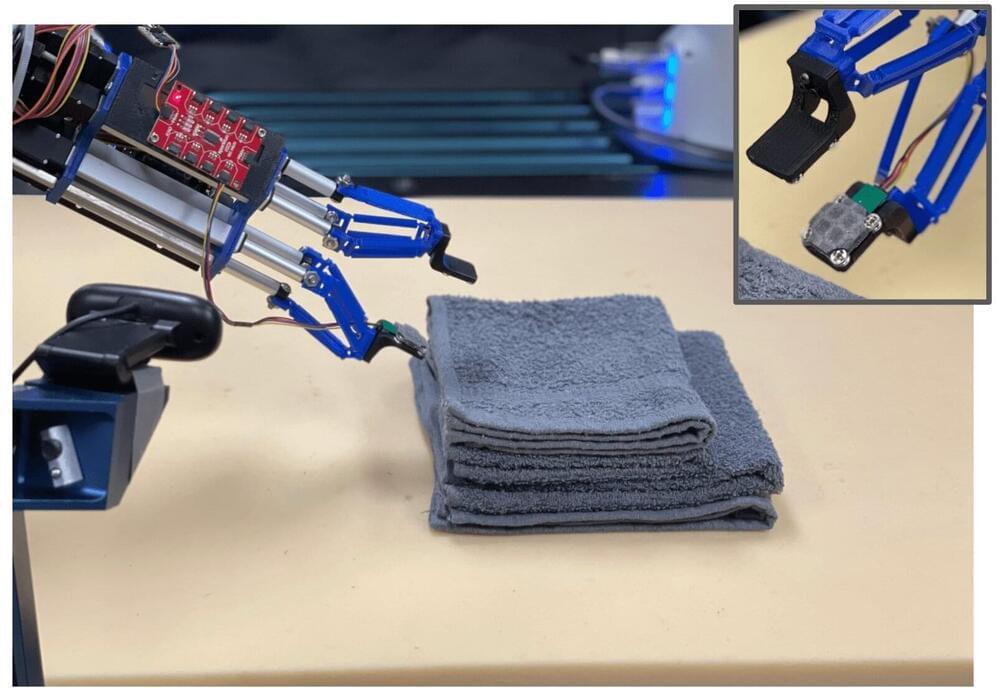

New research from Carnegie Mellon University’s Robotics Institute can help robots feel layers of cloth rather than relying on computer vision tools only to see them. The work could allow robots to assist people with household tasks like folding laundry.

Humans use their senses of sight and touch to grab a glass or pick up a piece of cloth. It is so routine that little thought goes into it. For robots, however, these tasks are extremely difficult. The amount of data gathered through touch is hard to quantify, and the sense has been hard to simulate in robotics, at least until recently.

“Humans look at something, we reach for it, then we use touch to make sure that we’re in the right position to grab it,” said David Held, an assistant professor in the School of Computer Science and head of the Robots Perceiving and Doing (R-Pad) Lab. “A lot of the tactile sensing humans do is natural to us. We don’t think that much about it, so we don’t realize how valuable it is.”