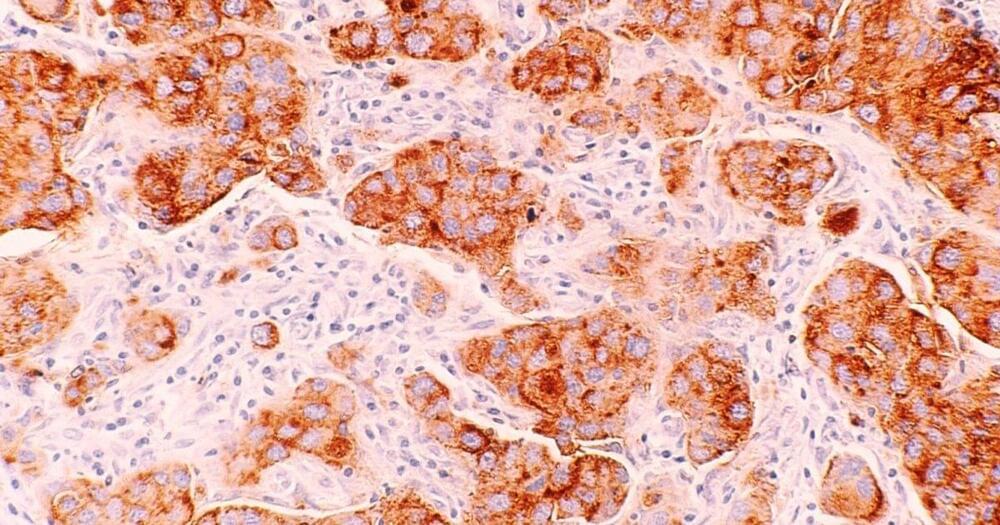

Thanks to Cancer Research UK! http://www.cancerresearchuk.org

Links to LEARN MORE and SOURCES are below.

Follow me: http://www.twitter.com/tweetsauce

What is cancer?

http://www.cancerresearchuk.org/cancer-info/cancerandresearc…is-cancer/

http://www.cancerresearchuk.org/cancer-info/cancerstats/keyfacts/

http://en.wikipedia.org/wiki/Hallmarks_of_cancer

Visualizing the prevention of cancer: http://www.cancerresearchuk.org/cancer-info/cancerstats/caus…alisation/

10 cancer MYTHS debunked: http://scienceblog.cancerresearchuk.org/2014/03/24/dont-beli…-debunked/

Other cancer articles: