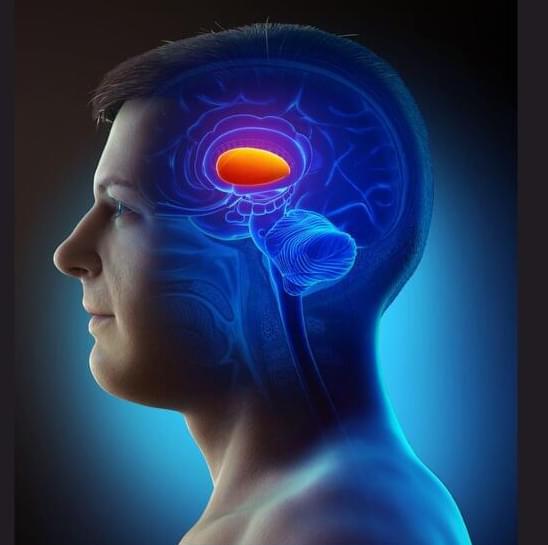

Scientists from the University of Sydney and Fudan University have discovered that human brain signals traveling across the outer layer of neural tissue naturally arrange themselves into swirling spirals.

Published in the journal Nature Human Behaviour, the study suggests that these widespread spiral patterns, seen during both rest and cognitive activity, play a role in organizing brain function and cognitive processes.

Senior author Associate Professor Pulin Gong, from the School of Physics in the Faculty of Science, said the discovery has the potential to advance powerful computing machines inspired by the intricate workings of the human brain.