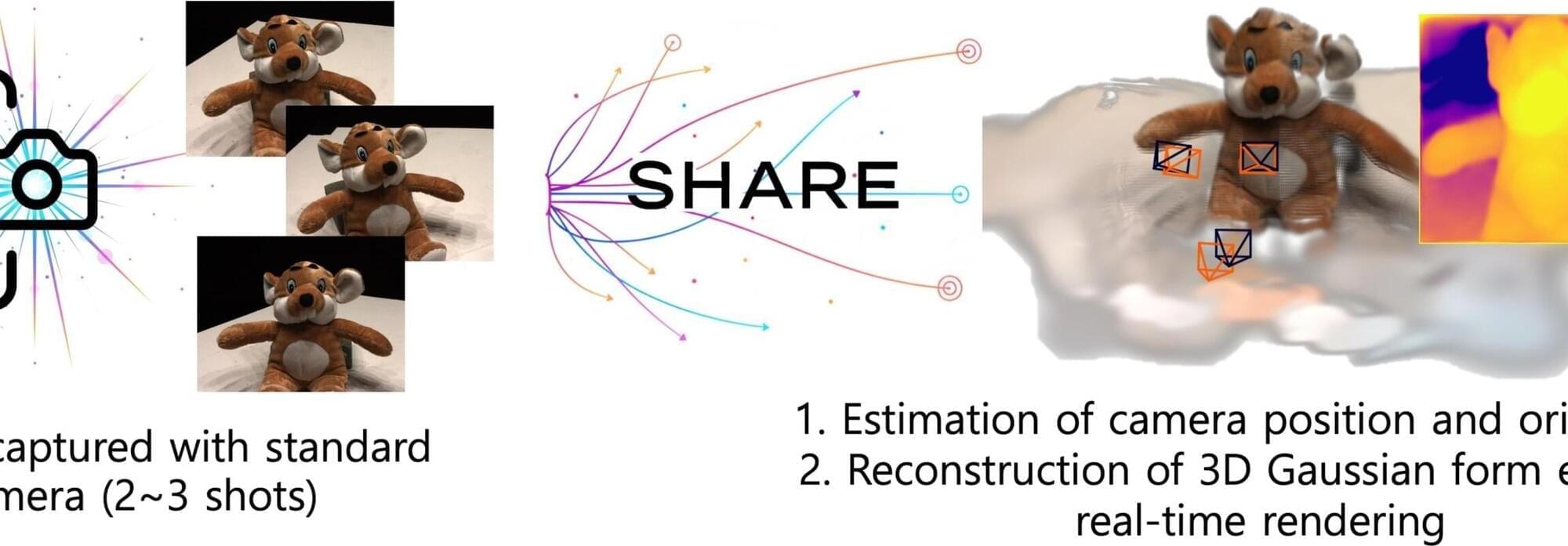

Existing 3D scene reconstruction methods require a cumbersome process: precisely measuring physical spaces with LiDAR or 3D scanners, or collecting thousands of photos along with camera pose information. A research team at KAIST has overcome these limitations and introduced a technology that reconstructs 3D scenes, from tabletop objects to outdoor scenes, with just two to three ordinary photographs.

The results, posted to the arXiv preprint server, suggest a new paradigm in which spaces captured by camera can be immediately transformed into virtual environments.

The research team, led by Professor Sung-Eui Yoon of the School of Computing, developed a new technology called SHARE (Shape-Ray Estimation), which can reconstruct high-quality 3D scenes using only ordinary images, without precise camera pose information.