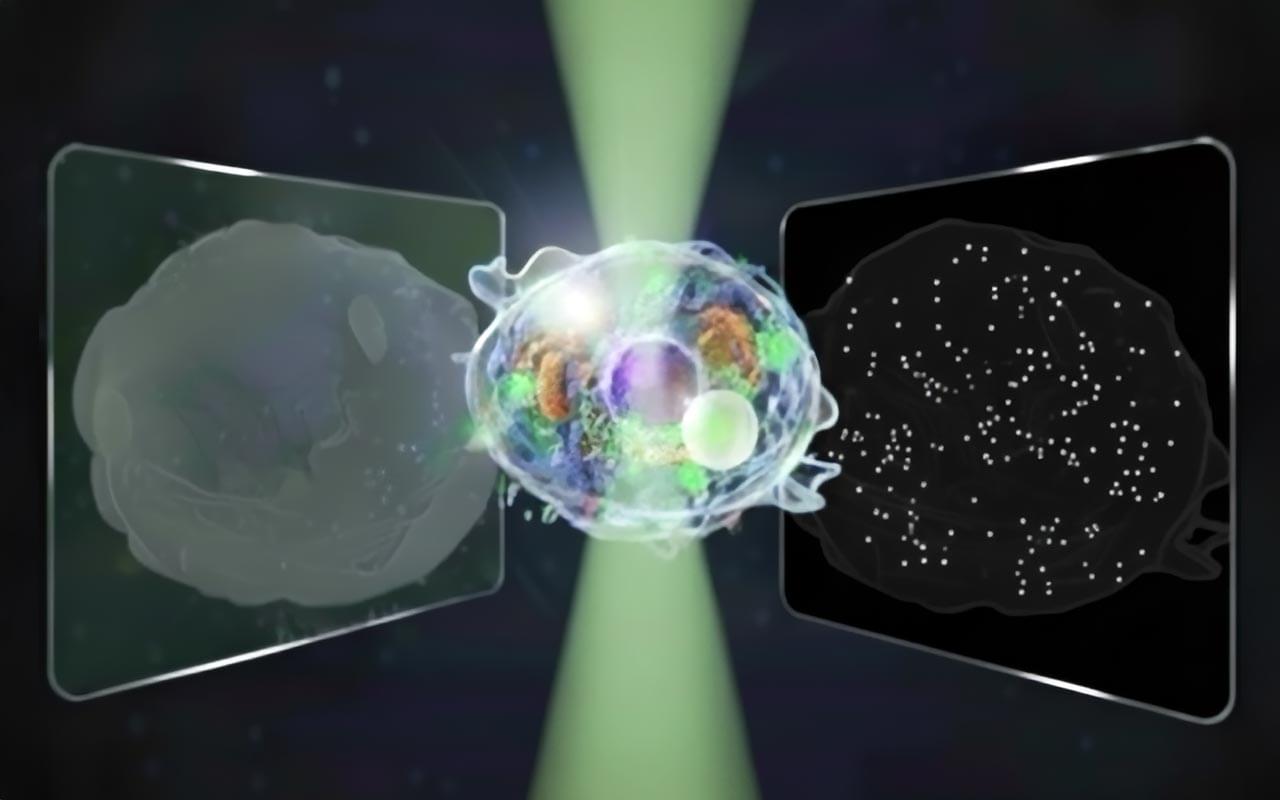

After numerous successful trials in the model, the team set out to show what the microrobot could achieve under real clinical conditions. First, they demonstrated in pigs that all three navigation methods worked and that the microrobot remained clearly visible throughout the entire procedure. The investigators then navigated microrobots through the cerebrospinal fluid of a sheep.

“This complex anatomical environment has enormous potential for further therapeutic interventions, which is why we were so excited that the microrobot was able to find its way in this environment too,” Landers noted. “In vivo experiments conducted with an ovine model demonstrated the platform’s ability to operate within anatomically constrained regions of the central nervous system,” the investigators stated in their paper. “Furthermore, in a porcine model, all locomotion strategies were validated under clinical conditions, confirming precise microrobot navigation within the cerebrovascular system and highlighting the system’s compatibility with versatile in vivo environments.”

In addition to treating thrombosis, these new microrobots could also be used to treat localized infections or tumors. At every stage of development, the research team has stayed focused on its goal: ensuring that everything it creates is ready for use in operating theaters as soon as possible. The next step is to move toward human clinical trials. “The use of materials that have been FDA approved for other intravascular applications, coupled with the modular design of the robotic platform, should simplify translation and adaptability to a range of clinical workflows,” the authors concluded. Speaking about what motivates the whole team, Landers said, “Doctors are already doing an incredible job in hospitals. What drives us is the knowledge that we have a technology that enables us to help patients faster and more effectively and to give them new hope through innovative therapies.”