A new analysis shows that “open source” AI tools like Llama 2 are still controlled by big tech companies in a number of ways.

Disavowal, though, is not only about waste. The disavowal of dark truths is arguably a theme of modernity itself. Modern practices around death are revealing in this regard: In many traditional societies, a corpse is kept in the family space until its burial; in most modern societies, the dead body is carted off immediately. Embalming is common to halt (and hide) the process of decay. It is precisely this approach that Lee’s mushroom burial suit is critiquing.

From a fungal vantage point, this system is indeed psychotic. Mycoremediation may not be the systemic intervention that was hoped for, but as an expression of one’s personal concern for our toxified landscape, it is far from insignificant. Rather, it is a tangible way for people without much institutional power to engage in the ongoing fight against environmental damage, to try to contain the disasters seeping around us. As a domestic intervention, mycoremediation is modest but culturally meaningful — a method of repair and reconnection.

The power of fungi comes from the proximity they have with dark truths: the abject, the mess we need to face, mortality, vitality, kinship. In other contexts, this proximity elicits wariness, but in our current crisis, it holds the possibility of a healing power — a pharmacological power. Fungi can take on the mess and the junk, break it down and transform and incorporate it rather than ignore it.

Drug development is a huge and growing component of healthcare research; however, about 90% of drug candidates fail to make it through clinical trials. Drugs designed to target cancer fail because of many obstacles, including the tumor microenvironment (TME), the area surrounding the tumor. The TME is composed of multiple cell types that suppress the immune system and allow the tumor to grow. Because so many mechanisms shape the TME, it is difficult to prescribe anti-cancer drugs that completely eliminate a tumor. Combination therapies are often needed, but doctors then run the risk of adverse side effects from the combined toxicity of multiple drugs.

Recently, a study published in eLife by Dr. Jennifer Gerton of the Stowers Institute for Medical Research in Kansas City, Missouri, reported one critical reason why patients may experience unexpected side effects at the cellular level.

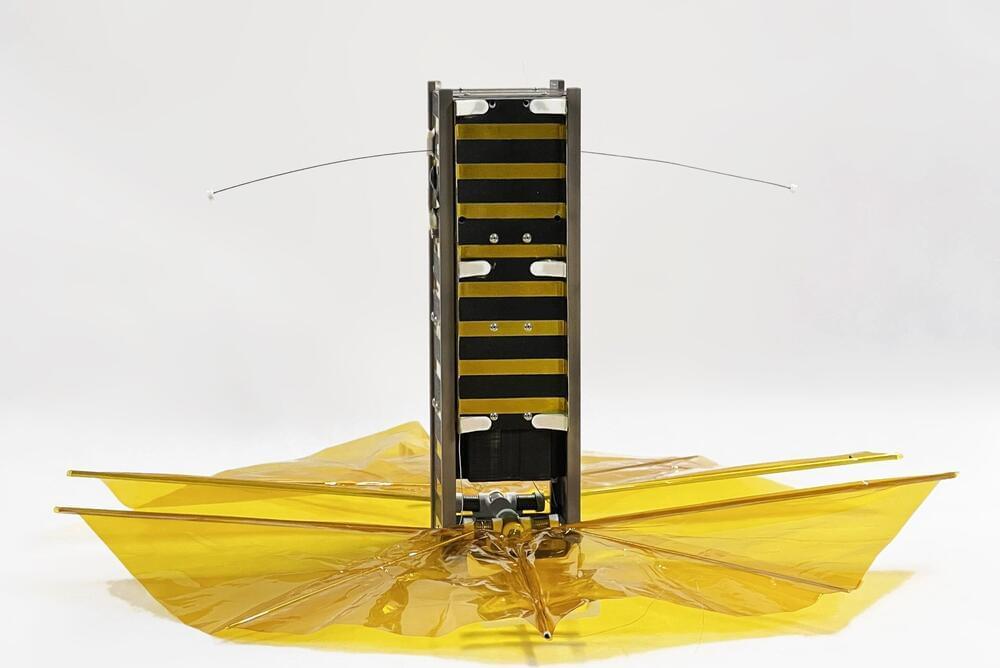

SBUDNIC, built by an academically diverse team of students, was confirmed to have successfully reentered Earth’s atmosphere in August, demonstrating a practical, low-cost method to cut down on space debris.

When it comes to space satellites, getting the math wrong can be catastrophic for an object in orbit, potentially leading to its abrupt or fiery demise. In this case, however, the fiery end was cause for celebration.

About five years ahead of schedule, a small cube satellite designed and built by Brown University students to demonstrate a practical, low-cost method to cut down on space debris reentered Earth's atmosphere sometime on Tuesday, Aug. 8, or immediately after, burning up high above Turkey after 445 days in orbit, according to its last tracked location from U.S. Space Command.

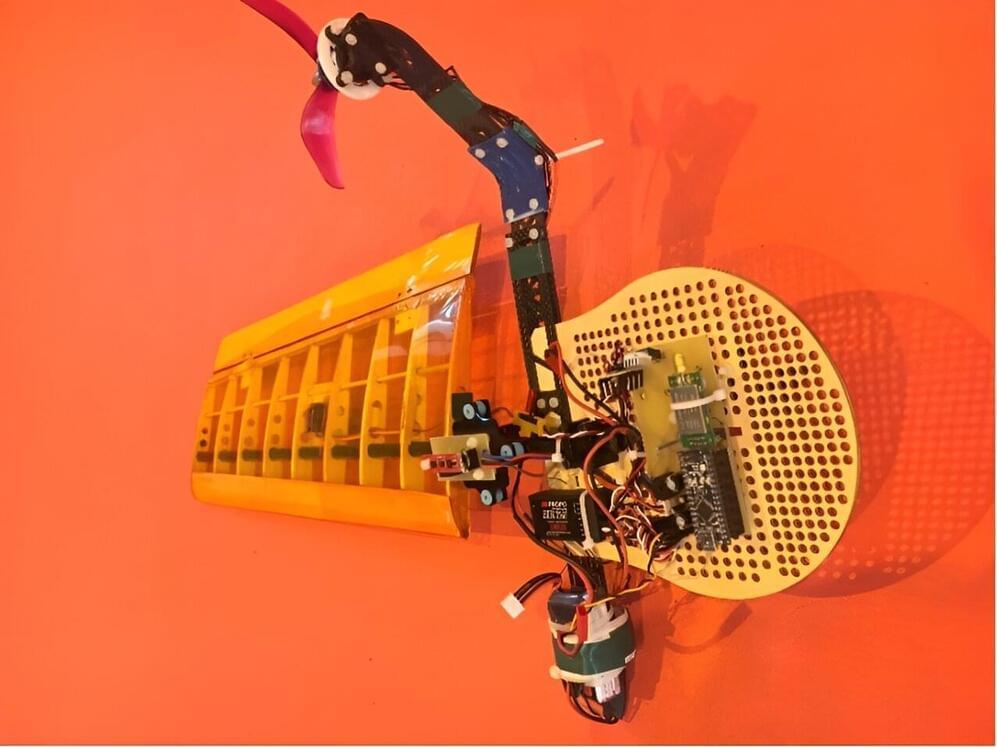

Flying robotic systems have already proved highly promising for tackling numerous real-world problems, including exploring remote environments, delivering packages to inaccessible sites, and searching for survivors of natural disasters. In recent years, roboticists and computer scientists have introduced a multitude of aerial vehicle designs, each with distinct advantages and features.

Researchers at Sharif University of Technology in Iran recently carried out a study exploring the potential of flying robotic systems with a single wing, known as mono-wing aerial vehicles. Their paper, published in the Journal of Intelligent & Robotic Systems, outlines a new approach that could help to better control the flight of these vehicles as they navigate their surrounding environment.

“Unconventional vehicles inspired by natural phenomena consistently captivate the attention of engineers,” Afshin Banazadeh, one of the researchers who carried out the study, told Tech Xplore. “One such vehicle, the mono-wing, a single-bladed aerial vehicle, is no exception.”

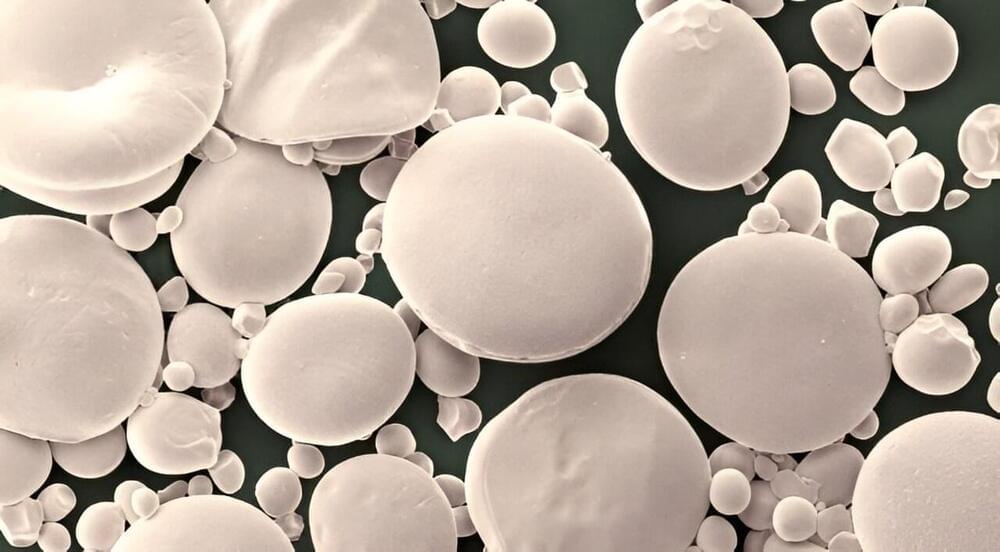

Research has brought clarity to the longstanding question of how starch granules form in the seeds of Triticeae crops—wheat, barley, and rye—unlocking diverse potential benefits for numerous industries and for human health.

Starch in wheat, maize, rice, and potatoes is a vital energy-giving part of our diet and a key ingredient in many industrial applications, from brewing and baking to the production of paper, glue, textiles, and construction materials.

Starch granules of different crops vary greatly in size and shape. Wheat starch (like that of other Triticeae) is unique in having two distinct types of granules: large A-type granules and smaller B-type granules.

Fascinating discussion of how biotech companies (especially Paratus) and academic researchers are leveraging the unique immunobiology of bats to find new ways of treating inflammation, cancer, infections, metabolic diseases, and more. Exploration through comparative genomic approaches, as well as newly created bat iPSCs, is yielding mechanistic discoveries that could inform the medicines of tomorrow.

Harnessing the unusual biology of bats, researchers aim to turn drug discovery upside-down.

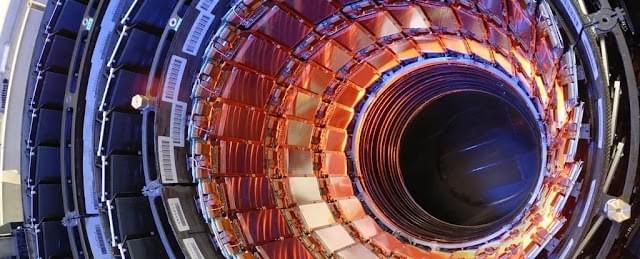

This study developed a high-efficiency neutron detector array with an exceptionally low background to measure the cross-section of the ¹³C(α, n)¹⁶O reaction at the China Jinping Underground Laboratory (CJPL). The array comprises 24 ³He proportional counters embedded in a polyethylene moderator and shielded by a 7% borated polyethylene layer. The neutron background at CJPL was as low as 4.5 counts/h, of which 1.94 counts/h was attributed to internal α radioactivity. Notably, the angular distribution of the ¹³C(α, n)¹⁶O reaction was shown to be a primary variable affecting the detection efficiency. The detection efficiency of the array for neutrons from 0.1 MeV to 4.5 MeV was determined using the ⁵¹V(p, n)⁵¹Cr reaction, carried out with the 3 MV tandem accelerator at Sichuan University, together with Monte Carlo simulations. Future studies could further improve the accuracy of the efficiency by measuring the angular distribution of the ¹³C(α, n)¹⁶O reaction.

The Gamow window is the range of energies in which nuclear reactions most effectively occur in stars at a given temperature. The nuclear cross-section describes the probability that a nuclear reaction will occur. The ¹³C(α, n)¹⁶O reaction is the main neutron source for the slow neutron capture process (s-process) in asymptotic giant branch (AGB) stars, where it occurs within a Gamow window spanning 150 to 230 keV. Hence, the cross-section of the ¹³C(α, n)¹⁶O reaction must be measured precisely in this energy range, which requires a neutron detector with low background and high detection efficiency. This study developed a low-background neutron detector array with high detection efficiency to meet these demands. With this development, advanced studies, including direct cross-section measurements of the key neutron-source reactions in stars, can be conducted in the near future.
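To make the Gamow window concrete, here is a small Python sketch of the standard textbook approximations for a non-resonant charged-particle reaction. The Gamow peak energy is E₀ = 0.1220 (Z₁²Z₂²μT₉²)^(1/3) MeV and the window width is Δ = 0.2368 (Z₁²Z₂²μT₉⁵)^(1/6) MeV. Here μ is the reduced mass in atomic mass units and T₉ is the temperature in units of 10⁹ K. This is an illustration, not the study's method. The function name `gamow_window` is hypothetical. Integer mass numbers stand in for exact atomic masses. T₉ = 0.09 (about 9 × 10⁷ K) is an assumed typical temperature for ¹³C burning in AGB stars.

```python
# Sketch: Gamow peak energy and window width for a charged-particle reaction,
# using the standard non-resonant approximations (all energies in MeV).
# Assumption: mass numbers are used in place of exact atomic masses.


def gamow_window(z1: int, z2: int, a1: float, a2: float, t9: float) -> tuple[float, float]:
    """Return (E0, delta) in MeV.

    z1, z2: nuclear charges of projectile and target
    a1, a2: masses in amu (mass numbers as an approximation)
    t9:     temperature in units of 1e9 K
    """
    mu = a1 * a2 / (a1 + a2)  # reduced mass in amu
    # Gamow peak: energy where the Maxwell-Boltzmann x tunneling product peaks
    e0 = 0.1220 * (z1**2 * z2**2 * mu * t9**2) ** (1 / 3)
    # Full width of the peak at 1/e of its maximum (Gaussian approximation)
    delta = 0.2368 * (z1**2 * z2**2 * mu * t9**5) ** (1 / 6)
    return e0, delta


if __name__ == "__main__":
    # 13C + alpha: Z = 6 and 2, A = 13 and 4; assumed AGB temperature T9 = 0.09
    e0, delta = gamow_window(6, 2, 13, 4, 0.09)
    low, high = e0 - delta / 2, e0 + delta / 2
    print(f"E0 = {e0 * 1e3:.0f} keV, window = {low * 1e3:.0f} to {high * 1e3:.0f} keV")
```

For ¹³C + α at the assumed T₉ = 0.09, this gives a peak near 190 keV and a window of roughly 140 to 230 keV, broadly consistent with the 150 to 230 keV range quoted in the text. Because the cross-section falls steeply at these low energies, this is exactly the region where a low-background detector matters most.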

Low-background neutron detectors play a crucial role in research on nuclear astrophysics, neutrino physics, and dark matter. By improving the efficiency and technological capability of low-background neutron detectors, this study indirectly supports research in these fields. Materials science and nuclear reactor technology could also benefit from improved neutron detector technology. Given the potential applications and extensions of these findings, this work aligns well with UN Sustainable Development Goal 9 (SDG 9): Industry, Innovation and Infrastructure.

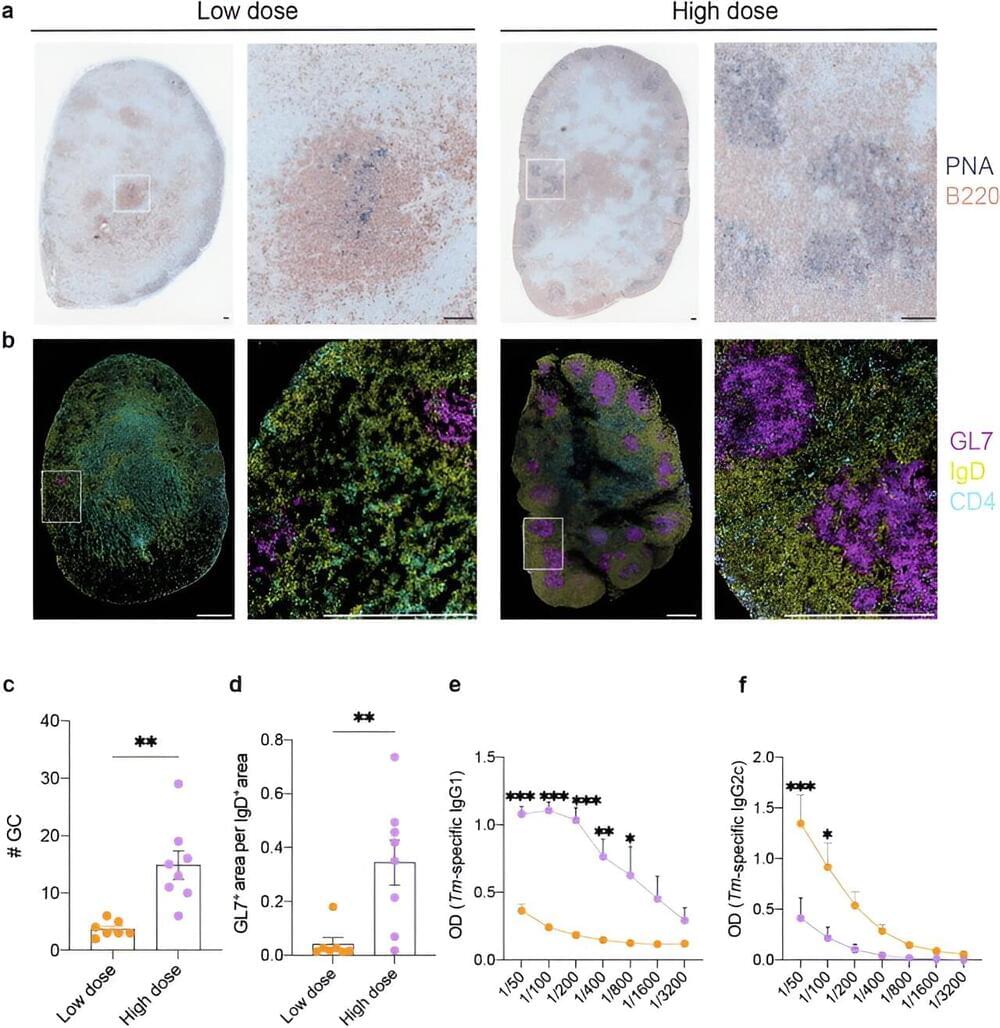

Monash University researchers have uncovered why some intestinal worm infections become chronic in animal models, which could eventually lead to human vaccines and improved treatments.

Parasitic worms, also called helminths, typically infect their host by living in the gut. About a quarter of the world's population is afflicted with helminth infections.

They are highly prevalent in developing regions such as sub-Saharan Africa, South America, and parts of tropical Asia. In Australia, they can be a problem in First Nations communities.