Neuron devices: prospects for recognition and interfaces.

That was fast.

The Columbus Dispatch, a newspaper serving the Columbus, Ohio area, has suspended its AI efforts after its AI-powered sports writing bot was caught churning out horrible, robotic articles about local sports, Axios reports.

The Dispatch — which is notably owned by USA Today publisher Gannett — only started publishing the AI-generated sports pieces on August 18, using the bot to drum up quick-hit stories about the winners and losers in regional high school football and soccer matches. And though the paper’s ethics disclosure states that all AI-spun content featured in its reporting “must be verified for accuracy and factuality before being used in reporting,” we’d be surprised if a single human eye was laid on these articles before publishing.

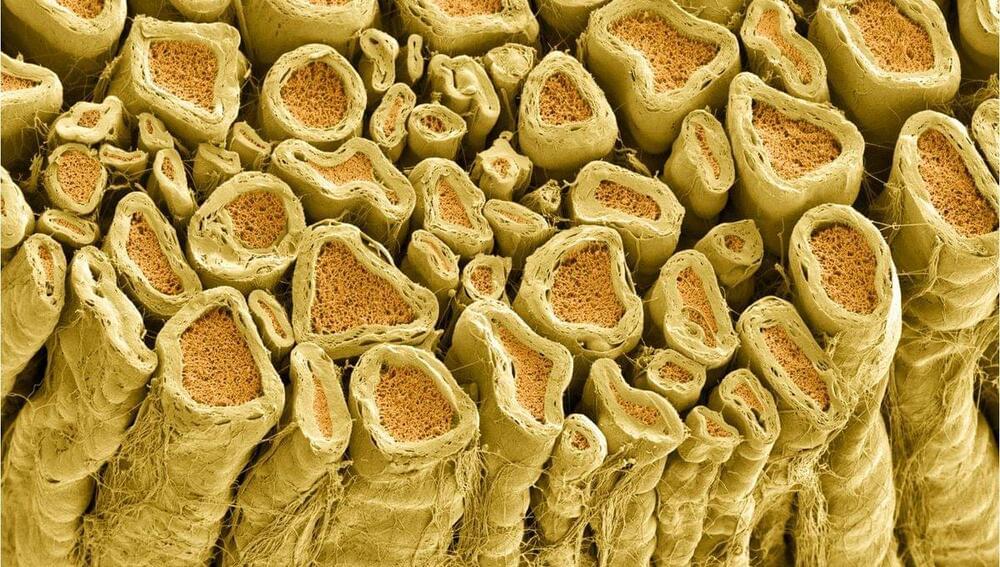

A biological pathway through which myelin, the protective coating on nerve fibers, can be repaired and regenerated has been discovered in a new study. The ramifications of this finding could be far-reaching for those with neurological diseases affecting myelin, many of which are currently untreatable.

If the axons that shoot out from the cell bodies of neurons are like electrical wires, you can think of the myelin sheath as the insulating plastic outer coating. In the brain, these sheathed nerve fibers make up most of the tissue known as white matter, but axons throughout the body are also coated in myelin.

The myelin sheath’s main functions are to protect the axon, to ensure electrical nerve impulses can travel quickly down it, and to maintain the strength of these impulses as they travel over what can be very long distances.

At a crucial time when the development and deployment of AI are rapidly evolving, experts are looking at ways we can use quantum computing to protect AI from its vulnerabilities.

Machine learning is a field of artificial intelligence in which computer models become proficient at various tasks by consuming large amounts of data, rather than having a human explicitly program that expertise. These algorithms are not given explicit rules; instead, they learn from examples, similar to how a child learns.
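
To make the contrast with explicit programming concrete, here is a minimal sketch using a classic perceptron. The algorithm, the function names `train_perceptron` and `predict`, and the data are all hypothetical choices for illustration; the article does not name any particular method. The rule the model ends up following ("label is 1 when x + y > 1") is never written into the learner; it only sees labeled examples and nudges its weights whenever it gets one wrong.

```python
import random


def train_perceptron(examples, max_epochs=5000, lr=0.1, seed=0):
    """Learn weights w and bias b for a linear classifier from (point, label) pairs.

    The target rule is never written here: the weights change only when
    the current model gets a training example wrong.
    """
    rng = random.Random(seed)
    w = [0.0, 0.0]
    b = 0.0
    data = list(examples)  # local copy so shuffling does not reorder the caller's list
    for _ in range(max_epochs):
        rng.shuffle(data)
        mistakes = 0
        for (x1, x2), label in data:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = label - pred  # +1, 0 or -1
            if error != 0:
                mistakes += 1
                w[0] += lr * error * x1
                w[1] += lr * error * x2
                b += lr * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return w, b


def predict(model, point):
    w, b = model
    x1, x2 = point
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


# Hypothetical training data: label is 1 when x + y > 1, else 0.
# Points very close to the boundary are dropped so the two classes are
# cleanly separable, which guarantees the perceptron eventually stops updating.
rng = random.Random(42)
points = [(rng.uniform(0, 2), rng.uniform(0, 2)) for _ in range(200)]
points = [p for p in points if abs(p[0] + p[1] - 1) > 0.1]
examples = [(p, 1 if p[0] + p[1] > 1 else 0) for p in points]

model = train_perceptron(examples)
accuracy = sum(predict(model, p) == y for p, y in examples) / len(examples)
print(f"training accuracy: {accuracy:.2f}")
print(predict(model, (1.8, 1.5)), predict(model, (0.1, 0.2)))
```

The learner is handed only `examples`; the threshold rule appears solely in the code that generates the hypothetical data, which is the sense in which the model "learns from seeing examples" rather than being explicitly programmed.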

In a major milestone, Chandrayaan-3’s Pragyan rover has confirmed the presence of sulphur and other elements near the moon’s south pole. Molly Gambhir brings you a report.

About Channel:

WION (The World is One News) examines global issues with in-depth analysis. We provide much more than the news of the day. Our aim is to empower people to explore their world. With our Global headquarters in New Delhi, we bring you news on the hour, by the hour. We deliver information that is not biased. We are journalists who are neutral to the core and non-partisan when it comes to world politics. People are tired of biased reportage and we stand for a globalized united world. So for us, the World is truly One.

Check out our website: http://www.wionews.com.

Researchers at the Hong Kong University of Science and Technology (HKUST) have discovered how the environment surrounding stem cells directs them to differentiate into functional cells, a breakthrough critical for using stem cells to treat various human diseases in the future.

Stem cells play a crucial role in supporting normal development and maintaining tissue homeostasis in adults. Their unique ability to replicate and differentiate into specialized cells holds great promise in treating diseases like Parkinson’s disease, Alzheimer’s disease and type I diabetes by replacing damaged or diseased cells with healthy ones.

Despite their potential therapeutic benefits, one of the major challenges for cell therapies lies in efficiently differentiating stem cells into functional cells to replace damaged cells in degenerative tissue. This task is particularly difficult due to the limited understanding of the underlying molecular mechanism by which the tissues around stem cells, known as the stem cell niche, guide stem cell progeny to differentiate into proper functional cell types.

Just about everything will have AI in the future.

“The compact full-frame camera promises advanced autofocus features, performance, and high-quality 4K video. Following the lead of recent Sony cameras, the a7C II also includes a dedicated artificial intelligence (AI) processing engine to drive some of its more sophisticated photo and video features, including robust subject recognition, real-time tracking, and AI-based Auto Framing.”

The Sony a7C II is available body-only for $2,199.99 or in a kit with Sony’s 28-60mm zoom lens for $2,499.99. The body-only price is $300 less than that of the Sony a7 IV body. The a7C II comes in gray and black colorways and will begin shipping this fall.

Sony a7CR: a7R V Sensor in a Compact Body.

Alongside the Sony a7C II, Sony has announced its first a7CR camera. Like the a7R series, including its most recent iteration, the Sony a7R V, the Sony a7CR offers a high-resolution image sensor, albeit in a compact package.

Experts warn that AI-generated content may pose a threat to the AI technology that produced it.

In a recent paper on how generative AI tools like ChatGPT are trained, a team of AI researchers from schools like the University of Oxford and the University of Cambridge found that the large language models behind the technology may increasingly be trained on other AI-generated content as it spreads in droves across the internet, a phenomenon they dubbed “model collapse.” In turn, the researchers claim that generative AI tools may respond to user queries with lower-quality outputs as their models are trained more and more on “synthetic data” instead of the human-made content that makes their responses unique.

Other AI researchers have coined their own terms for the phenomenon. In a paper released in July, researchers from Stanford and Rice universities called it “Model Autophagy Disorder,” in which the “self-consuming” loop of AI training on content generated by other AI could leave generative AI tools “doomed” to see the “quality” and “diversity” of the images and text they generate falter. Jathan Sadowski, a senior fellow at the Emerging Technologies Research Lab in Australia who researches AI, called this phenomenon “Habsburg AI,” arguing that AI systems heavily trained on the outputs of other generative AI tools can produce “inbred mutant” responses that contain “exaggerated, grotesque features.”
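
The "self-consuming" loop described above can be illustrated with a toy statistical stand-in. This is a minimal sketch under loud assumptions: the "model" is just a one-dimensional Gaussian, the "human-made" data is a standard normal, each new generation is fit only to a small sample drawn from the previous generation, and the function name `self_consuming_loop` is hypothetical. None of these choices come from the Oxford/Cambridge or Stanford/Rice papers. Because every fit is made from a finite sample, estimation errors compound and the fitted spread tends to drift downward, a simple analogue of the lost "diversity" the researchers describe.

```python
import random
import statistics


def self_consuming_loop(generations=200, samples_per_gen=20, seed=0):
    """Toy self-consuming loop: each generation is fit only to data
    sampled from the previous generation's fitted model.

    Generation 0 stands in for human-made data: a normal distribution
    with mean 0 and standard deviation 1.
    Returns the fitted standard deviation at every generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        # Synthetic data produced by the current model...
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        # ...is the only training data for the next model.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


history = self_consuming_loop()
for gen in (0, 50, 100, 150, 200):
    print(f"generation {gen:3d}: fitted std = {history[gen]:.6f}")
```

Raising `samples_per_gen` slows the shrinkage but, in this toy setup, does not remove the downward drift, since each refit from a finite sample is on average a slight underestimate on a log scale.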