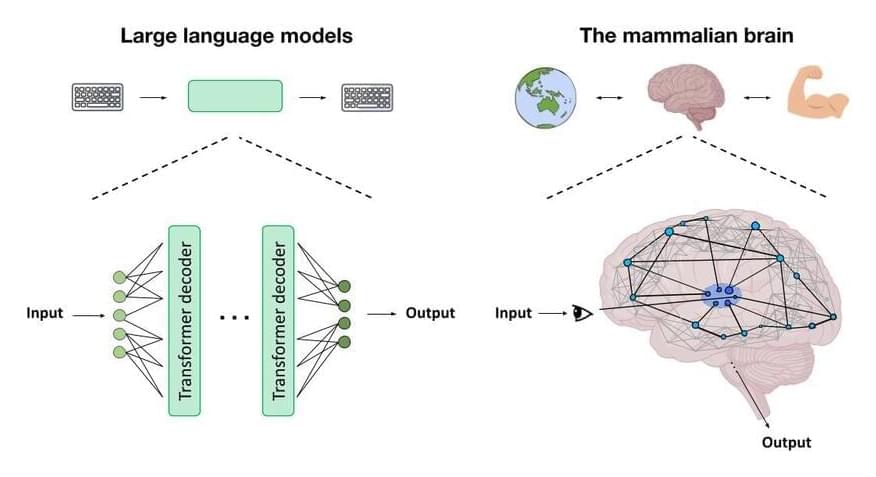

Machine learning (ML) is now mission critical in every industry. Business leaders are urging their technical teams to accelerate ML adoption across the enterprise to fuel innovation and long-term growth. But there is a disconnect between business leaders’ expectations for wide-scale ML deployment and the reality of what engineers and data scientists can actually build and deliver on time and at scale.

In a Forrester study launched today and commissioned by Capital One, the majority of business leaders expressed excitement about deploying ML across the enterprise, but data science team members said they didn't yet have all the tools needed to develop ML solutions at scale. Business leaders would love to treat ML as a plug-and-play opportunity: "just input data into a black box and valuable learnings emerge." The engineers who wrangle company data to build ML models know it's far more complex than that. Data may be unstructured or of poor quality, and there are compliance, regulatory, and security requirements to meet.