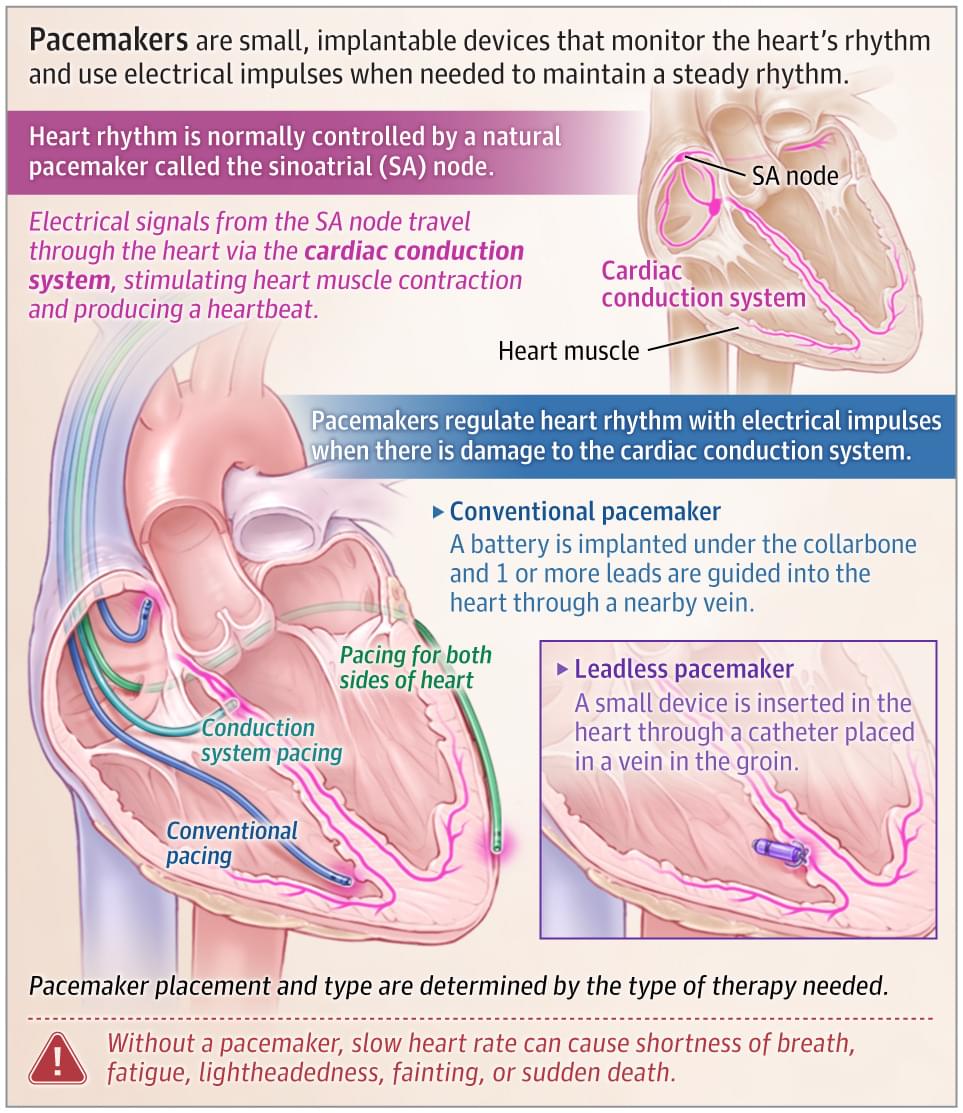

This JAMA Patient Page describes the body’s heart rhythm and the role of electronic pacemaker devices in maintaining heart rhythm for patients with certain conditions.

Wimbledon’s new automated line-calling system glitched during a tennis match Sunday, just days after it replaced the tournament’s human line judges for the first time.

The system, called Hawk-Eye, uses a network of cameras equipped with computer vision to track tennis balls in real time. If the ball lands out, a pre-recorded voice loudly says, “Out.” If the ball is in, there’s no call and play continues.

However, the software temporarily went dark during a women’s singles match between Brit Sonay Kartal and Russian Anastasia Pavlyuchenkova on Centre Court.

These black holes were whirling at speeds nearly brushing the limits of Einstein’s theory of general relativity, forcing researchers to stretch existing models to interpret the signal.

“Black holes this massive are forbidden by standard stellar evolution models,” says Professor Mark Hannam from Cardiff University. “One explanation is that they were born from past black hole mergers, a cosmic case of recursion.”

Why is Mars barren and uninhabitable, while life has always thrived here on our relatively similar planet Earth?

A discovery made by a NASA rover has offered a clue for this mystery, new research said Wednesday, suggesting that while rivers once sporadically flowed on Mars, it was doomed to mostly be a desert planet.

Mars is thought to currently have all the necessary ingredients for life except for perhaps the most important one: liquid water.

Further reading.

https://www.sciencedirect.com/science/article/abs/pii/S0956566325005779

https://journals.lww.com/medmat/fulltext/2025/06000/organoid…res.1.aspx.

https://link.springer.com/article/10.1007/s10015-024-00969-0

https://www.igi-global.com/chapter/synthetic-biology-and-ai/366024

This is an ~18 min talk, plus ~10 min of Q&A, on a top-down approach to bioengineering and robotics. I gave it at the Biohybrid Robotics Symposium in Switzerland in July 2025 (https://biohybrid-robotics.com/).

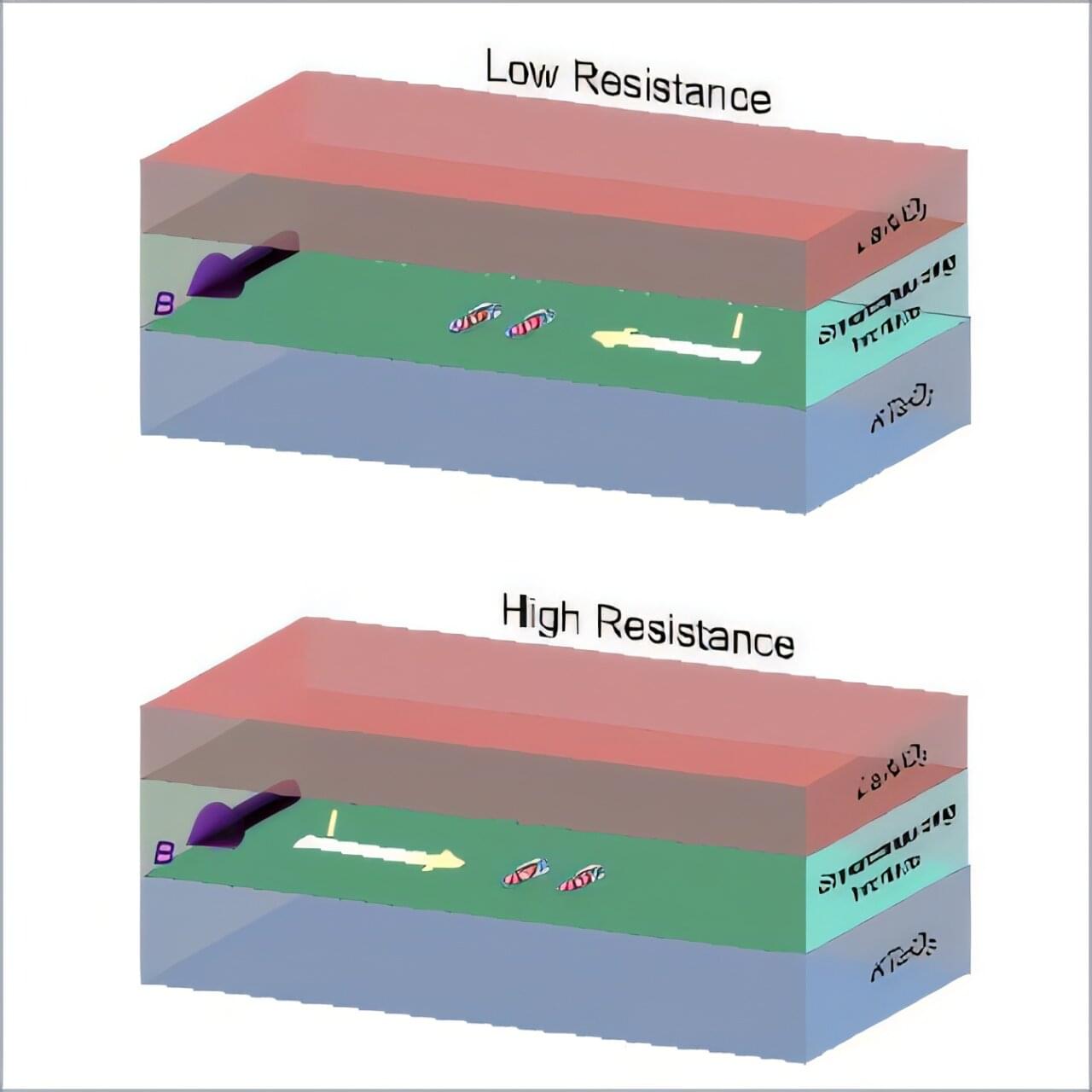

Superconductivity is a property of some materials that lets them conduct electricity without resistance below a material-specific critical temperature. One particularly fascinating phenomenon observed in some unconventional superconductors is so-called spin-triplet pairing.

In conventional superconductors, electrons form what are known as “Cooper pairs,” in which the two electrons have opposite momentum and opposite spin, an arrangement referred to as spin-singlet pairing. In some unconventional superconductors, by contrast, researchers have observed a different state known as spin-triplet pairing, in which the paired electrons have parallel spins.

Researchers at Fudan University, RIKEN Center for Emergent Matter Science (CEMS) and other institutes recently observed significantly enhanced nonreciprocal transport in a class of superconductors known as KTaO3-based interface superconductors. They propose that the effect could be explained by the coexistence of spin-singlet and spin-triplet states.