Behind every successful scientist, there is another scientist.

SpaceX is making progress at Starbase and is collaborating with the US Transportation Command and the Air Force, a partnership that could become a major source of revenue. The company also faces potential competition from Relativity Space and has run into design errors, though it still managed to save samples.

Questions to inspire discussion.

What progress has SpaceX made at Starbase?

—SpaceX has made significant progress at Starbase, including construction of the Starfactory, residential area, and Rocket Garden, but the fate of prototype ships remains uncertain.

Tesla has unveiled “Optimus Gen 2”, a new generation of its humanoid robot that should be able to take over repetitive tasks from humans.

Optimus, also known as Tesla Bot, has not been taken seriously by many outside of the more hardcore Tesla fans, and for good reason.

When it was first announced, it seemed to be a half-baked idea from CEO Elon Musk, with a dancer disguised as a robot as a visual aid. It also didn’t help that the demo at Tesla AI Day last year was less than impressive.

People may be more than twice as likely to develop schizophrenia-related disorders if they owned cats during childhood than if they didn’t:

Living with cats as a child has once again been linked to mental health disorders, because our furry friends apparently can’t catch a break.

In a new meta-analysis published in the journal Schizophrenia Bulletin, Australian researchers identified 17 studies, published between 1980 and 2023, that examined links between childhood cat ownership and schizophrenia-related disorders. That set was narrowed down from a whopping 1,915 studies dealing with cats over the same 43-year period.

As anyone who’s read anything about cats and mental health knows, there is a growing body of evidence suggesting that infection with the Toxoplasma gondii parasite, which is found in cat feces and undercooked red meat, may be linked to all sorts of surprising things. From mental illness to an interest in BDSM or a propensity for car crashes, toxoplasmosis (the infection that comes from T. gondii exposure) has been considered a major risk factor for decades now. Doctors also advise pregnant people not to clean cat litter or eat undercooked meat, because the infection can harm a developing fetus.

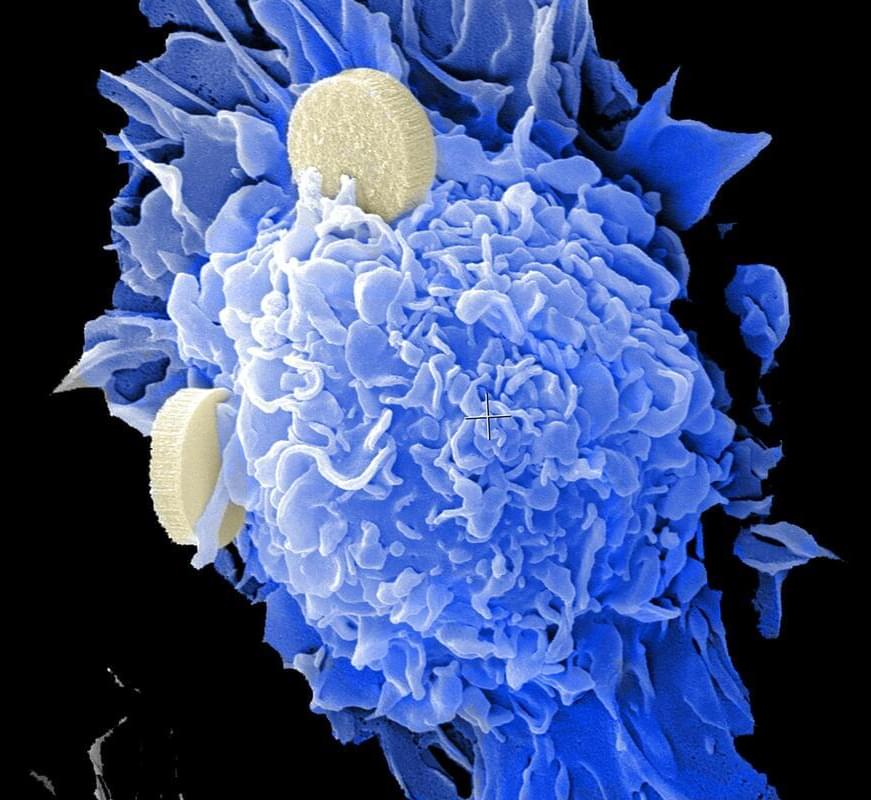

Researchers have come one step closer to understanding why, in some patients, a type of lymphoma changes from indolent to aggressive, and in particular to identifying which patients are at high risk of this transformation.

Part of the answer lies in the protein expression in the tumor, explains Associate Professor Maja Ludvigsen from the Department of Clinical Medicine at Aarhus University. She is one of the authors of a new study on the subject, which has just been published in the journal Blood Advances.

Follicular lymphoma is an incurable lymphoma. But unlike many other cancers, it is not always aggressive from the start. This means that patients with the disease have to live with the uncertainty of when—and how—the cancer will develop. It also means frequent visits to the hospital to monitor any acute developments.

Self-propelled nanoparticles could advance drug delivery and lab-on-a-chip systems, but they are prone to going rogue with random, directionless movements. Now, an international team of researchers has developed an approach to rein in the synthetic particles.

Led by Igor Aronson, the Dorothy Foehr Huck and J. Lloyd Huck Chair Professor of Biomedical Engineering, Chemistry and Mathematics at Penn State, the team redesigned the nanoparticles into a propeller shape to better control their movements and increase their functionality. They published their results in the journal Small (“Multifunctional Chiral Chemically-Powered Micropropellers for Cargo Transport and Manipulation”).

A propeller-shaped nanoparticle spins counterclockwise, driven by a chemical reaction with hydrogen peroxide, and then moves upward in response to a magnetic field. The optimized shape of these particles allows researchers to better control the nanoparticles’ movements and to pick up and move cargo particles. (Video: Active Biomaterials Lab)

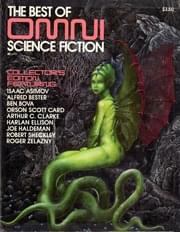

When I was growing up, this was the closest thing to a futurist magazine I could find.

Omni was a science and science fiction magazine published in the US and the UK. It contained articles on science, parapsychology, and short works of science fiction and fantasy. It was published in print from October 1978 to 1995. The first Omni e-magazine was published on CompuServe in 1986, and the magazine switched to a purely online presence in 1996. It ceased publication abruptly in late 1997, following the death of co-founder Kathy Keeton; activity on the magazine’s website ended the following April.