The Large Hadron Collider’s ATLAS Collaboration observes, for the first time, the coincident production of a photon and a top quark.

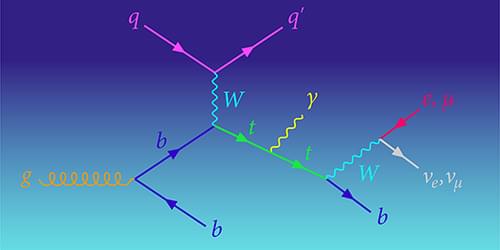

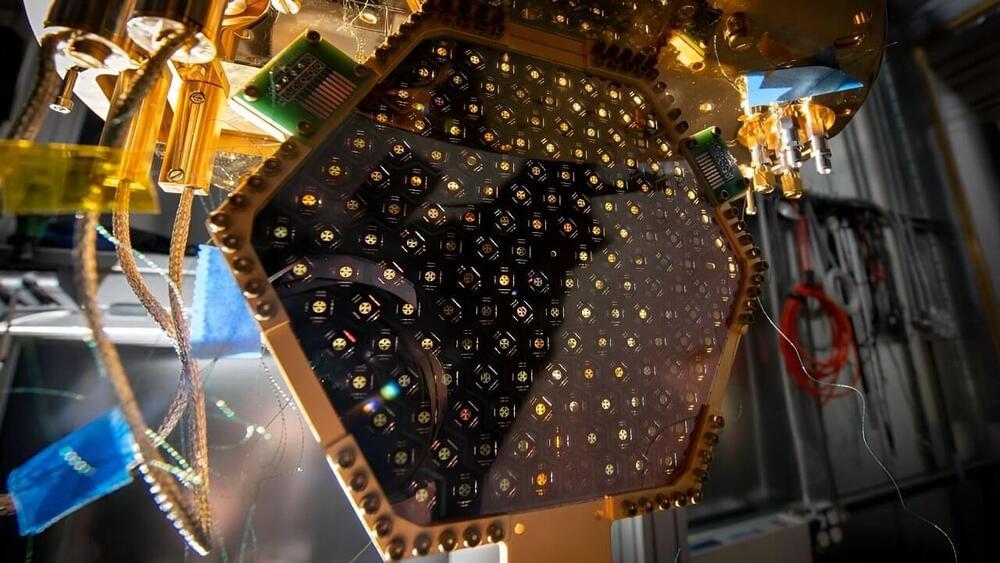

In the ever-evolving landscape of particle physics, a field that explores the nature of the Universe’s fundamental building blocks, nothing generates a buzz quite like a world first. Such a first is exactly what CERN’s ATLAS Collaboration has now achieved with its observation of the coincident production of single top quarks and photons in proton–proton collisions at the Large Hadron Collider (LHC) [1] (Fig. 1). This discovery provides a unique window into the intricate nature of the so-called electroweak interaction of the top quark, the heaviest known fundamental particle.

The standard model of particle physics defines the laws governing the behavior of elementary particles. Developed 50 years ago [2, 3], the model has, to date, withstood all experimental tests of its predictions. But the model isn’t perfect. One of its biggest problems is theoretical: it concerns how the Higgs boson gives mass to other fundamental particles. The mechanism by which the Higgs provides this mass is known as electroweak symmetry breaking, and while the standard model gives a reasonable description of the mechanism, exactly how electroweak symmetry breaking comes about remains a mystery.