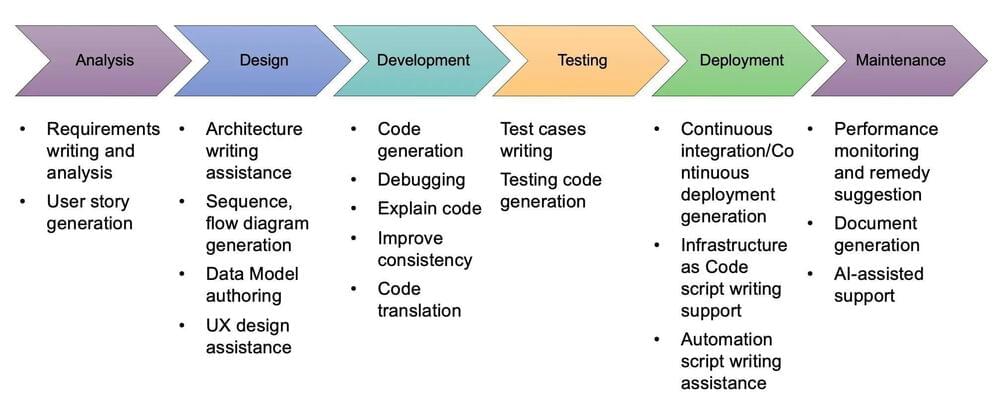

“Here is how Generative AI can help at each stage of the Software Development Life Cycle (SDLC). Overall, we want to treat Generative AI as a senior developer/architect that is far more accessible.

- Requirements gathering: ChatGPT can significantly simplify the requirements-gathering phase by quickly building prototypes of complex applications. It can also reduce the risk of miscommunication, since the analyst and the customer can align on the prototype before proceeding to the build phase.

- Design: DALL-E, another deep learning model developed by OpenAI, which generates digital images from natural-language descriptions, can contribute to application design. In addition to providing user interface (UI) templates for common use cases, it may eventually be used to help ensure that an application's design meets regulatory criteria such as accessibility.

- Build: ChatGPT can generate code in many programming languages. It could supplement developers by writing small components of code, which can improve both developer productivity and software quality. It can even enable citizen developers to write code without knowing a programming language.

- Test: ChatGPT can play a major role in the testing phase. It can generate a variety of test cases and test the application from prompts written in natural language. It can also help fix vulnerabilities identified through processes such as Dynamic Code Analysis (DCA), and help perform chaos testing, which simulates worst-case scenarios to test the integrity of the application in a faster, more cost-effective way.

- Maintenance: ChatGPT can significantly improve First Contact Resolution (FCR) by helping clients with basic queries. This reduces issue resolution times and frees service personnel to focus on more complex cases.”

https://medium.com/mlearning-ai/generative-ai-in-software-de…90e466eb91