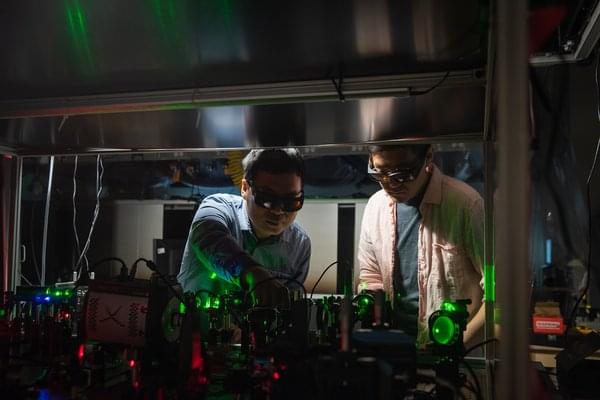

Lihong Wang’s lab has succeeded in using “biphotons” to image microscopic objects in an entirely new way.

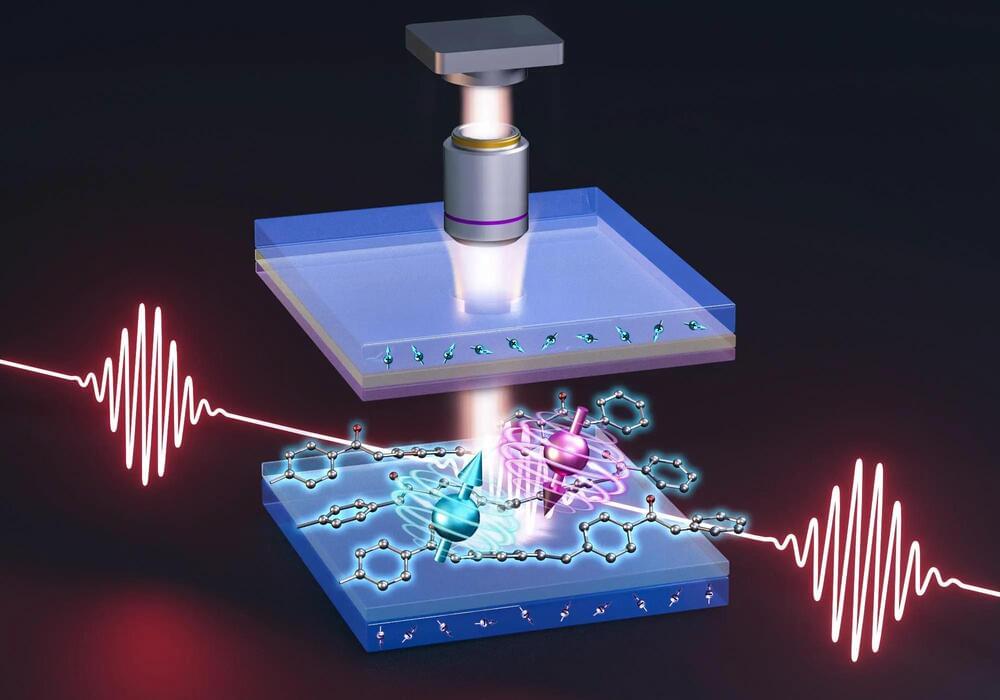

UNSW Sydney researchers have developed a chip-scale method that uses OLEDs to image magnetic fields, which could turn smartphones into portable quantum sensors. Because the technique doesn’t require laser input, it is more scalable than laser-based approaches, and the device can be made smaller and mass-produced. The technology could be used in remote medical diagnostics and to identify defects in materials.

Smartphones could one day become portable quantum sensors thanks to a new chip-scale approach that uses organic light-emitting diodes (OLEDs) to image magnetic fields.

Researchers from the ARC Centre of Excellence in Exciton Science at UNSW Sydney have demonstrated that OLEDs, a type of semiconductor device commonly found in flat-screen televisions, smartphone screens, and other digital displays, can be used to map magnetic fields using magnetic resonance.

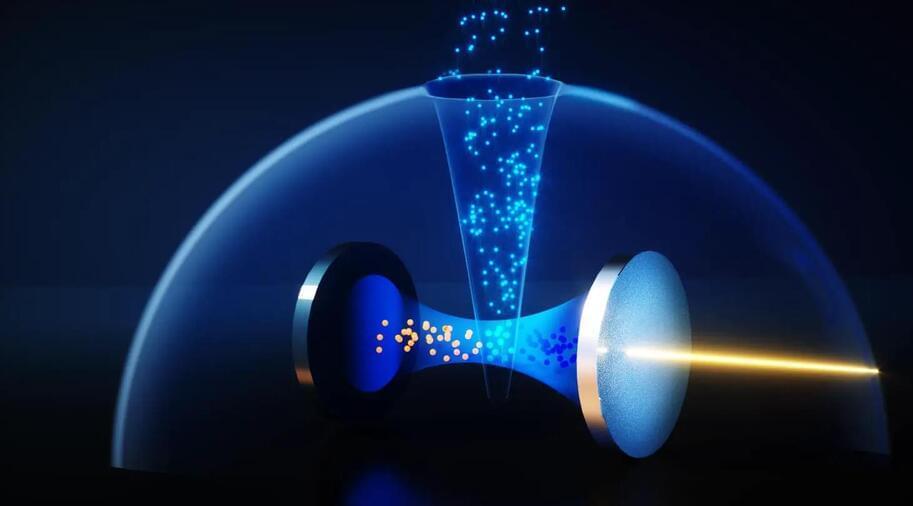

Researchers at Caltech have discovered a new phenomenon, “collectively induced transparency” (CIT), in which light passes unimpeded through groups of atoms at certain frequencies. The finding could improve quantum memory systems.

A newly discovered phenomenon dubbed “collectively induced transparency” (CIT) causes groups of atoms to abruptly stop reflecting light at specific frequencies.

CIT was discovered by confining ytterbium atoms inside an optical cavity (essentially, a tiny box for light) and blasting them with a laser. The laser light bounces off the atoms up to a point, but as its frequency is adjusted, a transparency window appears in which the light passes through the cavity unimpeded.

Geoffrey Hinton, who won the ‘Nobel Prize of computing’ for his trailblazing work on neural networks, is now free to speak about the risks of AI.

The man often dubbed the ‘Godfather of AI’ says part of him now regrets his life’s work.

One of the pioneers of the deep learning models that have become the basis for tools like ChatGPT and Bard has quit Google to warn against the dangers of scaling AI technology too fast.

In an interview with The New York Times on Monday, Geoffrey Hinton, a 2018 recipient of the Turing Award, said he had quit his job at Google so that he could speak freely about the risks of AI.

Major record labels are going after AI-generated songs, alleging copyright infringement. Legal experts say the approach is far from straightforward.

A certain type of music has been inescapable on TikTok in recent weeks: clips of famous musicians covering other artists’ songs, with combinations that read like someone hit the randomizer button. There’s Drake covering singer-songwriter Colbie Caillat, Michael Jackson covering The Weeknd, and Pop Smoke covering Ice Spice’s “In Ha Mood.” The artists don’t actually perform the songs — they’re all generated using artificial intelligence tools. And the resulting videos have racked up tens of millions of views.

Can human Drake stop his AI voice clone?

In a recent presentation, Tesla said that it was working to eliminate rare-earth magnets from its EVs over supply and toxicity concerns.

In a major move, Tesla is looking to rid its electric vehicles of rare earth minerals, potentially eliminating the biggest environmental concern about the growing number of EVs on the road.

The surprise announcement came during Tesla’s Master Plan 3 investor event, where the company outlined its business strategy for the next few years.

Xtekky, a European computer science student, finds out what happens when you write a program that runs queries through freely accessible AI chatbot sites and returns the answers.

In other news, a big company with a non-profit arm and a for-profit subsidiary sues an independent creator who is trying to make things easier for everyday folks, even though the creator’s project technically doesn’t violate the company’s terms and conditions? Perhaps we’ll leave that for the experts to decide.

OpenAI, the creator of ChatGPT (a scourge to teachers and a succor to everyone else), opted against free access when it released its newer GPT-4 model.

This is an important step toward developing brain–computer interfaces that can decode continuous language from non-invasive recordings of brain activity.

The results were published in the peer-reviewed journal Nature Neuroscience, in a study led by Jerry Tang, a doctoral student in computer science, and Alex Huth, an assistant professor of neuroscience and computer science at UT Austin.

Tang and Huth’s semantic decoder isn’t implanted in the brain; instead, it relies on fMRI scans to measure brain activity. In the study, participants listened to podcasts while the AI attempted to transcribe their thoughts into text.

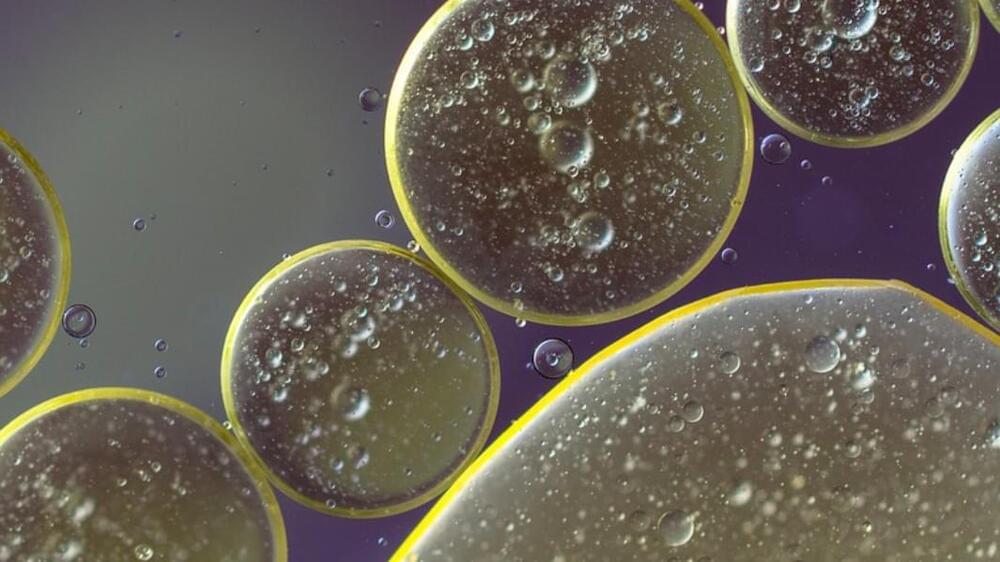

Scientists are still determining whether humans will reach a maximum possible age or whether we can extend lifespan indefinitely. One thing we know is that the aging we see and feel in our bodies is connected to the aging that individual cells experience. Yeast is a common model organism in molecular biology and is often used to study aging. In 2020, scientists found that yeast cells could follow one of two aging paths: in one, structures called nucleoli degraded and ribosomal DNA experienced less silencing; in the other, mitochondria were affected and heme accumulation was reduced. The researchers suggested that these were two distinct types of terminal aging.

In follow-up work reported in Science, the research team manipulated the genetics of those pathways and, by doing so, extended the lifespan of the cells. The investigators altered the cells’ gene circuits to stop them from deteriorating.

IBM chief executive Arvind Krishna said the company expects to pause hiring for roles it thinks could be replaced with artificial intelligence in the coming years.