Every cell in the human body contains the same genetic instructions, encoded in its DNA. However, out of about 30,000 genes, each cell expresses only those genes that it needs to become a nerve cell, immune cell, or any of the other hundreds of cell types in the body.

Each cell’s fate is largely determined by chemical modifications to the proteins that decorate its DNA; these modifications in turn control which genes get turned on or off. When cells copy their DNA to divide, however, they lose half of these modifications, which raises the question: How do cells maintain the memory of what kind of cell they are supposed to be?

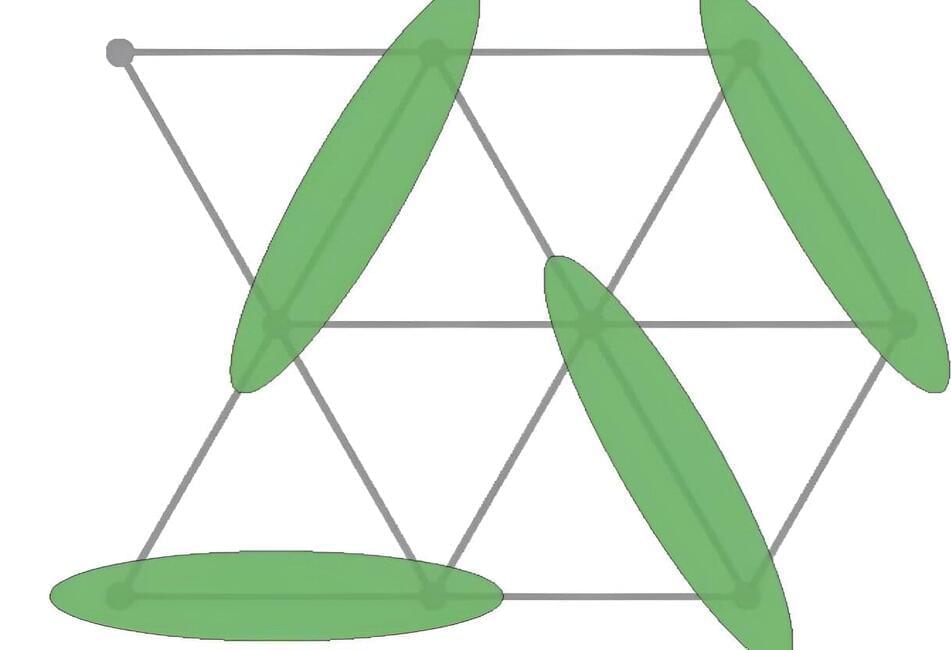

A new MIT study proposes a theoretical model that helps explain how these memories are passed from generation to generation when cells divide. The research team suggests that within each cell’s nucleus, the 3D folding pattern of its genome determines which parts of the genome will be marked by these chemical modifications.