A new study shows that cannabis can make exercise more enjoyable, but also more effortful, depending on the type and the person.

A technique that pairs inhaled nanoparticle sensors with a urine test may allow lung cancer to be detected faster and earlier.

Scientists from the Massachusetts Institute of Technology (MIT) have developed the technology, which offers a simpler approach to diagnosing lung cancer.

The innovation holds particular promise for low- and middle-income countries, where access to computed tomography (CT) scanners is limited.

If you plan on visiting the coast, you might notice flooded parking lots in the mornings or exposed reefs or rocks in the afternoons, depending on the area.

For those who are interested in learning more about king tides, the San Diego Audubon Society will be hosting an event at the Kendall-Frost Marsh on Friday from 8–10 a.m. Attendees will get an overview of sea level rise, the birds that rely on disappearing marsh habitat, and tools for documenting or reporting king tides.

Beyond January, king tides, a cosmic phenomenon, are expected again Feb. 9–10.

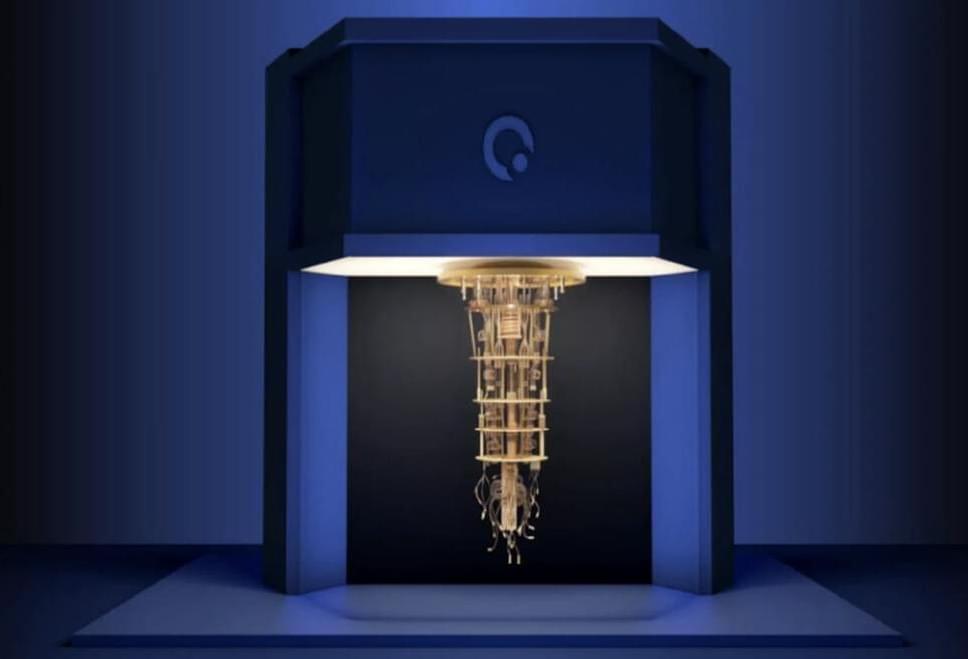

The third-generation superconducting quantum computer “Origin Wukong” was launched on January 6 at Origin Quantum Computing Technology in Hefei, according to the Chinese media outlet the Global Times, as reported by Pakistan Today.

According to these outlets, “Origin Wukong” is powered by a 72-qubit superconducting quantum chip known as the “Wukong chip.” A joint statement from the Anhui Quantum Computing Engineering Research Center and the Anhui Provincial Key Laboratory of Quantum Computing Chips, shared with the Global Times, describes it as the most advanced programmable and deliverable superconducting quantum computer in China, marking a new milestone in the country’s quantum computing efforts.

Superconducting quantum computers such as “Origin Wukong” rely on an approach also being pursued by several other quantum computer makers, including IBM and Google.

The survival strategies of one of the most aggressive, territorial and venomous ant species may help advance robotics, medicine and engineering.

Fire ants survive floods by temporarily interlinking their legs to form a raft-like structure, which lets the colony float to safety as a single unit; afterward, the ants let go and resume moving as individuals.

Drawing inspiration from this natural process, researchers at Texas A&M University developed a method that allows synthetic materials to mimic the ants’ autonomous assembly, reconfiguration and disassembly in response to environmental changes such as heat, light or solvents.

Emerging evidence implicates the gut microbiome in cognitive outcomes and neurodevelopmental disorders, but the influence of gut microbial metabolism on typical neurodevelopment has not been explored in detail. Researchers from Wellesley College, in collaboration with other institutions, have demonstrated that differences in the gut microbiome are associated with overall cognitive function and brain structure in healthy children.

The study, published Dec. 22 in Science Advances, is part of the Environmental influences on Child Health Outcomes (ECHO) Program. It examines this relationship in 381 healthy children from the RESONANCE cohort in Providence, Rhode Island, offering new insights into early childhood development.

The research reveals a connection between the gut microbiome and cognitive function in children. Specific gut microbial species, such as Alistipes obesi and Blautia wexlerae, are associated with higher cognitive function, while species such as Ruminococcus gnavus are more prevalent in children with lower cognitive scores. The study also highlights the role of microbial genes, particularly those involved in metabolizing neuroactive compounds such as short-chain fatty acids, in influencing cognitive abilities.

Over the past few decades, it has become increasingly clear that humans are not the only living organisms with intelligence.

The story of intelligence you are about to experience goes back 13.8 billion years, to the moment the universe was born: the Big Bang. It’s a story of time and space, matter and energy. It is a story of unfolding. It’s the story of how the very nature of the physical universe, from its inception, led the universe to come to know itself and, eventually, to reflect.

Complexity, Evolution, and Intelligence consists of five parts, each corresponding to a movement in Dan Forrest’s “Requiem for the Living.” The composition was performed on August 2, 2013, in Raleigh, NC, by Bel Canto, conducted by Dr. Bill Young.

A series of videos about time and entropy, made in collaboration with Caltech physicist Sean Carroll and based on his book “The Big Picture.”

Einstein dreamed of a unified theory of nature’s laws. String theory has long promised to deliver it: a mathematically elegant description that some have called a “theory of everything.” Join one of the most influential groups of theorists ever assembled on a single stage to evaluate the current state of this most ambitious of theories.

The Big Ideas Series is supported in part by the John Templeton Foundation.

Participants:

- David Gross
- Andrew Strominger
- Edward Witten

Moderator:

- Brian Greene


WSF Landing Page: https://www.worldsciencefestival.com/.…
