Three different challenges face anyone trying to build Beneficial General Intelligence. The most urgent are the challenges posed by pre-BGI systems.

A new study published in Nature Cell Biology by Mark Alkema, PhD, professor of neurobiology, establishes an important molecular link between specific B12-producing bacteria in the gut of the roundworm C. elegans and the production of acetylcholine, a neurotransmitter important to memory and cognitive function.

There is growing recognition among scientists that diet and gut microbiota may play an important role in brain health. Changes in the composition of the microbiome have been linked to neurological disorders such as anxiety, depression, migraines and neurodegeneration. Yet, teasing out the cause and effect of individual bacteria or nutrients on brain function has been challenging.

“There are more bacteria in your intestine than you have cells in your body,” said Woo Kyu Kang, PhD, a postdoctoral fellow in the Alkema lab and first author of the current study. “The complexity of the brain, the hundreds of bacterial species that comprise the gut microbiome and the diversity of metabolites make it almost impossible to discern how bacteria impact brain function.”

“Epigenetic changes determine whether genes are turned on or off, and can potentially reverse disease, broadening the therapeutic landscape to find potential cures previously thought impossible.”

Company co-founded by Alex Aravanis and Feng Zhang targets epigenetic code to reprogram cells to a healthy state.

California-based startup Opener has been developing eVTOLs since 2011, when founder Marcus Leng flew a proof-of-concept aircraft that he had constructed himself (using wooden chopsticks as part of the build).

Startup Pivotal has unveiled the Helix eVTOL, a one-seater aircraft it plans to begin selling for $190,000 in 2024.

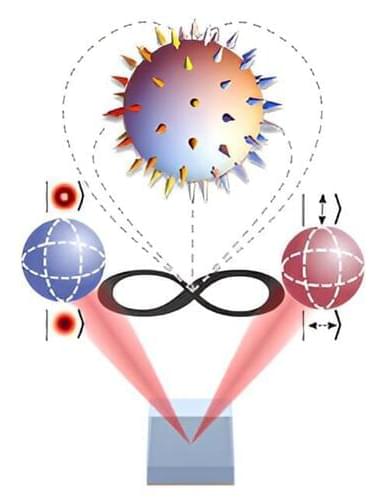

For the first time, researchers have demonstrated the remarkable ability to perturb pairs of spatially separated yet interconnected quantum entangled particles without altering their shared properties.

The team includes researchers from the Structured Light Laboratory (School of Physics) at the University of the Witwatersrand in South Africa, led by Professor Andrew Forbes, in collaboration with string theorist Robert de Mello Koch from Huzhou University in China (previously from Wits University).

“We achieved this experimental milestone by entangling two identical photons and customizing their shared wave-function in such a way that their topology or structure becomes apparent only when the photons are treated as a unified entity,” explains lead author Pedro Ornelas, an MSc student in the Structured Light Laboratory.

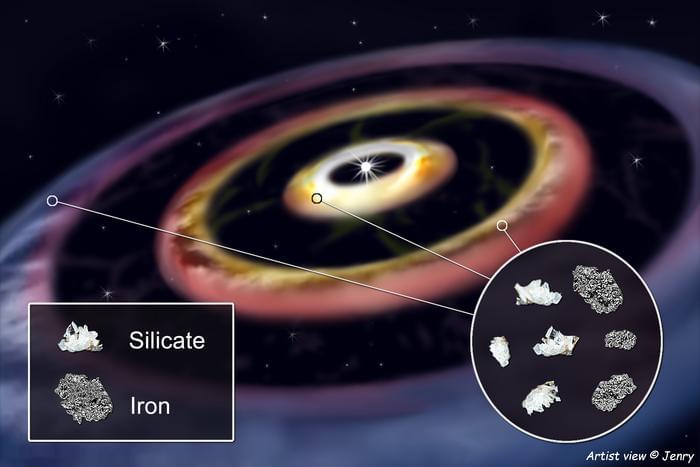

“We think that the HD 144432 disk may be very similar to the early Solar System that provided lots of iron to the rocky planets we know today,” said Dr. Roy van Boekel.

How did our solar system form, and is the process similar in other planetary systems throughout the universe? A study published today in Astronomy & Astrophysics takes up this question: an international team of researchers used data from the European Southern Observatory’s (ESO) Very Large Telescope Interferometer (VLTI) to analyze the protoplanetary disk around HD 144432, a young star located approximately 500 light-years from Earth. The study could help researchers better understand the formation and evolution of solar systems and gain greater insight into how life could evolve in them.

“When studying the dust distribution in the disk’s innermost region, we detected for the first time a complex structure in which dust piles up in three concentric rings in such an environment,” said Dr. Roy van Boekel, who is a scientist at the Max Planck Institute for Astronomy (MPIA) and one of more than three dozen co-authors on the study. “That region corresponds to the zone where the rocky planets formed in the Solar System.”

For context, if the three rings were placed in our own Solar System, the innermost ring would orbit at about the distance of Mercury, the middle ring at about the distance of Mars, and the outermost ring at about the distance of Jupiter.