DeepSeek-Coder: When the Large Language Model Meets Programming -- The Rise of Code Intelligence


Tisch Cancer Institute researchers have discovered that a certain type of chemotherapy improves the immune system’s ability to fight off bladder cancer, particularly when combined with immunotherapy.

These findings, published in Cell Reports Medicine, may explain why cisplatin chemotherapy can lead to a cure in a small subset of patients with metastatic (advanced) bladder cancer. The researchers also believe their findings could explain why clinical trials combining a different chemotherapy, carboplatin, with immunotherapy have not succeeded, while trials combining cisplatin with immunotherapy have.

“We have known for decades that cisplatin works better than carboplatin in bladder cancer; however, the mechanisms underlying those clinical observations have remained elusive until now,” said the study’s lead author, Matthew Galsky, M.D., Co-Director of the Center of Excellence for Bladder Cancer at The Tisch Cancer Institute at Mount Sinai.

Compared with robots, human bodies are flexible, capable of fine movements, and efficient at converting energy into movement. Drawing inspiration from human gait, researchers from Japan built a two-legged biohybrid robot by combining muscle tissue with artificial materials. The method, published on January 26 in the journal Matter, allows the robot to walk and pivot.

“Research on biohybrid robots, which are a fusion of biology and mechanics, is recently attracting attention as a new field of robotics featuring biological function,” says corresponding author Shoji Takeuchi of the University of Tokyo, Japan. “Using muscle as actuators allows us to build a compact robot and achieve efficient, silent movements with a soft touch.”

The research team’s two-legged robot builds on earlier biohybrid robots that are driven by muscle. Muscle tissue has already enabled biohybrid robots to crawl, swim forward, and make turns, but not sharp ones. Yet the ability to pivot and make sharp turns is essential for a robot to avoid obstacles.

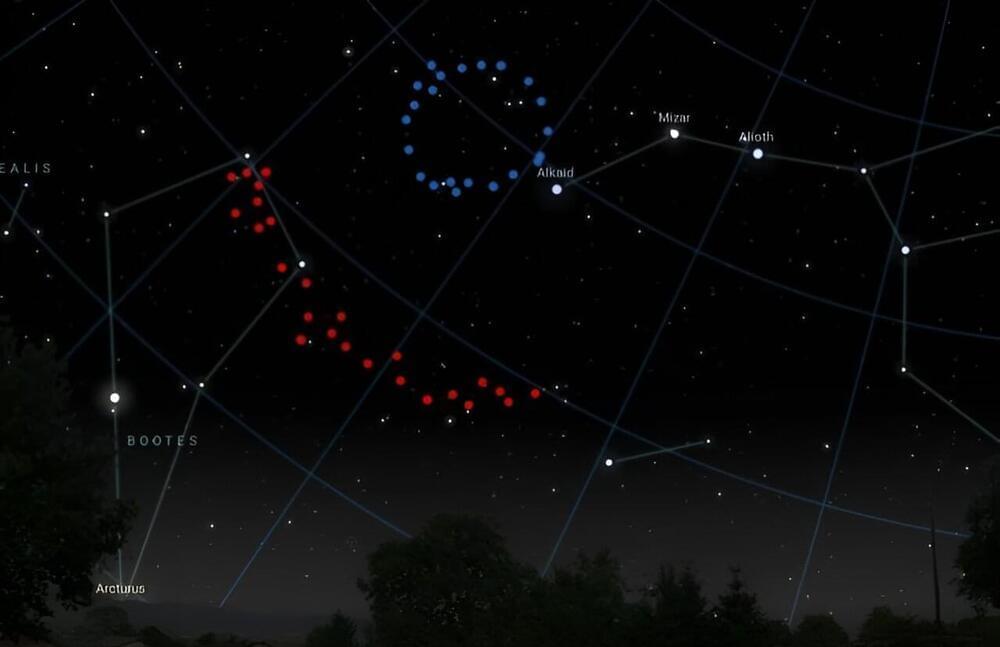

The Big Ring in the Sky is 9.2 billion light-years from Earth. It has a diameter of about 1.3 billion light-years and a circumference of about 4 billion light-years. If we could step outside and see it directly, about 15 full moons placed side by side would be needed to span its diameter.
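As a quick consistency check on these figures, idealizing the Big Ring as a perfect circle (a simplifying assumption, since the real structure is only approximately circular), the quoted circumference follows from the diameter via C = πd. A minimal sketch:

```python
import math

# Diameter quoted in the text, in billions of light-years.
DIAMETER_GLY = 1.3


def circumference(diameter: float) -> float:
    """Circumference of an ideal circle with the given diameter: C = pi * d."""
    return math.pi * diameter


c = circumference(DIAMETER_GLY)
print(f"C = pi * {DIAMETER_GLY} = {c:.2f} billion light-years")
```

The result, about 4.08 billion light-years, matches the roughly 4 billion light-years quoted above.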

It is the second ultra-large structure discovered by University of Central Lancashire (UCLan) Ph.D. student Alexia Lopez, who two years ago also discovered the Giant Arc in the Sky. Remarkably, the Big Ring and the Giant Arc, which is 3.3 billion light-years across, are in the same cosmological neighborhood: they are seen at the same distance and the same cosmic time, and are only 12 degrees apart in the sky.

Alexia said, “Neither of these two ultra-large structures is easy to explain in our current understanding of the universe. And their ultra-large sizes, distinctive shapes, and cosmological proximity must surely be telling us something important, but what exactly?”

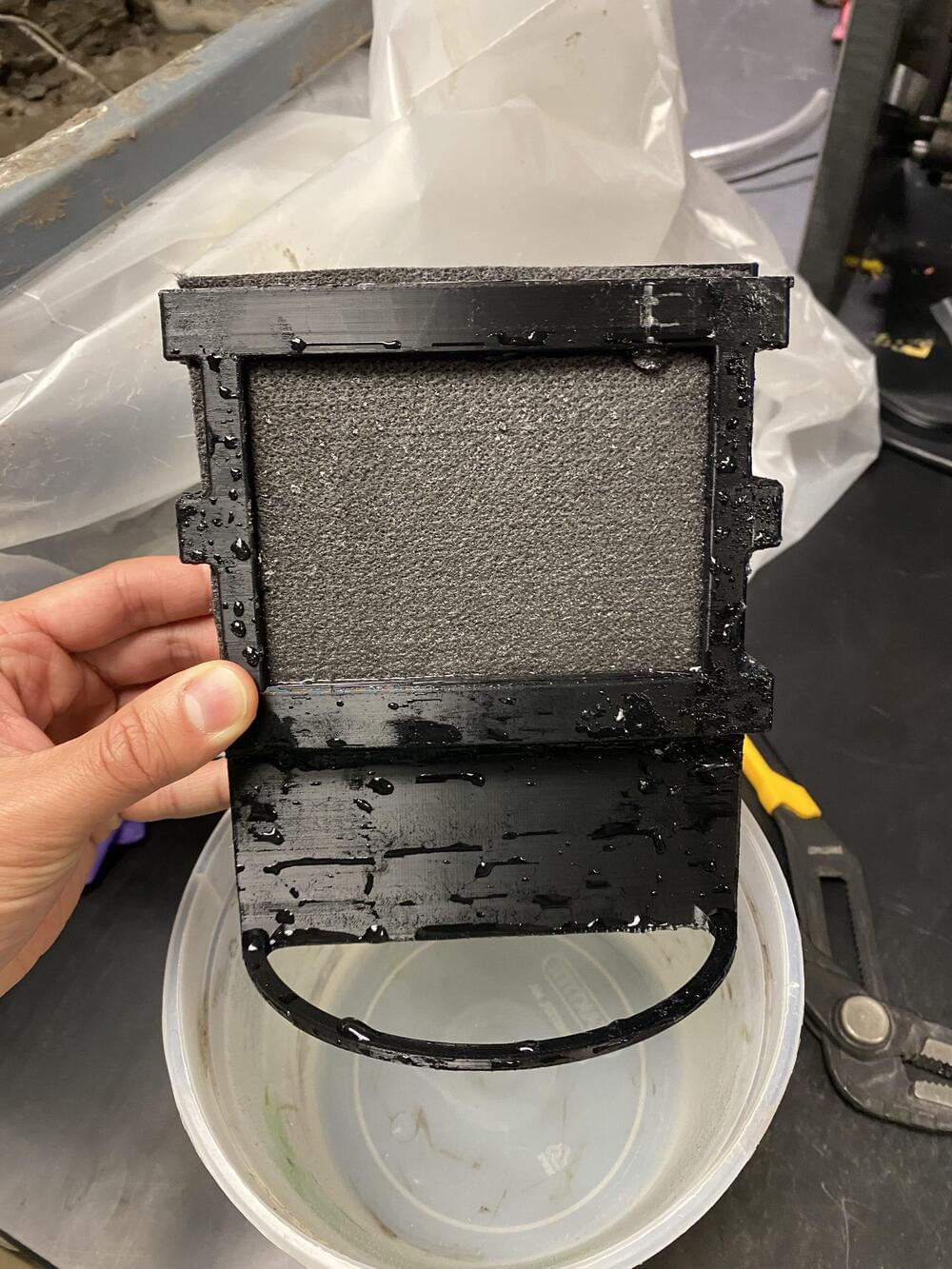

A Northwestern University-led team of researchers has developed a new fuel cell that harvests energy from microbes living in dirt.

About the size of a standard paperback book, the completely soil-powered technology could fuel underground sensors used in precision agriculture and green infrastructure. It could potentially offer a sustainable, renewable alternative to batteries, which hold toxic, flammable chemicals that leach into the ground, are fraught with conflict-filled supply chains, and contribute to the ever-growing problem of electronic waste.

To test the new fuel cell, the researchers used it to power sensors measuring soil moisture and detecting touch, a capability that could be valuable for tracking passing animals. To enable wireless communications, the researchers also equipped the soil-powered sensor with a tiny antenna to transmit data to a neighboring base station by reflecting existing radio frequency signals.
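Sending data by reflecting existing radio signals is generally known as backscatter communication. The toy model below illustrates the general idea with on-off keying: the sensor's antenna switches between a reflecting and a non-reflecting state, and the base station recovers bits from the resulting change in received amplitude. This is an assumption-laden sketch, not the Northwestern team's design; the carrier level, reflection gain, and function names are all hypothetical, and real links must also deal with phase, noise, and synchronization.

```python
# Toy on-off keying (OOK) backscatter model; illustrative only.
# Hypothetical assumptions: the base station sees a constant carrier amplitude
# of CARRIER, and the tag adds REFLECTION_GAIN while its antenna reflects.
CARRIER = 1.0
REFLECTION_GAIN = 0.2


def tag_modulate(bits: list[int]) -> list[float]:
    """Amplitude seen by the base station in each bit period."""
    return [CARRIER + (REFLECTION_GAIN if bit else 0.0) for bit in bits]


def reader_demodulate(samples: list[float]) -> list[int]:
    """Recover bits by thresholding halfway between the two amplitude levels."""
    threshold = CARRIER + REFLECTION_GAIN / 2
    return [1 if s > threshold else 0 for s in samples]


bits = [1, 0, 1, 1, 0, 0, 1]
received = tag_modulate(bits)
# A small deterministic perturbation stands in for channel noise.
noisy = [s + (0.03 if i % 2 else -0.03) for i, s in enumerate(received)]
print("decoded:", reader_demodulate(noisy))
```

Because the tag only switches its reflection state rather than generating its own carrier, this style of link suits very low-power sensors.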

A new statistical tool developed by researchers at the University of Chicago improves the ability to find genetic variants that cause disease. The tool, described in a paper published January 26, 2024, in Nature Genetics, combines data from genome-wide association studies (GWAS) with predictions of gene expression to limit the number of false positives and more accurately identify the causal genes and variants for a disease.
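The article does not describe the tool's internals. For background only, the sketch below shows the general idea behind transcriptome-wide association studies (TWAS), one common way of using predicted gene expression: a gene's expression is predicted as a weighted sum of genotype dosages, and that prediction is then tested for association with the trait. This is not the University of Chicago method; the weights, genotypes, and trait values are hypothetical, and real analyses use weights trained on reference expression data together with proper statistical tests.

```python
import math

# Hypothetical per-variant weights a reference expression model might supply
# for one gene (illustrative values only).
WEIGHTS = [0.5, -0.2, 0.1]


def predicted_expression(dosages: list[int]) -> float:
    """Predicted expression = sum of weight * dosage over the gene's variants.

    Each dosage is the number of alternate alleles (0, 1, or 2) a person carries.
    """
    return sum(w * d for w, d in zip(WEIGHTS, dosages))


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical genotypes (one row per person) and trait measurements.
genotypes = [[0, 1, 2], [1, 0, 0], [2, 1, 1], [0, 0, 1], [2, 2, 0]]
trait = [0.1, 0.6, 1.1, 0.2, 0.7]

expr = [predicted_expression(g) for g in genotypes]
r = pearson(expr, trait)
print("predicted expression:", [round(e, 2) for e in expr])
print("gene-level correlation with trait:", round(r, 3))
```

The correlation here is high only because the toy trait values were chosen to track the predicted expression.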

GWAS is a commonly used approach for identifying genes associated with a range of human traits, including most common diseases. For example, researchers compare the genome sequences of a large group of people with a specific disease against sequences from healthy individuals. Differences identified in the disease group could point to genetic variants that increase risk for that disease and warrant further study.
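One simple way to run the per-variant case-control comparison described above is an allelic chi-square test on a 2x2 table of allele counts. The sketch below assumes that test (many real GWAS use logistic regression with covariates instead) and applies the conventional genome-wide significance threshold of 5 × 10⁻⁸; the allele counts are hypothetical.

```python
import math

# Conventional genome-wide significance threshold used in GWAS.
GENOME_WIDE_THRESHOLD = 5e-8


def allelic_chi_square(case_alt: int, case_ref: int,
                       ctrl_alt: int, ctrl_ref: int) -> tuple[float, float]:
    """Pearson chi-square test (1 degree of freedom) on allele counts.

    Rows are cases and controls; columns are alternate and reference alleles.
    Returns (chi2, p_value).
    """
    table = [[case_alt, case_ref], [ctrl_alt, ctrl_ref]]
    total = sum(sum(row) for row in table)
    row_sums = [sum(row) for row in table]
    col_sums = [table[0][j] + table[1][j] for j in range(2)]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_sums[i] * col_sums[j] / total
            chi2 += (table[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom chi2 = z**2, so P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: 1,000 cases and 1,000 controls (2,000 alleles each).
strong = allelic_chi_square(700, 1300, 500, 1500)
weak = allelic_chi_square(520, 1480, 500, 1500)

for label, (chi2, p) in [("strong", strong), ("weak", weak)]:
    verdict = "genome-wide significant" if p < GENOME_WIDE_THRESHOLD else "not significant"
    print(f"{label}: chi2={chi2:.2f}, p={p:.2e} ({verdict})")
```

The strict threshold reflects the very large number of variants tested across the genome; a conventional 0.05 cutoff would let through many false positives.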

Most human diseases are not caused by a single genetic variant, however. Instead, they result from a complex interaction of multiple genes, environmental factors, and a host of other variables. As a result, GWAS often identifies many variants across many regions of the genome that are associated with a disease.

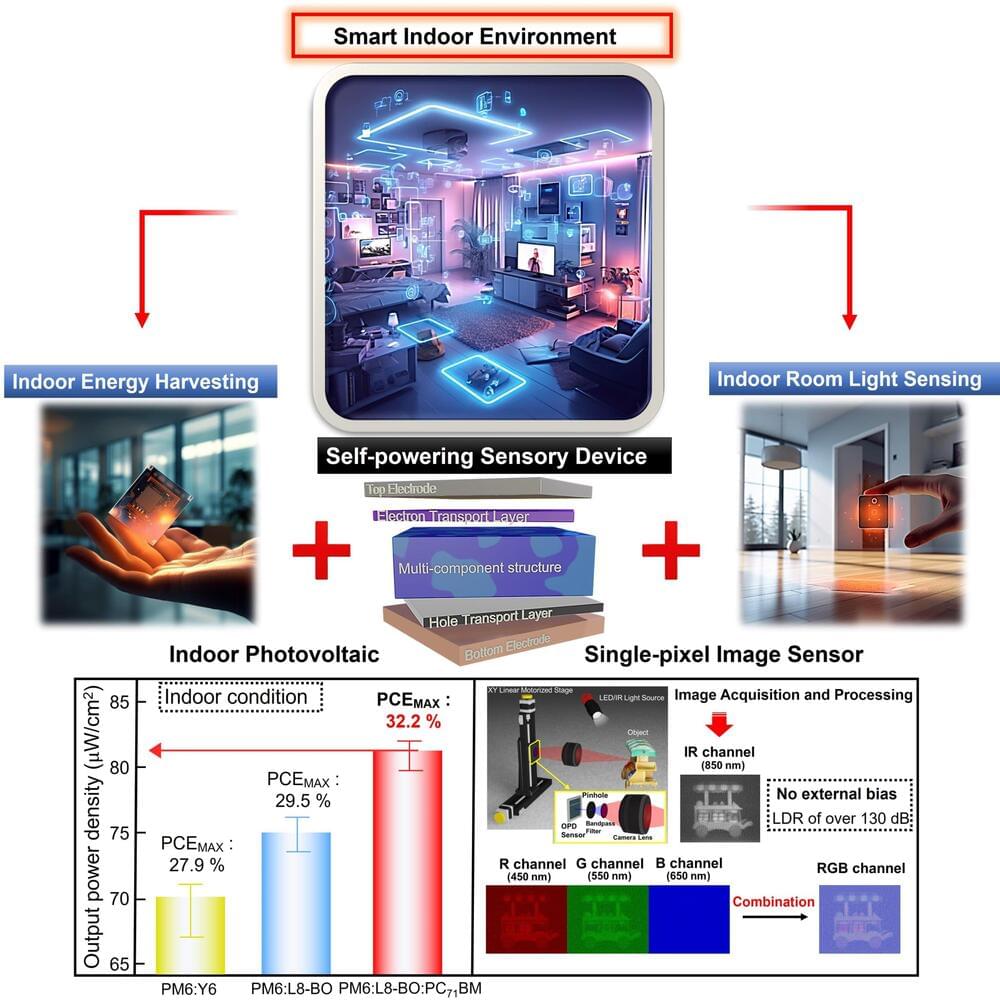

Organic-based optoelectronic technology is increasingly recognized as an energy-efficient solution for low-power indoor electronics and wireless IoT sensors. This is largely due to its superior flexibility and light weight compared to conventional silicon-based devices. Notably, organic photovoltaic cells (OPVs) and organic photodetectors (OPDs) are leading examples in this field.

OPVs can absorb light and generate electricity even under very low light conditions, while OPDs can capture images. Despite this potential, however, the two types of devices have so far been developed independently. As a result, they have not yet reached the level of efficiency needed to be practical for next-generation, miniaturized devices.

A Korea Institute of Science and Technology (KIST) research team led by Dr. Min-Chul Park and Dr. Do Kyung Hwang of the Center for Opto-Electronic Materials and Devices; Prof. Jae Won Shim and Prof. Tae Geun Kim of the School of Electrical Engineering at Korea University; and Prof. JaeHong Park of the Department of Chemistry and Nanoscience at Ewha Womans University has now developed an organic-based optoelectronic device.