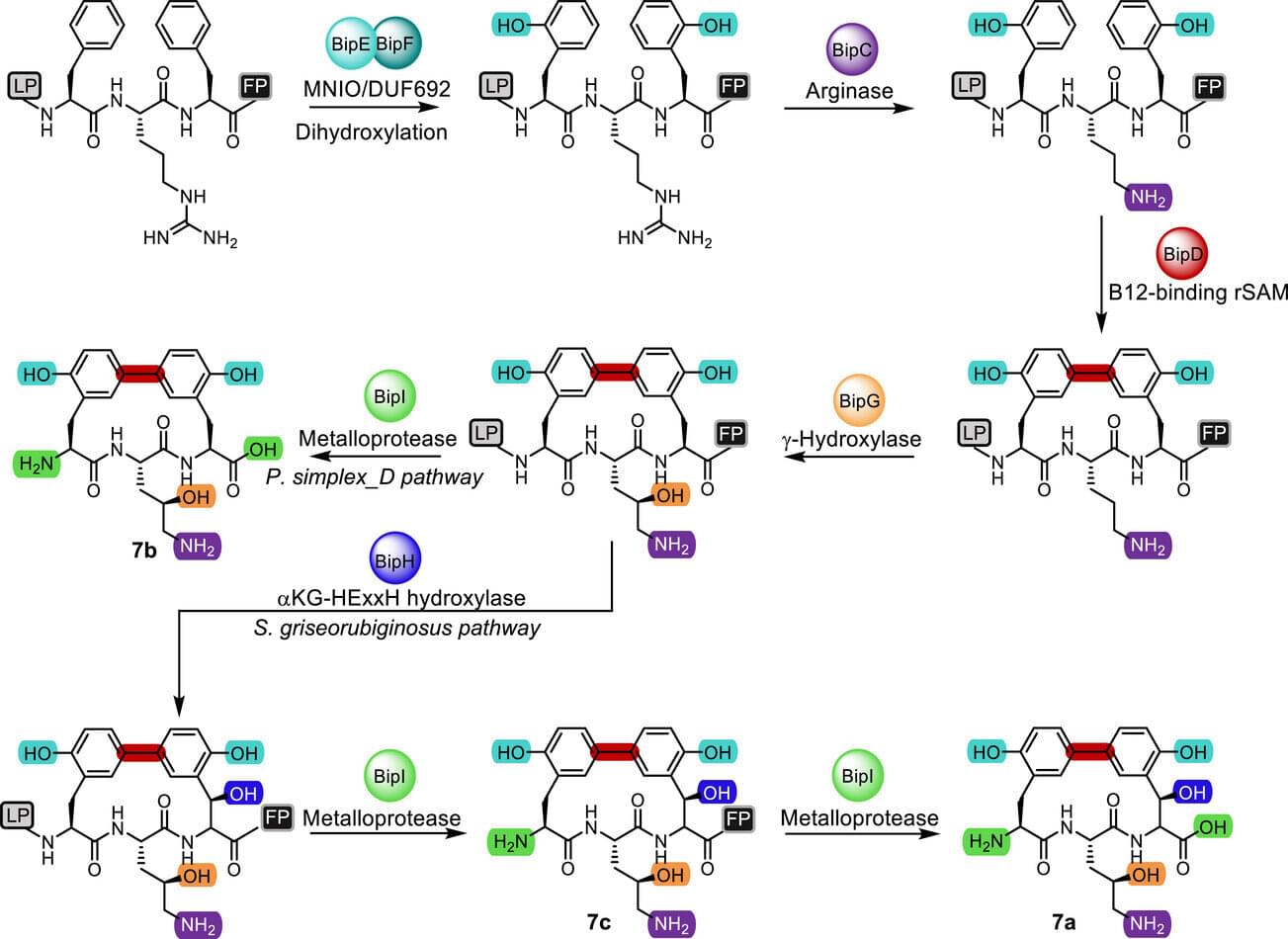

Biphenomycins, natural products derived from bacteria, show excellent antimicrobial activity but have long remained out of reach for drug development. The main obstacle was the limited understanding of how these compounds are produced by their microbial hosts.

A research team led by Tobias Gulder, department head at the Helmholtz Institute for Pharmaceutical Research Saarland (HIPS), has now deciphered the biosynthetic pathway of the biphenomycins, laying the foundation for their pharmaceutical development. The team published its findings in the journal Angewandte Chemie International Edition.