New holography-inspired reconfigurable surface developed for wireless communication

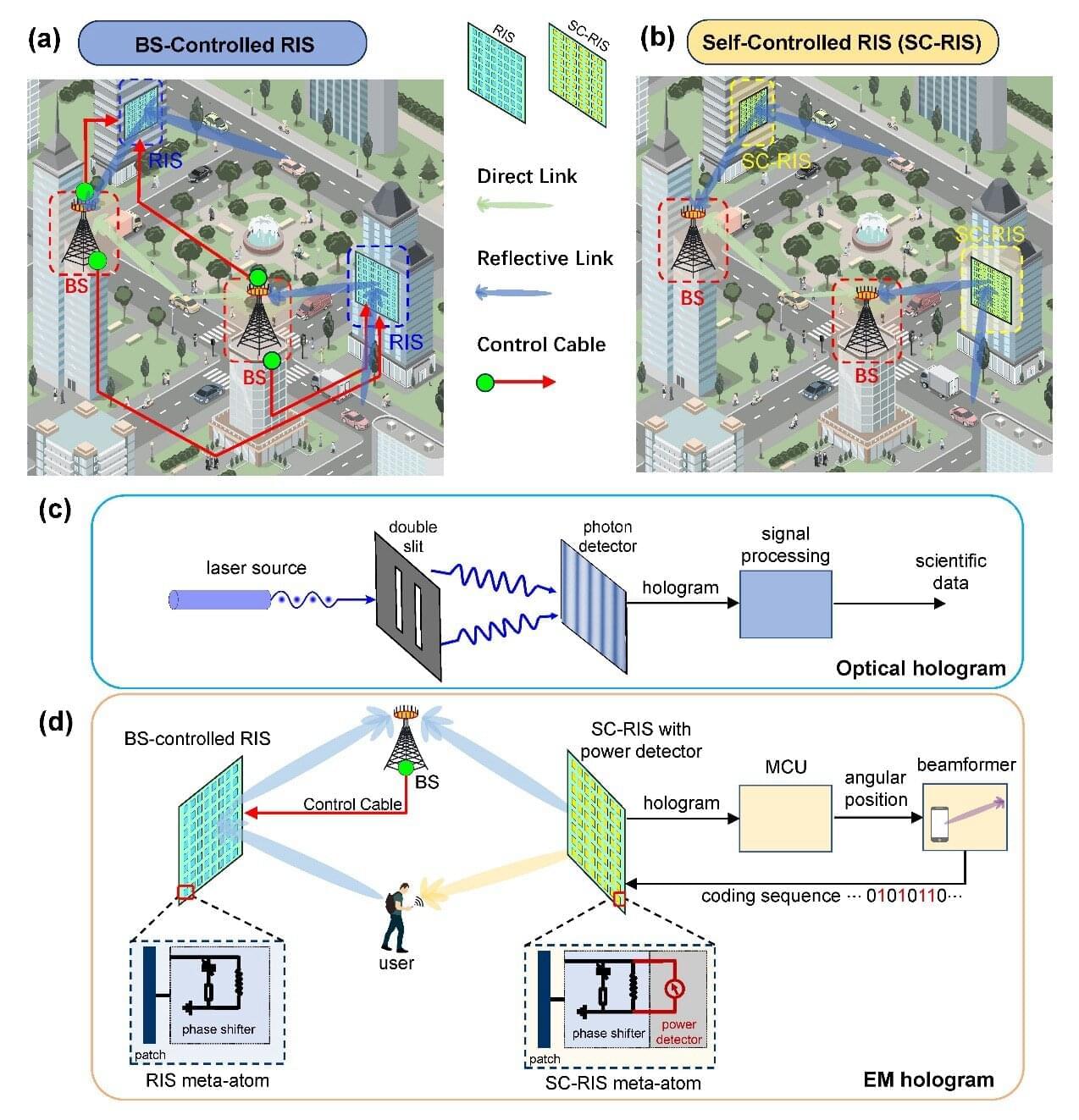

Reconfigurable intelligent surfaces (RIS) are engineered structures composed of several elements known as ‘meta-atoms,’ which can reshape and control electromagnetic waves in real time. These surfaces could contribute to the further advancement of wireless communications and localization systems, as they could be used to reliably redirect, strengthen and suppress signals.

In conventional applications of RIS for wireless communication, each meta-atom is controlled by a system known as the ‘base station,’ which is connected to the surface via electrical cables. While surfaces following this design can attain good results, their reliance on wires and a base station could prevent or limit their real-world deployment.

Researchers at Tsinghua University and Southeast University recently developed a new RIS that controls itself and does not need to be connected to a base station. This new surface, introduced in a paper published in Nature Electronics, draws inspiration from holography, a well-known method to record and reconstruct an object’s light pattern to produce a 3D image of it.

Long-term stability for perovskite solar cells achieved with fluorinated barrier compound

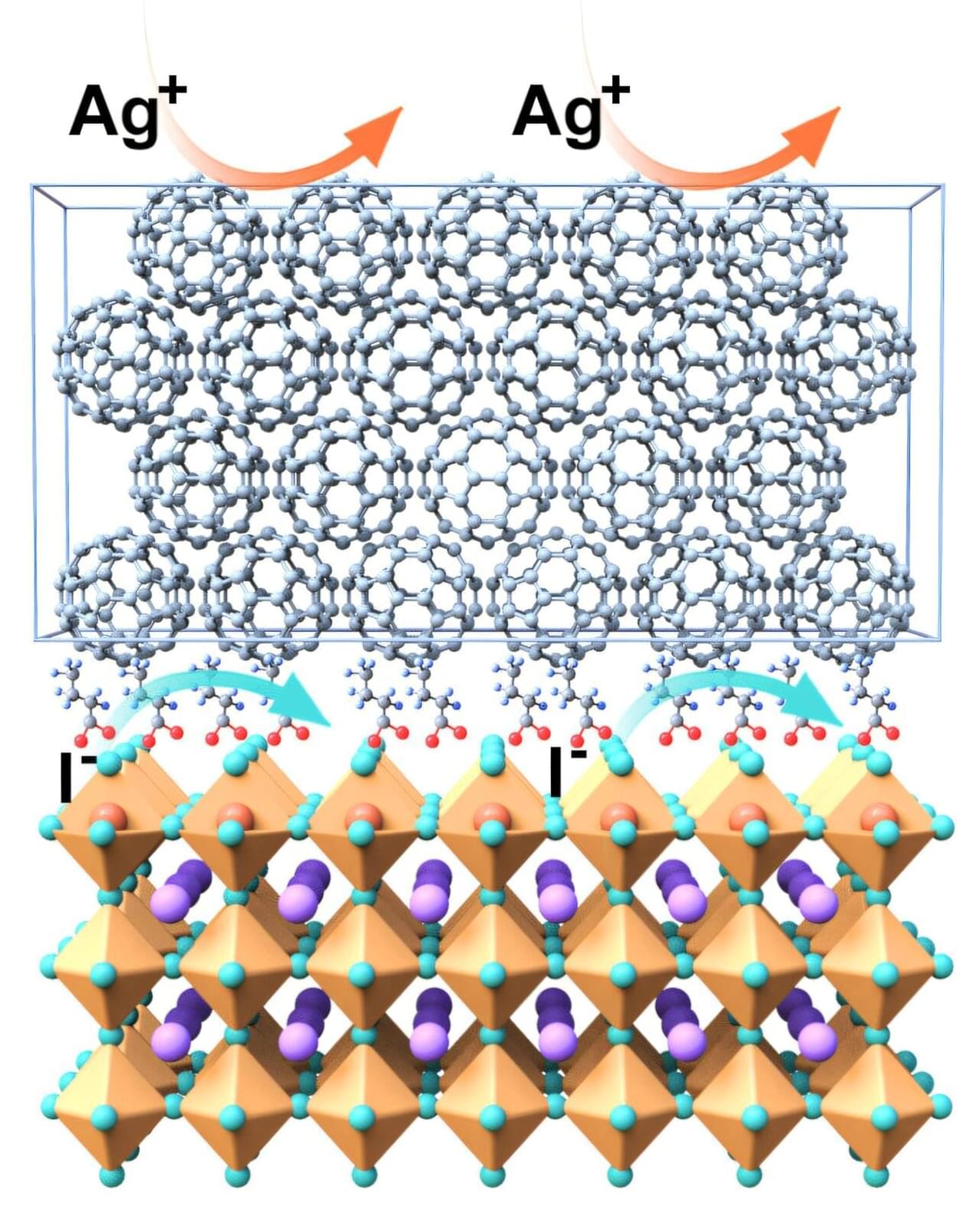

Perovskite solar cells are inexpensive to produce and generate a high amount of electric power per surface area. However, they are not yet stable enough, losing efficiency more rapidly than the silicon market standard. Now, an international team led by Prof. Dr. Antonio Abate has dramatically increased their stability by applying a novel coating to the interface between the surface of the perovskite and the top contact layer. This has even boosted efficiency to almost 27%, which represents the state of the art.

After 1,200 hours of continuous operation under standard illumination, no decrease in efficiency was observed. The study involved research teams from China, Italy, Switzerland and Germany and has been published in Nature Photonics.

“We used a fluorinated compound that can slide between the perovskite and the buckyball (C60) contact layer, forming an almost compact monomolecular film,” explains Abate. This Teflon-like molecular layer chemically isolates the perovskite layer from the contact layer, resulting in fewer defects and losses. Additionally, the intermediate layer increases the structural stability of both adjacent layers, particularly the C60 layer, making it more uniform and compact.

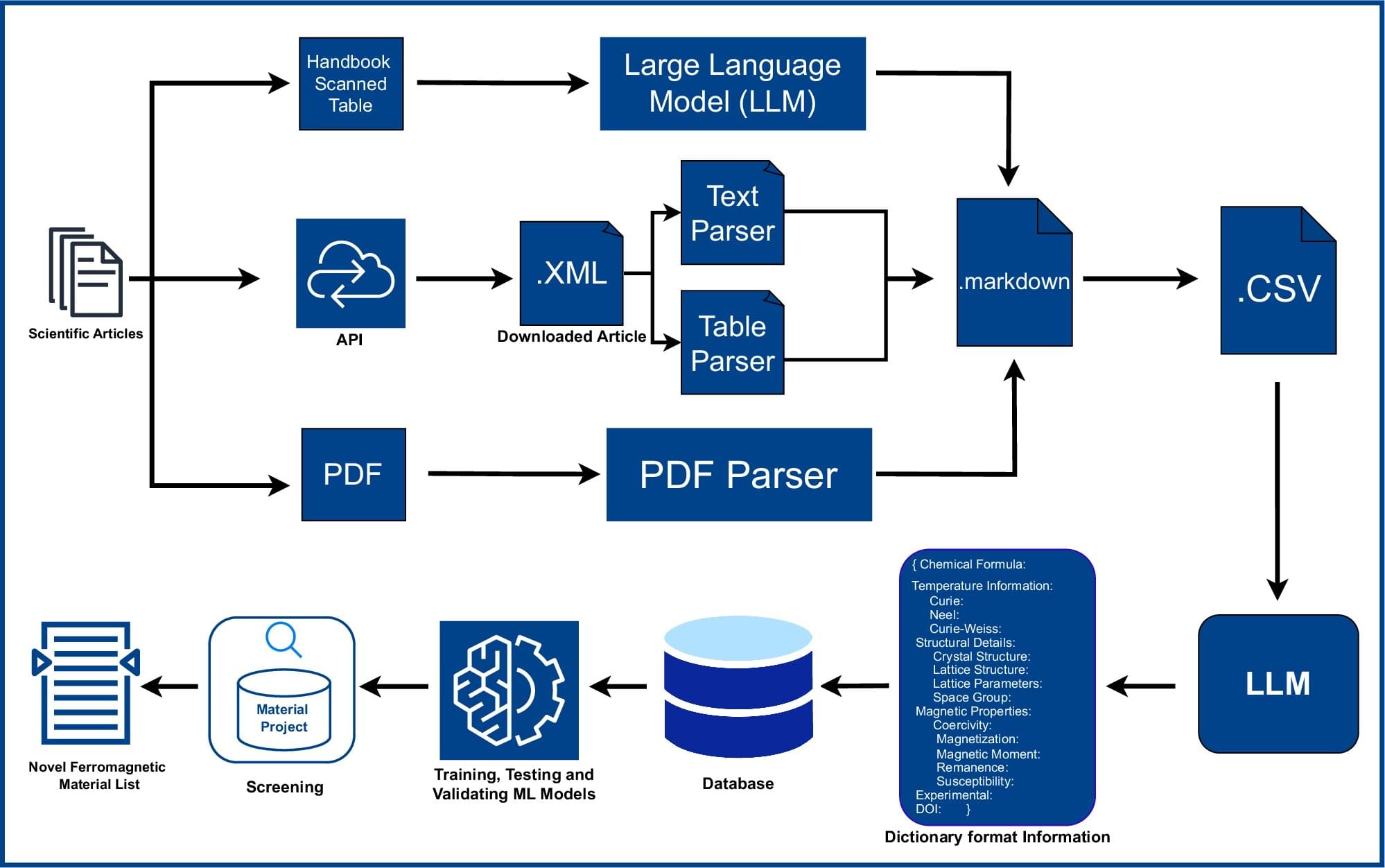

Magnetic materials discovered by AI could reduce rare earth dependence

Researchers at the University of New Hampshire have harnessed artificial intelligence to accelerate the discovery of new functional magnetic materials, creating a searchable database of 67,573 magnetic materials, including 25 previously unrecognized compounds that remain magnetic even at high temperatures.

“By accelerating the discovery of sustainable magnetic materials, we can reduce dependence on rare earth elements, lower the cost of electric vehicles and renewable-energy systems, and strengthen the U.S. manufacturing base,” said Suman Itani, lead author and a doctoral student in physics.

The newly created database, named the Northeast Materials Database, makes it easier to explore the magnetic materials that play a major role in the technology that powers our world: smartphones, medical devices, power generators, electric vehicles and more. But these magnets rely on rare earth elements that are expensive, imported, and increasingly difficult to obtain, and no new permanent magnet has been discovered from the many magnetic compounds we know exist.

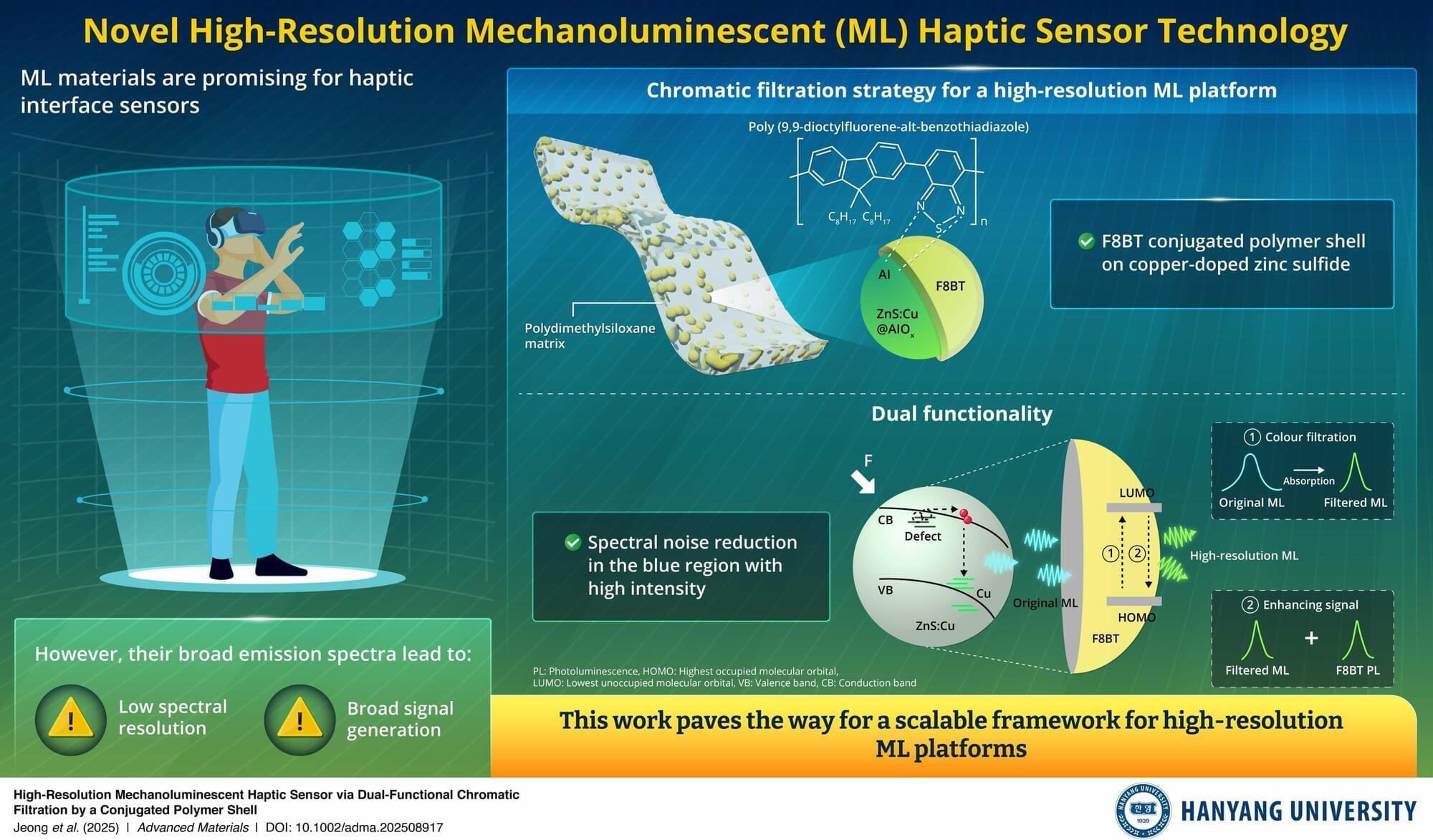

Mechanoluminescent sensors with dual-function polymer shell offer eco-friendly, high-resolution control

Mechanoluminescent (ML) materials are attractive for haptic interface sensors in next-generation technologies, including bite-controlled user interfaces, health care motion monitoring, and piconewton sensing, because they emit light under mechanical stimulation without an external power source. However, their intrinsically broad emission spectra can degrade resolution and introduce noise in sensing applications, necessitating further technological development.

Addressing this knowledge gap, a team of researchers from the Republic of Korea and the UK, led by Hyosung Choi, a Professor at the Department of Chemistry at Hanyang University, and including Nam Woo Kim, a master’s student at Hanyang University, recently employed a chromatic filtration strategy to pave the way to high-resolution ML haptic sensors. Their findings are published in the journal Advanced Materials.

In this study, the team coated the conjugated polymer poly(9,9-dioctylfluorene-alt-benzothiadiazole) (F8BT) onto ZnS:Cu to selectively suppress emission below 490 nm, narrowing the full width at half maximum from 94 nm to 55 nm.

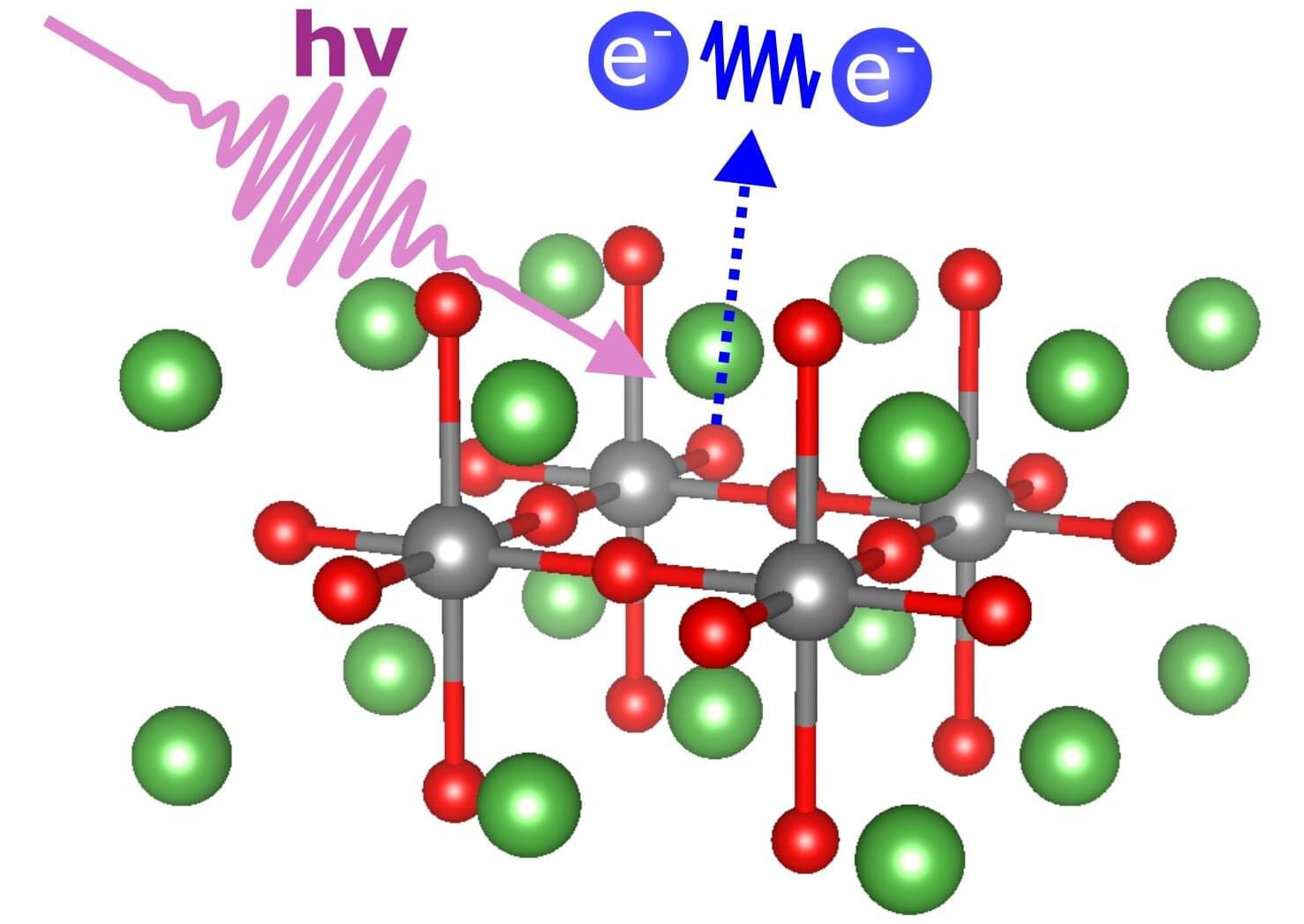

Charge carrier pairs in cuprate compounds shed light on high-temperature superconductivity

High-temperature superconductivity is still not fully understood. Now, an international research team at BESSY II has measured the energy of charge carrier pairs in undoped La₂CuO₄. Their findings revealed that the interaction energies within the potentially superconducting copper oxide layers are significantly lower than those in the insulating lanthanum oxide layers. These results contribute to a better understanding of high-temperature superconductivity and could also be relevant for research into other functional materials.

The research is published in the journal Nature Communications.

Around 40 years ago, a new class of materials suddenly became famous: high-temperature superconductors. These materials can conduct electricity completely loss-free, not only at temperatures close to absolute zero (0 Kelvin or minus 273 degrees Celsius), but also at much higher temperatures, albeit still well below room temperature.

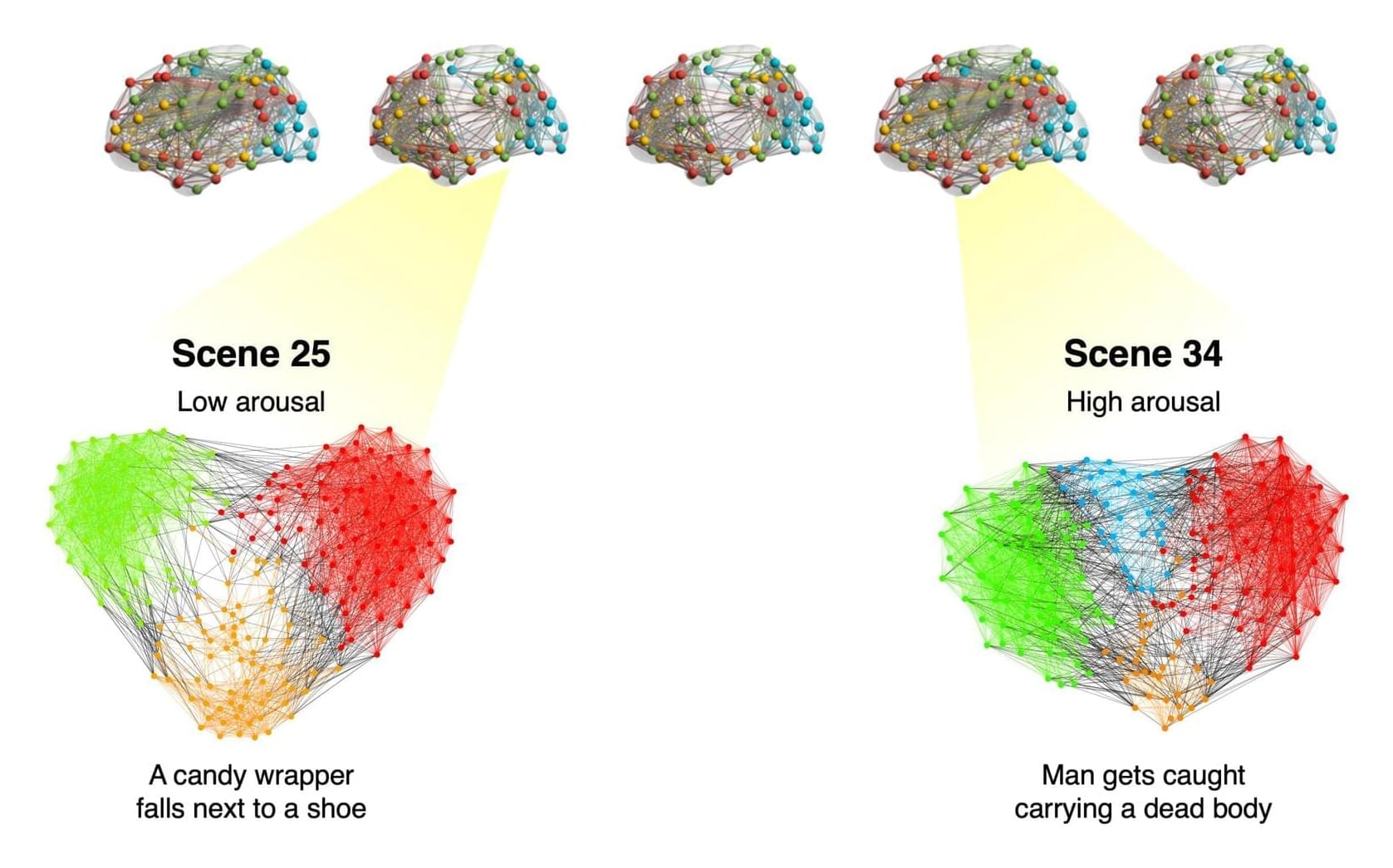

Coordinated brain network activity during emotional arousal may explain vivid, lasting memories

Past psychology studies suggest that people tend to remember emotional events, such as their wedding, the birth of a child or traumatic experiences, more vividly than neutral events, such as a routine professional meeting. While this link between emotion and the recollection of past events is well-established, the neural mechanisms via which emotional states strengthen memories remain poorly understood.

Researchers at the University of Chicago and other institutes carried out a study aimed at better understanding these mechanisms. Their findings, published in Nature Human Behaviour, suggest that emotional states facilitate the encoding of memories by increasing communication between networks of brain regions.

“Emotional experiences tend to be ‘sticky,’ meaning that they endure in our memories and shape how we interpret the past, engage with the present, and anticipate the future,” Yuan Chang Leong, senior author of the paper, told Medical Xpress.

Gut-to-brain signaling restricts post-illness protein appetite, researchers discover

When we get sick, with the flu, say, or pneumonia, there can be a period where the major symptoms of our illness have resolved but we still just don’t feel great.

“While this is common, there’s no real way to quantify what’s going on,” says Nikolai Jaschke, MD, Ph.D., who recently completed a postdoctoral fellowship at Yale School of Medicine (YSM) in the lab of Andrew Wang, MD, Ph.D., associate professor of internal medicine (rheumatology). “And unfortunately, we lack therapeutic tools to support people in this state.”

Jaschke noticed this while taking care of patients recovering from acute illnesses and, when he joined Wang’s lab, he began studying what was happening in the body during recovery. Through this work, Jaschke, Wang, and their colleagues uncovered a gut-to-brain signaling pathway in mice that restricts appetite—specifically for protein—during recovery. They published their findings on Nov. 4 in Cell.

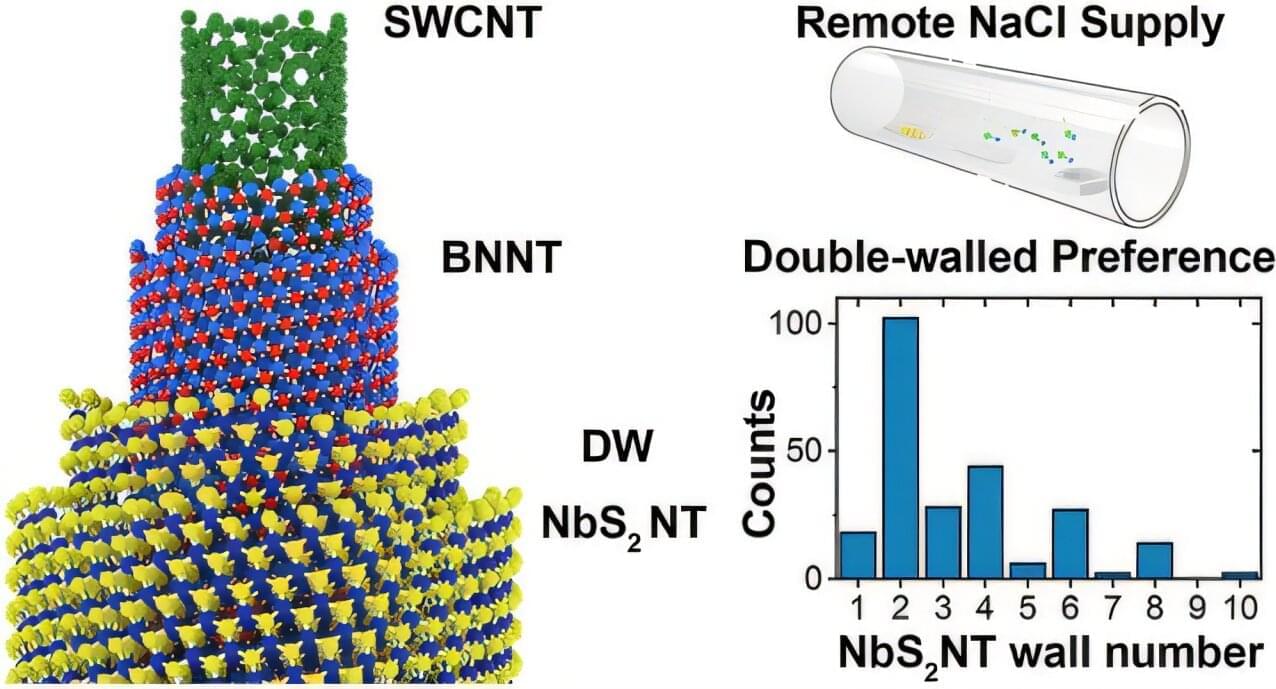

Table salt enables new metallic nanotubes with potential for faster electronics

For the first time, researchers have made niobium sulfide metallic nanotubes with stable, predictable properties, a long-sought goal in advanced materials science. According to the international team behind the work, which includes a researcher at Penn State, the new nanomaterial was made possible by a surprising ingredient: table salt. The material could open the door to faster electronics, efficient electricity transport via superconductor wires and even future quantum computers.

They published their research in ACS Nano.

Nanotubes are structures so small that thousands of them could fit across the width of a human hair. The tiny hollow cylinders are made by rolling up sheets of atoms; nanotubes have an unusual size and shape that can cause them to behave very differently from 3D, or bulk, materials.

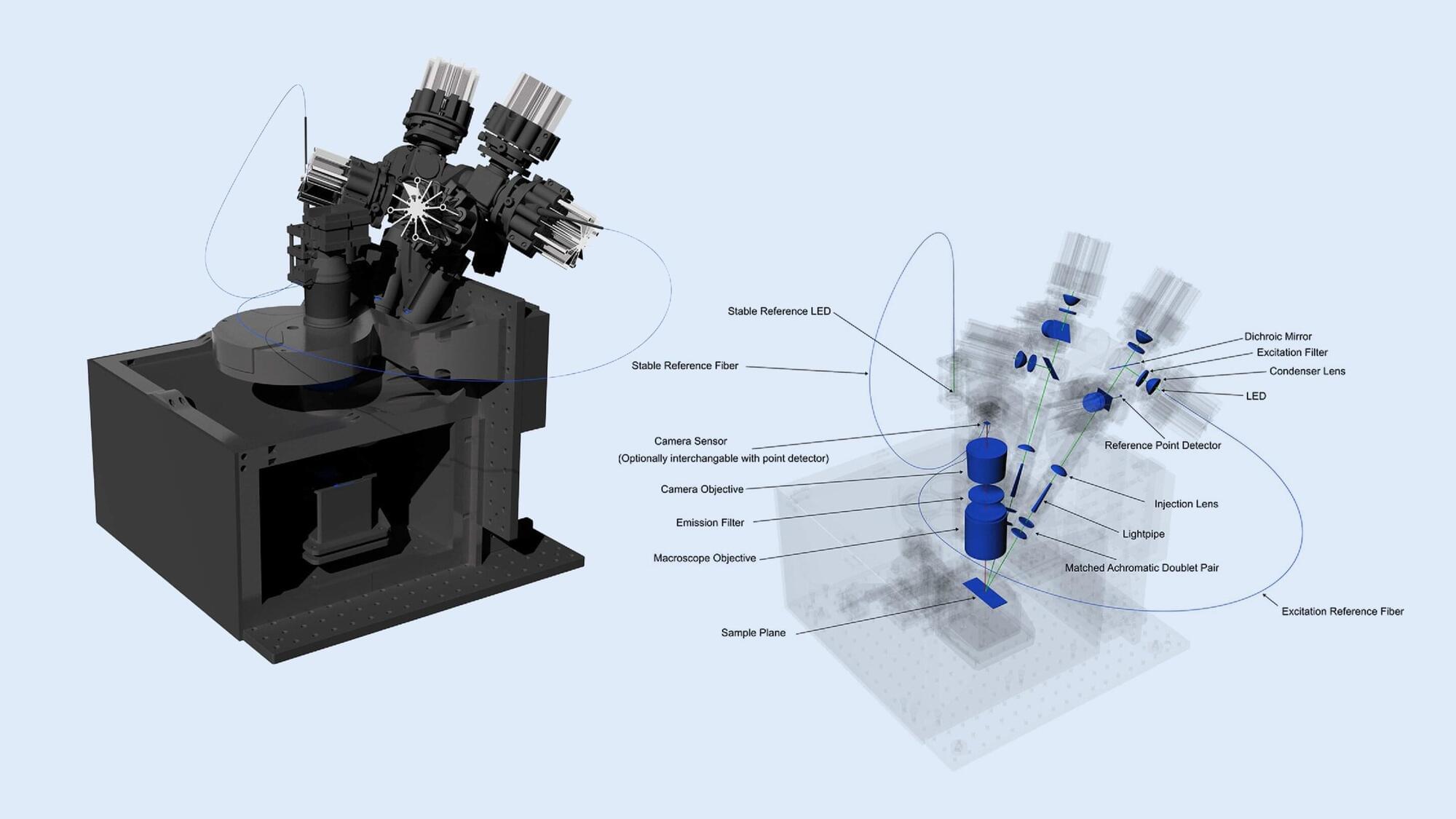

Open-source ‘macroscope’ offers dynamic luminescence imaging

A team of European researchers has developed a versatile, open-source luminescence imaging instrument designed to democratize access to advanced fluorescence and electroluminescence techniques across disciplines ranging from plant science to materials research.

The new system, detailed in Optics Express, offers an affordable and customizable alternative to bespoke laboratory setups and was developed with support from the DREAM project.

The device—described as a luminescence macroscope with dynamic illumination—combines flexibility, affordability, and precision in a single platform. Unlike conventional imaging instruments, which are often constrained by fixed optical architectures, the macroscope supports complex, time-resolved illumination and detection protocols.