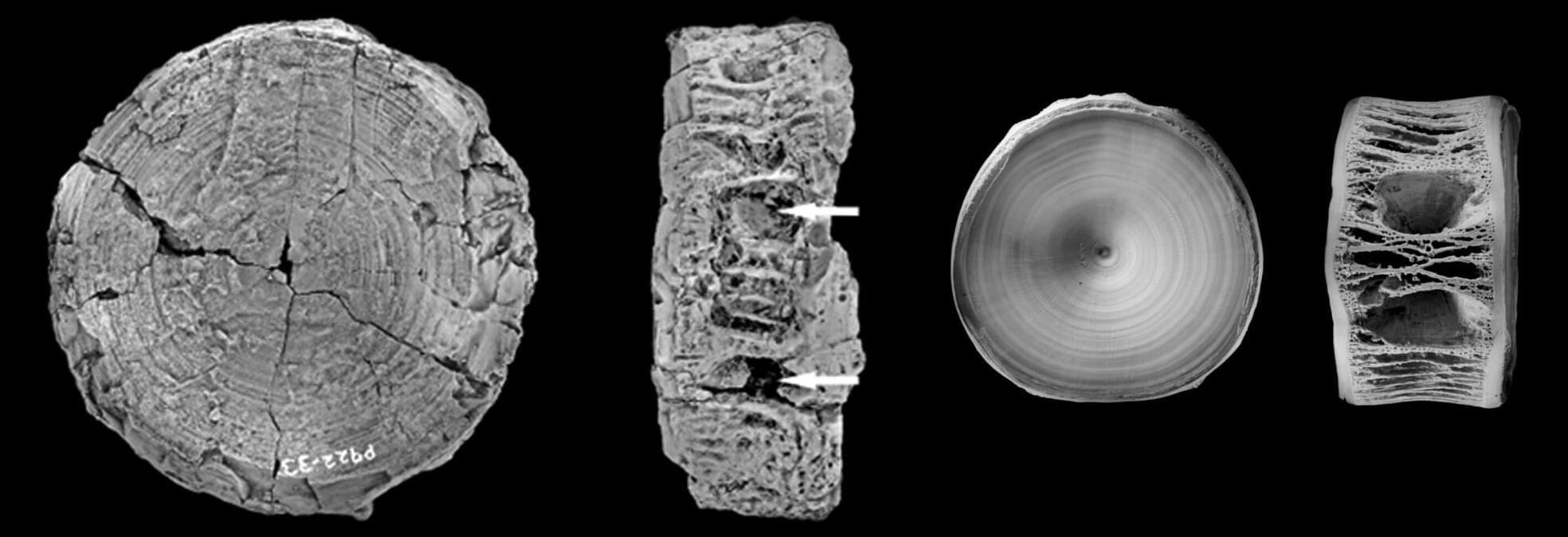

Research by Assistant Professor Jacob S. Suissa at the University of Tennessee, Knoxville, is revealing new complexity in how ferns have evolved. He found that the arrangement of vascular bundles inside fern stems did not change simply as a direct adaptation to the environment. Instead, shifts in that arrangement are developmentally linked to, and covary with, the placement of leaves on the stem.

“As leaf number increases, we see a direct 1:1 increase in vascular bundle number, and as the placement of leaves along the stem changes, we also see a shift in the arrangement of vascular bundles in the stem,” said Suissa, a member of UT’s Department of Ecology and Evolutionary Biology.

For 150 years, researchers have focused on how vascular bundles adapt to the environment. Suissa's new research, published in the journal Current Biology, suggests that leaves are instead steering the evolution of vascular patterns inside the stem.