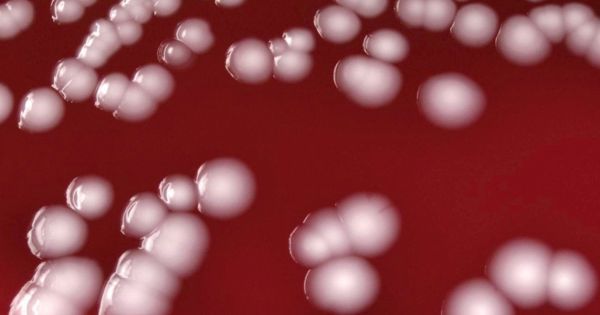

Antibiotic resistance continues to rise, and new drugs made to battle these increasingly formidable microbes could take more than a decade to develop. In an effort to stress the urgency of this rising resistance, the World Health Organization (WHO) created a list of the twelve deadliest superbugs we currently face.

The list is broken into three categories based on the severity of the threat (medium, high, or critical) that a given superbug poses. The three critical bacteria, Acinetobacter baumannii, Pseudomonas aeruginosa, and Enterobacteriaceae, are all already resistant to multiple drugs. One of these (Pseudomonas aeruginosa) actually explodes when it dies, making it even more deadly.

Pathogens that cause more common diseases, like food poisoning or gonorrhea, round out the rest of the list. Some big hitters include MRSA and Salmonella.