The metaverse was the next big thing and now it’s generative AI. We asked top tech executives to cut through the hype. They say this one’s for real.

Deepfake technology has been around for some time, and it is currently stirring controversy over the threats it could pose if it falls into the wrong hands. Even India’s business tycoon Anand Mahindra has raised alarm over hyper-realistic synthetic videos.

But some personalities are redefining the way viewers perceive deepfakes. For instance, David Guetta recently synthesized Eminem’s voice to hype up an event. And it’s only one of the many examples of people using artificial intelligence (AI) for entertainment. In fact, the technology has already reached social media platforms such as Twitch, where it is taking streaming to the next level.

Bachir Boumaaza is a YouTuber who once made a name for himself with record-breaking gaming feats, which he used to help numerous charities. But he became inactive, and most of his fans wondered where he had gone, thinking it might have been the end of his career.

From creator Brad Wright, who honed his expertise writing on three Stargate shows for 14 years, Travelers is a departure from traditional sci-fi tropes. It’s the kind of modern, grounded sci-fi that funnels its wriggling ball of time travel strands through the lenses of empathetic, endearing characters.

This team of time-traveling agents, called “travelers,” inhabits the bodies of people who are close to death. With the help of GPS coordinates, historical records and social media, the consciousnesses of the future travelers are inserted into the bodies of 21st-century civilians. It’s the cleanest way for the travelers to go back in time and complete their mission, utilizing the lives of those who were going to die anyway.

When it comes to DNA, one pesky mosquito turns out to be a rebel among species.

Researchers at Rice University’s Center for Theoretical Biological Physics (CTBP) are among the pioneers of a new approach to studying DNA. Instead of focusing on chromosomes as linear sequences of genetic code, they’re looking for clues on how their folded 3D shapes might determine gene expression and regulation.

For most living things, their threadlike chromosomes fold to fit inside the nuclei of cells in one of two ways. But the chromosomes of the Aedes aegypti mosquito—which is responsible for the transmission of tropical diseases such as dengue, chikungunya, Zika, mayaro and yellow fever—defy this dichotomy, taking researchers at the CTBP by surprise.

CarMax already uses this automated vehicle inspection system.

UVeye’s technology is reducing fatalities out on the road by harnessing the power of AI and high-definition cameras to detect faulty tires, fluid leaks and damaged components before a possible accident or breakdown.

Nanoscale defects and mechanical stress cause the failure of solid electrolytes.

A group of researchers has claimed to have found the cause of the recurring short-circuiting issues of lithium metal batteries with solid electrolytes. The team, which consists of members from Stanford University and SLAC National Accelerator Laboratory, aims to advance the battery technology, which is lightweight, nonflammable, energy-dense, and offers quick-charge capabilities. A long-lasting fix for the short-circuiting problem could help overcome barriers to the adoption of electric vehicles around the world.

A study published on January 30 in the journal Nature Energy details different experiments on how nanoscale defects and mechanical stress cause solid electrolytes to fail.

According to the team, the issue came down to mechanical stress, which was induced while recharging the batteries. “Just modest indentation, bending or twisting of the batteries can cause nanoscopic fissures in the materials to open and lithium to intrude into the solid electrolyte causing it to short circuit,” explained William Chueh, senior study author and an associate professor at the Stanford Doerr School of Sustainability.

Dust or other impurities introduced at the manufacturing stage could also cause the batteries to malfunction.

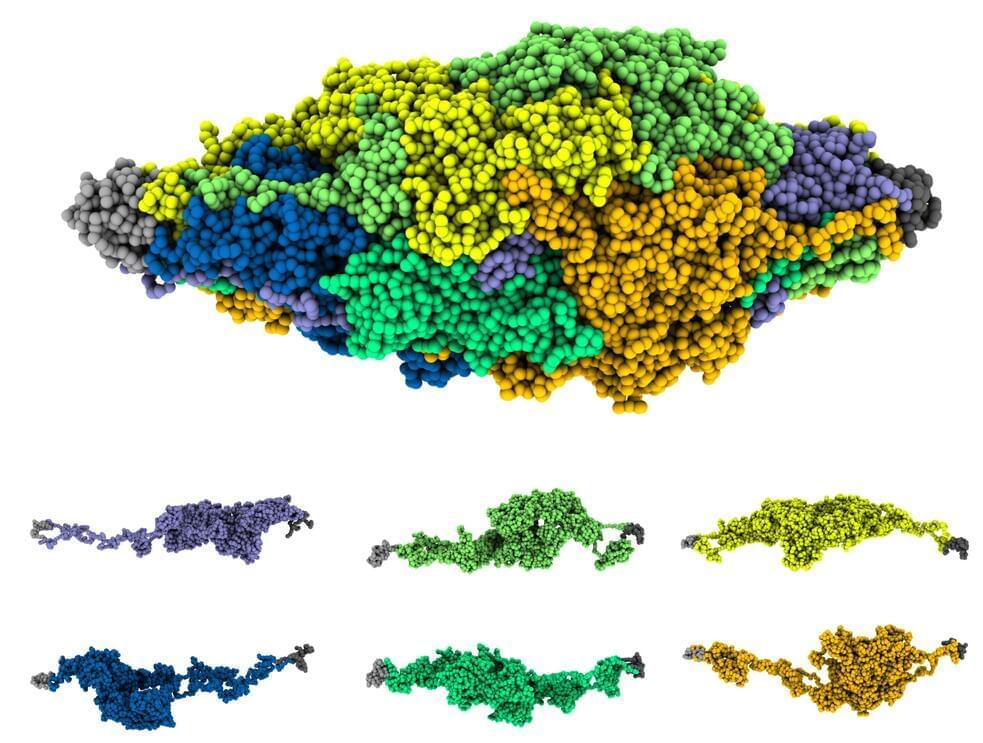

The first time a language model was used to synthesize human proteins.

Of late, AI models are really flexing their muscles. We have recently seen how ChatGPT has become a poster child for platforms that comprehend human languages. Now a team of researchers has tested a language model to create amino acid sequences, showcasing abilities to replicate human biology and evolution.

The language model, which is named ProGen, is capable of generating protein sequences with a certain degree of control. The result was achieved by training the model to learn the composition of proteins. The experiment marks the first time a language model was used to synthesize human proteins.

A study regarding the research was published in the journal *Nature Biotechnology* on Thursday. The project was a combined effort from researchers at the University of California-San Francisco, the University of California-Berkeley, and Salesforce Research, which is a science arm of a software company based in San Francisco.

## The significance of using a language model

Google worked to reassure investors and analysts on Thursday during its quarterly earnings call that it’s still a leader in developing AI. The company’s Q4 2022 results were highly anticipated as investors and the tech industry awaited Google’s response to the popularity of OpenAI’s ChatGPT, which has the potential to threaten its core business.

During the call, Google CEO Sundar Pichai talked about the company’s plans to make AI-based large language models (LLMs) like LaMDA available in the coming weeks and months. Pichai said users will soon be able to use large language models as a companion to search. An LLM, like ChatGPT, is a deep learning algorithm that can recognize, summarize and generate text and other content based on knowledge from enormous amounts of text data. Pichai said the models that users will soon be able to use are particularly good for composing, constructing and summarizing.

“Now that we can integrate more direct LLM-type experiences in Search, I think it will help us expand and serve new types of use cases, generative use cases,” Pichai said. “And so, I think I see this as a chance to rethink and reimagine and drive Search to solve more use cases for our users as well. It’s early days, but you will see us be bold, put things out, get feedback and iterate and make things better.”

Pichai’s comments about the possible ChatGPT rival come as a report revealed this week that Microsoft is working to incorporate a faster version of ChatGPT, known as GPT-4, into Bing, in a move that would make its search engine, which today has only a sliver of search market share, more competitive with Google. The popularity of ChatGPT has seen Google reportedly turning to co-founders Larry Page and Sergey Brin for help in combating the potential threat. The New York Times recently reported that Page and Brin had several meetings with executives to strategize about the company’s AI plans.

During the call, Pichai warned investors and analysts that the technology will need to scale slowly and that he sees large language model usage as still being in its “early days.” He also said that the company is developing AI with a deep sense of responsibility and that it will be careful when launching AI-based products: it plans to launch beta features first and then slowly scale up from there.

The results highlight some potential strengths and weaknesses of ChatGPT.

Some of the world’s biggest academic journal publishers have banned or restricted their authors’ use of the advanced chatbot ChatGPT. Because the bot uses information from the internet to produce highly readable answers to questions, the publishers are worried that inaccurate or plagiarised work could enter the pages of academic literature.

Several researchers have already listed the chatbot as a co-author in academic studies, and some publishers have moved to ban this practice. But the editor-in-chief of Science, one of the top scientific journals in the world, has gone a step further and forbidden any use of text from the program in submitted papers.

It’s not surprising the use of such chatbots is of interest to academic publishers. Our recent study, published in Finance Research Letters, showed ChatGPT could be used to write a finance paper that would be accepted for an academic journal. Although the bot performed better in some areas than in others, adding in our own expertise helped overcome the program’s limitations in the eyes of journal reviewers.

However, we argue that publishers and researchers should not necessarily see ChatGPT as a threat but rather as a potentially important aide for research — a low-cost or even free electronic assistant.