Amid controversy, industry goes all in on plastics pyrolysis.

Chemical companies are fully backing this plastic waste recycling process. To prove their detractors wrong, they will need to make it work.

In the past few years, a technology often hailed as a climate change hero has made its way into electric vehicles, where it has improved, but not solved, their cold-weather issues: the heat pump. Heat pumps move heat from outside the car into the cabin to keep passengers warm, so the car avoids drawing too much power from the battery. And yes, heat pumps can still bring warm air into the car even when it's freezing outside, albeit with mixed success. Counterintuitive as it sounds, air at, say, 10 degrees Fahrenheit still holds a good amount of heat that can be extracted.
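To put a number on the claim that frigid air still holds usable heat, the sketch below computes the ideal (Carnot) coefficient of performance (COP) for heating, T_hot / (T_hot - T_cold) in kelvin. It assumes an outside temperature of 10 °F and a cabin target of 70 °F; the cabin temperature and the helper names `fahrenheit_to_kelvin` and `carnot_heating_cop` are illustrative assumptions, and the result is a theoretical upper bound, not the performance of any particular car.

```python
def fahrenheit_to_kelvin(temp_f: float) -> float:
    """Convert degrees Fahrenheit to kelvin."""
    return (temp_f - 32.0) * 5.0 / 9.0 + 273.15


def carnot_heating_cop(outside_f: float, cabin_f: float) -> float:
    """Ideal heating COP: heat delivered per unit of work, T_hot / (T_hot - T_cold).

    Assumption: a perfectly reversible heat pump; real devices do far worse.
    """
    t_cold = fahrenheit_to_kelvin(outside_f)
    t_hot = fahrenheit_to_kelvin(cabin_f)
    if t_hot <= t_cold:
        raise ValueError("cabin must be warmer than the outside air")
    return t_hot / (t_hot - t_cold)


if __name__ == "__main__":
    # Assumed conditions: 10 °F outside, 70 °F cabin target.
    print(f"Carnot heating COP: {carnot_heating_cop(10.0, 70.0):.1f}")  # prints 8.8
```

A resistive heater converts electricity to heat one-for-one (a COP of 1), so even a heat pump that reaches only a fraction of this ideal bound can save battery energy. Real heat pumps lose efficiency as the outside air gets colder, which is consistent with the "mixed success" described above.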

Today, heat pumps come in many, but not all, new electric vehicles. Teslas have come with proprietary heat pump technology since 2021. Jaguar’s I-Pace has one built in, as do BMW’s latest i-series cars, Hyundai’s Ioniq 5, Audi’s newest e-Tron, and Kia’s new electrified flagship, the EV9.

“Any electric vehicle that comes out right now and doesn’t have a heat pump is a dinosaur already,” says John Kelly, an automotive technology professor and instructor focusing on hybrid and electric vehicle technology at Weber State University.

Archaeologists in Egypt have unearthed a cache of treasures—including more than 50 wooden sarcophagi, a funerary temple dedicated to an Old Kingdom queen and a 13-foot-long Book of the Dead scroll—at the Saqqara necropolis, a vast burial ground south of Cairo, according to a statement from the…

The team also discovered dozens of sarcophagi, wooden masks and ancient board games.

It has been known for more than a century that increases in neural activity in the brain drive changes in local blood flow, known as neurovascular coupling. The colloquial explanation for these increases in blood flow (referred to as functional hyperemia) in the brain is that they serve to supply the needs of metabolically active neurons. However, a large body of evidence is inconsistent with this idea. In most cases, baseline blood flow is adequate to supply even elevated neural activity. Neurovascular coupling is irregular, absent, or inverted in many brain regions, behavioral states, and conditions. Increases in respiration can raise brain oxygenation independently of flow changes. Simulations have shown that, given the architecture of the cerebral vasculature, areas with low blood flow are inescapable and cannot be removed by functional hyperemia. What physiological purpose might neurovascular coupling serve? Here, we discuss potential alternative functions of neurovascular coupling. It may serve to supply oxygen for neuromodulator synthesis, regulate cerebral temperature, signal to neurons, stabilize and optimize the cerebral vascular structure, deal with the non-Newtonian nature of blood, or drive the production and circulation of cerebrospinal fluid around and through the brain via arterial dilations. Understanding the ‘why’ of neurovascular coupling is an important goal that could give insight into the pathologies caused by cerebrovascular dysfunction.

Like all energy-demanding organs, the brain is highly vascularized. When presented with a sensory stimulus or cognitive task, increases in neural activity in many brain regions are accompanied by local dilation of arterioles and other microvessels, increasing local blood flow, volume and oxygenation. The increase in blood flow in response to increased neural activity (known as functional hyperemia) is controlled by a multitude of different signaling pathways via neurovascular coupling (reviewed in [1,2]). These vascular changes can be monitored non-invasively in humans and other species with techniques such as BOLD fMRI, which are cornerstones of modern neuroscience [3,4]. Chronic disruptions of neurovascular coupling have adverse health effects on the brain. Stress affects neurovascular coupling [5,6], and many neurodegenerative diseases are marked by vascular dysfunction [7].

Scientists hoped that capturing a time-lapse from Mars could reveal cloud or dust devil activity, leading to insights about the weather on the planet. The images were taken while the rover was parked on Nov. 8, 2023, just over 4,000 sols – Martian days – into the mission.

Though the images did not reveal any weather anomalies, scientists did get a detailed look at the planet’s surface.
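As a rough check on the "just over 4,000 sols" figure, the sketch below converts the Earth days elapsed since landing into Martian sols, using the mean Mars solar day of 24 h 39 min 35.244 s (about 2.7% longer than an Earth day). The text does not name the rover; this example assumes it is NASA's Curiosity, which landed on 6 August 2012 (UTC). That assumption, the whole-day date arithmetic, and the helper name `sols_elapsed` are illustrative only.

```python
from datetime import date

EARTH_DAY_S = 86_400.0     # seconds in an Earth day
MARS_SOL_S = 88_775.244    # mean Mars solar day: 24 h 39 min 35.244 s


def sols_elapsed(start: date, end: date) -> float:
    """Convert the whole Earth days between two dates into Mars sols."""
    earth_days = (end - start).days
    return earth_days * EARTH_DAY_S / MARS_SOL_S


if __name__ == "__main__":
    # Assumption: the rover is Curiosity, landed 6 August 2012 (UTC).
    landing = date(2012, 8, 6)
    imaging = date(2023, 11, 8)  # date the time-lapse images were taken
    print(f"{sols_elapsed(landing, imaging):.1f} sols")  # prints 4001.0 sols
```

Under that assumption, the 4,111 Earth days between landing and 8 November 2023 work out to roughly 4,001 sols, in line with "just over 4,000 sols" into the mission.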

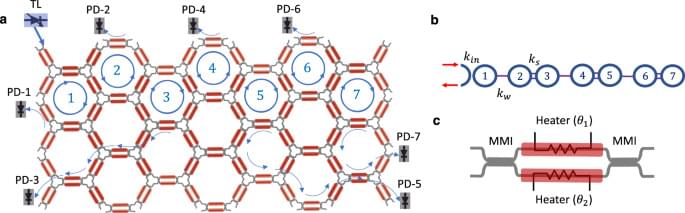

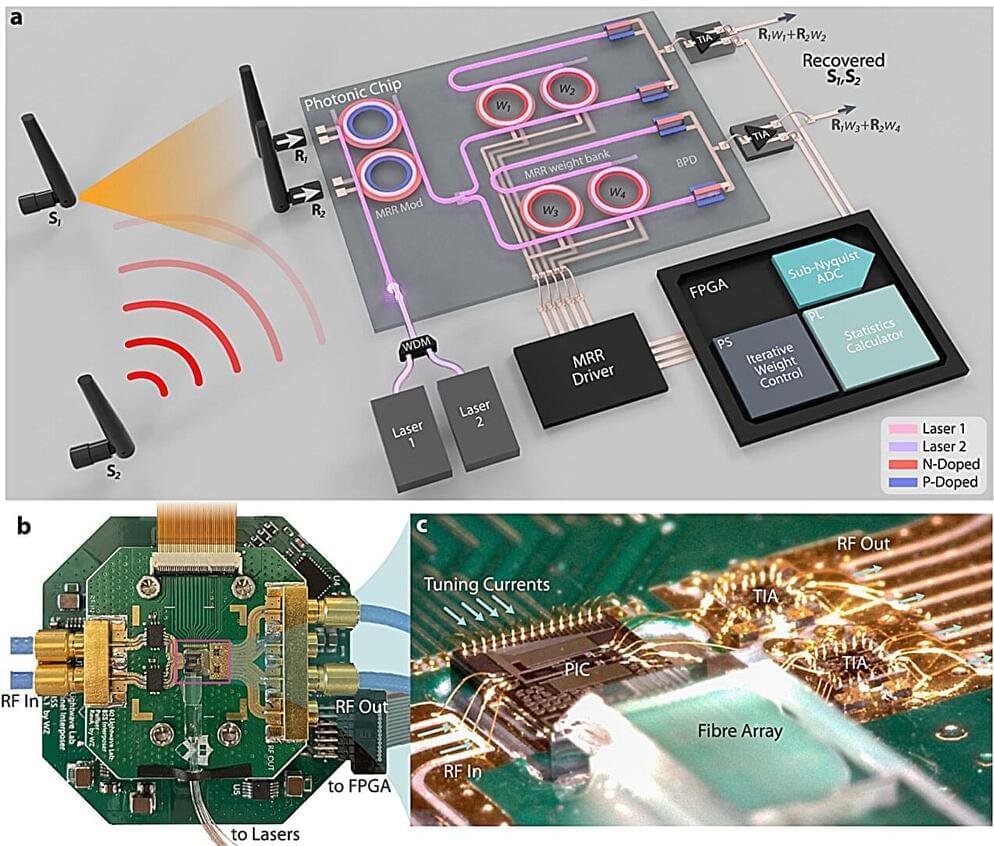

Radar altimeters are the sole indicators of altitude above terrain. Spectrally adjacent 5G cellular bands pose a significant risk of jamming these altimeters and disrupting aircraft landing and takeoff. As wireless technology expands its frequency coverage and makes greater use of spatial multiplexing, similar harmful radio-frequency (RF) interference is becoming a pressing issue.

Addressing this interference requires RF front ends with exceptionally low latency, which are crucial in industries such as transportation, health care, and the military, where the timeliness of transmitted messages is critical. Future generations of wireless technology will impose even more stringent latency requirements on RF front ends because of higher data rates, carrier frequencies, and user counts.

Additionally, the physical movement of transceivers causes the mixing ratio between the interference and the signal of interest (SOI) to vary over time. Mobile wireless receivers therefore need to adapt to fluctuating interference in real time, particularly when the SOI carries safety-of-life information for navigation and autonomous operation of vehicles such as aircraft and ground vehicles.
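To illustrate what real-time adaptation to a time-varying mixing ratio can look like, the sketch below uses a least-mean-squares (LMS) adaptive interference canceller. LMS is a classic, swapped-in technique chosen for illustration, not the method of the work excerpted here. It assumes the receiver has a clean reference copy of the interferer (for example, from an auxiliary antenna), a sinusoidal interferer, and a mixing gain that jumps abruptly halfway through to mimic a moving transceiver; the function names `lms_cancel` and `demo` and all parameter values are hypothetical.

```python
import math
import random


def lms_cancel(primary, reference, num_taps=4, mu=0.05):
    """LMS adaptive canceller (illustrative sketch).

    primary:   received samples = SOI + (time-varying gain) * interference
    reference: assumed clean copy of the interference
    Returns e[n] = primary[n] - w . x[n], which serves as the SOI estimate.
    """
    weights = [0.0] * num_taps
    history = [0.0] * num_taps  # most recent reference samples, newest first
    cleaned = []
    for d, x in zip(primary, reference):
        history = [x] + history[:-1]
        y = sum(w * h for w, h in zip(weights, history))  # interference estimate
        e = d - y                                         # residual = SOI estimate
        # Stochastic-gradient step that reduces the squared residual.
        weights = [w + mu * e * h for w, h in zip(weights, history)]
        cleaned.append(e)
    return cleaned


def demo(n=4000, seed=0):
    """Hypothetical scenario: the mixing gain jumps from 2.0 to -3.0 at n // 2."""
    rng = random.Random(seed)
    reference = [math.sin(2 * math.pi * 0.05 * k) for k in range(n)]
    soi = [0.1 * rng.gauss(0.0, 1.0) for _ in range(n)]  # weak noise-like SOI
    gain = [2.0 if k < n // 2 else -3.0 for k in range(n)]
    primary = [s + g * r for s, g, r in zip(soi, gain, reference)]
    return soi, primary, lms_cancel(primary, reference)


if __name__ == "__main__":
    soi, primary, cleaned = demo()
    tail = range(3000, 4000)  # well after the gain jump
    raw = sum((primary[k] - soi[k]) ** 2 for k in tail) / len(tail)
    res = sum((cleaned[k] - soi[k]) ** 2 for k in tail) / len(tail)
    print(f"interference power before: {raw:.3f}, after: {res:.6f}")
```

Because the weights keep updating on every sample, the canceller re-converges after the gain jump without being told that the mixing ratio changed. The step size `mu` trades tracking speed against steady-state residual, which is one reason latency and adaptability pull against each other in real receivers.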