Kamilla Cziráki, a geophysics student at the Faculty of Science of Eötvös Loránd University (ELTE), has taken a new approach to researching navigation systems that could be used on the surface of the moon during future missions.

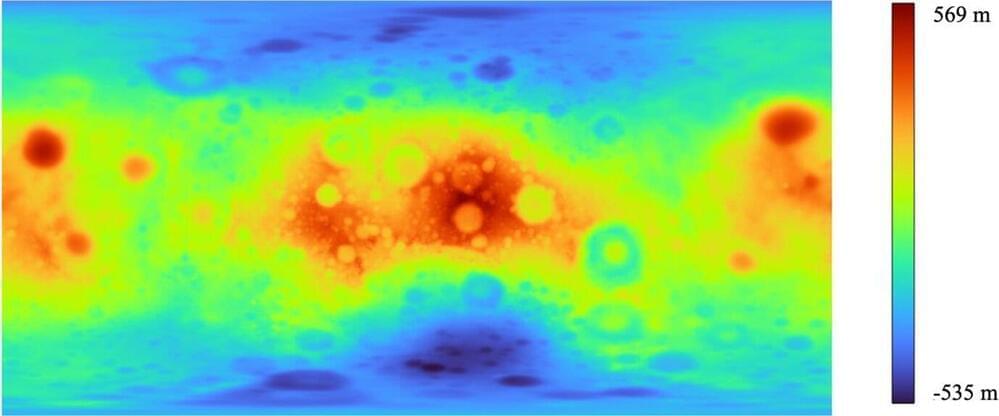

Working with Professor Gábor Timár, head of the Department of Geophysics and Space Sciences, Cziráki calculated the lunar counterparts of the parameters used in Earth's GPS system, using a method based on the work of the mathematician Fibonacci, who lived 800 years ago. Their findings have been published in the journal Acta Geodaetica et Geophysica.

Now, as humanity prepares to return to the moon after half a century, attention is turning to possible methods of lunar navigation. It seems likely that the modern successors to the lunar vehicles of the Apollo missions will be assisted by some form of satellite navigation similar to Earth's GPS. On Earth, these systems do not work with the actual shape of our planet, nor even with the geoid (the surface defined by mean sea level), but with an ellipsoid of revolution that best fits the geoid.