This was the shortest time between orbital launches at Cape Canaveral since 1966.

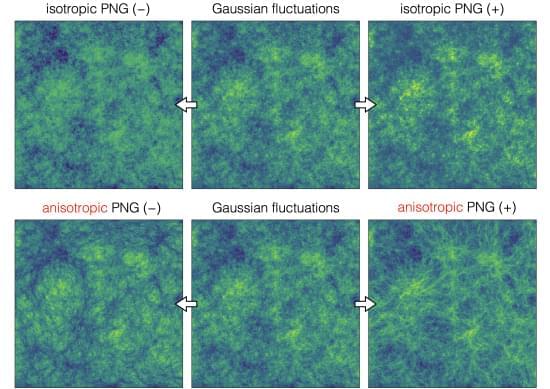

A team of researchers has analyzed more than one million galaxies to explore the origin of the present-day cosmic structures, reports a recent study published in Physical Review D as an Editors’ Suggestion.

To date, precise observations and analyses of the cosmic microwave background (CMB) and large-scale structure (LSS) have established the standard framework of the universe, the so-called ΛCDM model, in which cold dark matter (CDM) and dark energy (the cosmological constant, Λ) are the key components.

This model suggests that primordial fluctuations were generated in the early universe and acted as triggers, leading to the creation of everything in the universe, including stars, galaxies, and galaxy clusters, as well as their spatial distribution. Although very small when generated, the fluctuations grow with time under gravitational attraction, eventually forming dense regions of dark matter, or halos. Different halos then repeatedly collided and merged with one another, leading to the formation of celestial objects such as galaxies.

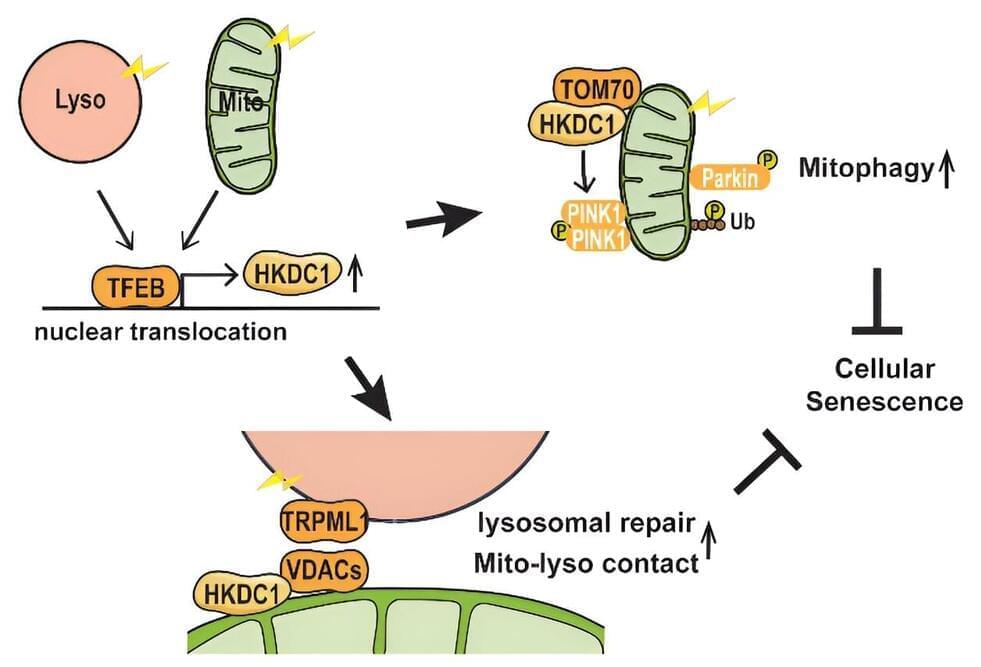

Just as healthy organs are vital to our well-being, healthy organelles are vital to the proper functioning of the cell. These subcellular structures carry out specific jobs within the cell; for example, mitochondria power the cell, and lysosomes keep the cell tidy.

Although damage to these two organelles has been linked to aging, cellular senescence, and many diseases, the regulation and maintenance of these organelles have remained poorly understood. Now, researchers at Osaka University have identified a protein, HKDC1, that plays a key role in maintaining these two organelles, thereby acting to prevent cellular aging.

There was evidence that a protein called TFEB is involved in maintaining the function of both organelles, but no targets of this protein were known. By comparing all the genes of the cell that are active under particular conditions and by using a method called chromatin immunoprecipitation, which can identify the DNA targets of proteins, the team was the first to show that the gene encoding HKDC1 is a direct target of TFEB, and that HKDC1 becomes upregulated under conditions of mitochondrial or lysosomal stress.

In a public lecture titled “The Meaning of Spacetime,” renowned physicist Juan Maldacena outlined ideas that arose from the study of quantum aspects of black holes.

Via Perimeter Institute

On July 27, Juan Maldacena, a luminary in the worlds of string theory and quantum gravity, will share his insights on black holes, wormholes, and quantum entanglement.

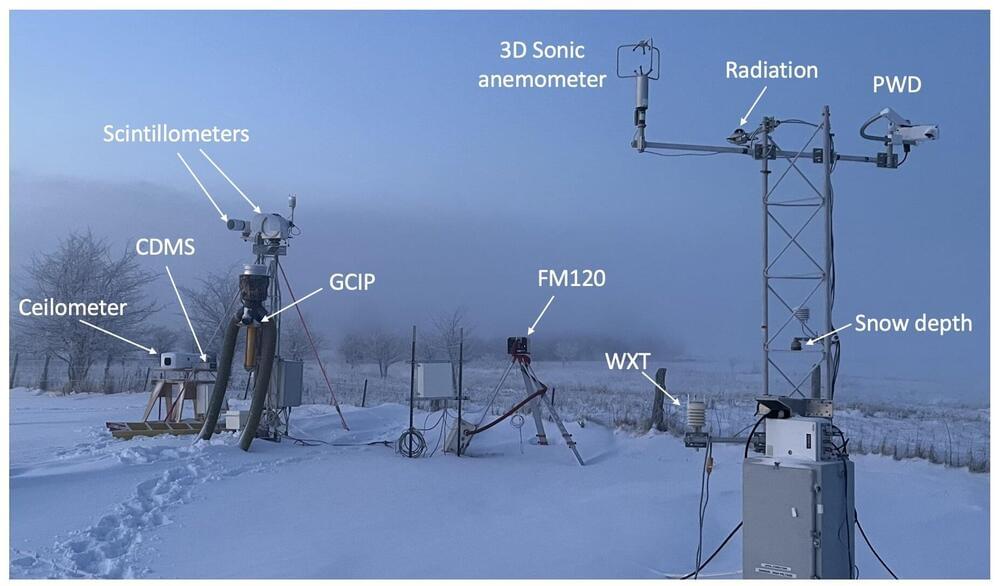

Of the world’s various weather phenomena, fog is perhaps the most mysterious, forming and dissipating near the ground as fluctuations in air temperature and humidity interact with the terrain itself.

While fog presents a major hazard to transportation safety, meteorologists have yet to figure out how to forecast it with the precision they have achieved for precipitation, wind and other stormy events.

This is because the physical processes resulting in fog formation are extremely complex, according to Zhaoxia Pu, a professor of atmospheric sciences at the University of Utah.

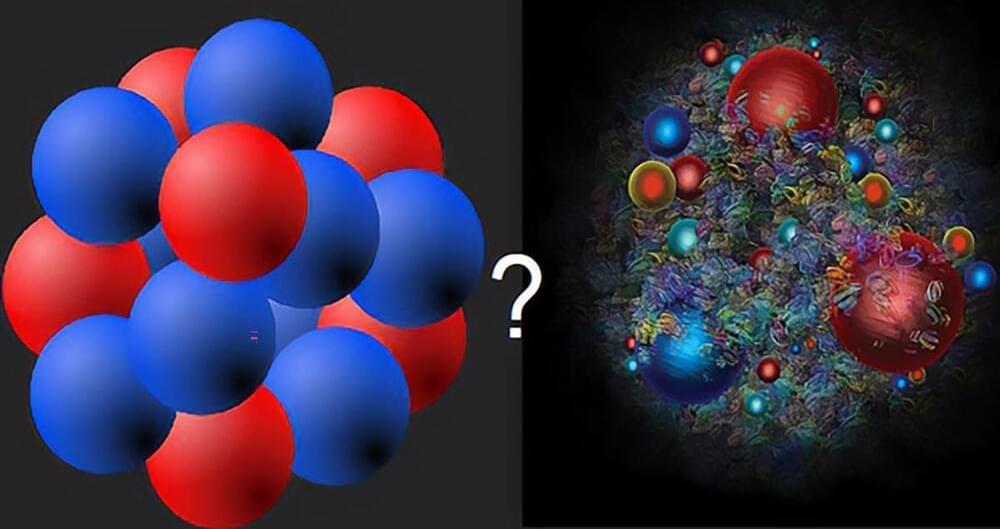

Matter inside neutron stars can have different forms: a dense liquid of nucleons or a dense liquid of quarks.

Recent studies reveal that in neutron stars, quark liquids are fundamentally different from nucleon liquids, as evidenced by the unique color-magnetic field in their vortices. This finding challenges previous beliefs in quantum chromodynamics and offers new insights into the nature of confinement.

The science of neutron star matter.

New research reveals a never-before-seen behavior in a repeating Fast Radio Burst, offering fresh insights into these mysterious cosmic phenomena.

Astronomers are continuing to unravel the mystery of deep space signals after discovering a never-before-seen quirk in a newly detected Fast Radio Burst (FRB).

FRBs are millisecond-long, extremely bright flashes of radio light that generally come from outside our Milky Way galaxy. Most happen only once but some “repeaters” send out follow-up signals, adding to the intrigue surrounding their origin.

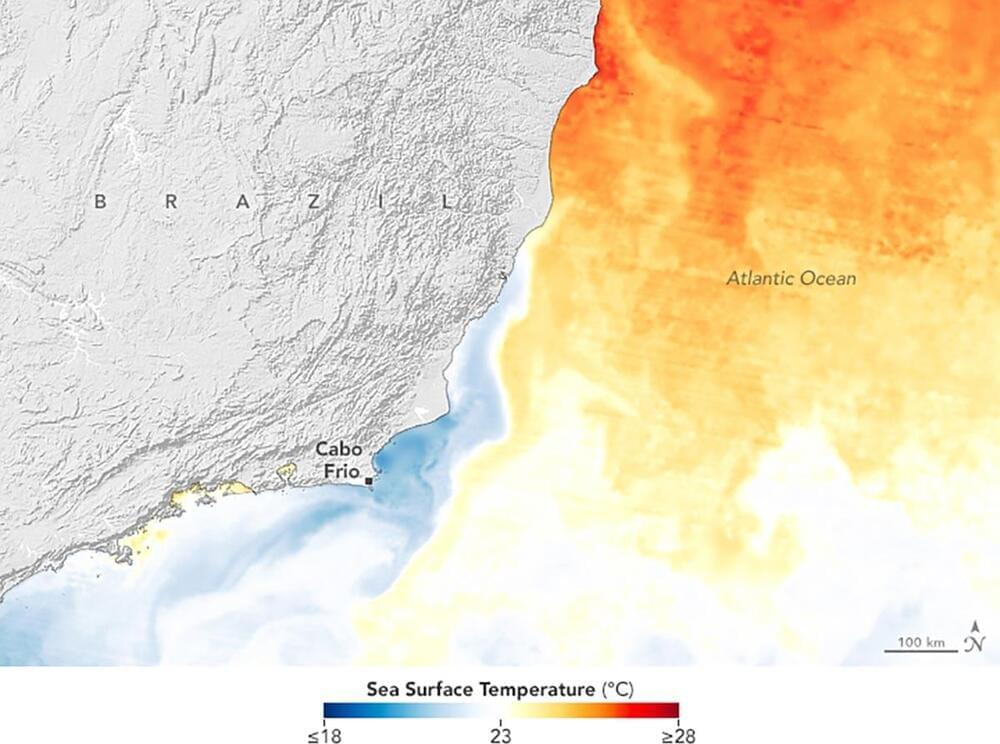

People have long noticed that the waters around Cabo Frio are unusually cool.

When European explorers first surveyed the coastline of what is now the state of Rio de Janeiro in the early 1500s, they encountered white sands, turquoise waters, shallow lagoons, and lush green mountains rising from the sea. The waters in one area, however, were unusually cool—so much so that the promontory in southeastern Brazil shown above was named Cabo Frio, Portuguese for “Cape Cold.”

Landsat 9’s OLI-2 (Operational Land Imager-2) captured this image of Cabo Frio’s diverse coastline on September 16, 2023. The map (below) shows that surface waters that day were cooler off Cabo Frio than in the surrounding areas. The pattern is common: upwelling of cold water from deeper in the ocean to the surface often chills Cabo Frio’s surface waters by several degrees.

Animals exhibit a diverse behavioral repertoire when exploring new environments and can learn which actions or action sequences produce positive outcomes. Dopamine release upon encountering reward is critical for reinforcing reward-producing actions [1–3]. However, it has been challenging to understand how credit is assigned to the exact action that produced dopamine release during continuous behavior. We investigated this problem with a novel self-stimulation paradigm in which specific spontaneous movements triggered optogenetic stimulation of dopaminergic neurons. Dopamine self-stimulation rapidly and dynamically changed the structure of the entire behavioral repertoire. Initial stimulations reinforced not only the stimulation-producing target action, but also actions similar to the target and actions that occurred a few seconds before stimulation. Repeated pairings led to gradual refinement of the behavioral repertoire to home in on the target. Reinforcement of action sequences revealed further temporal dependencies of refinement. Action pairs spontaneously separated by long time intervals promoted a stepwise credit assignment, with early refinement of actions most proximal to stimulation and subsequent refinement of more distal actions. Thus, a retrospective reinforcement mechanism promotes not only reinforcement, but also gradual refinement of the entire behavioral repertoire to assign credit to specific actions and action sequences that lead to dopamine release.

F.C. is the Director of Open Ephys Production Site.