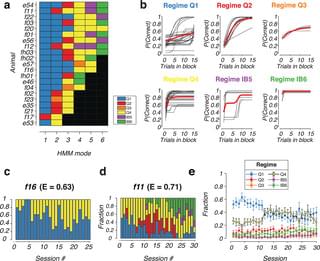

Humans and animals can use diverse decision-making strategies to maximize rewards in uncertain environments, but previous studies have not investigated the use of multiple strategies that involve distinct latent switching dynamics in reward-guided behavior. Here, using a reversal learning task, we showed that mice displayed much more variable behavior than would be expected from a single uniform strategy, suggesting that they mix multiple behavioral modes in the task. We developed a computational method to dissociate these learning modes from behavioral data, addressing the challenges that current analytical methods face when agents mix different strategies. We found that the use of multiple strategies is a key feature of rodent behavior even in the expert stages of learning, and we applied our tools to quantify the highly diverse strategies used by individual mice in the task. We further mapped these behavioral modes to two types of underlying algorithms: model-free Q-learning and inference-based behavior. These rich descriptions of underlying latent states provide a basis for detecting abnormal patterns of behavior in reward-guided decision-making.

Citation: Le NM, Yildirim M, Wang Y, Sugihara H, Jazayeri M, Sur M (2023) Mixtures of strategies underlie rodent behavior during reversal learning. PLoS Comput Biol 19: e1011430. https://doi.org/10.1371/journal.pcbi.

Editor: Alireza Soltani, Dartmouth College, UNITED STATES