An exploration of an extension of the Phosphorus Problem solution to the Fermi Paradox. It’s a far worse problem than we thought.

My Patreon Page:

/ johnmichaelgodier.

My Event Horizon Channel:

/ eventhorizonshow.

Links:

Gauging whether or not we dwell inside someone else’s computer may come down to advanced AI research—or measurements at the frontiers of cosmology.

The only people who absolutely disagree are, well, scientists. They need to get over themselves and join the fun.

Programming the Universe: A Quantum Computer Scientist Takes on the Cosmos. Seth Lloyd. xii + 221 pp. Alfred A. Knopf, 2006. $25.95.

In the 1940s, computer pioneer Konrad Zuse began to speculate that the universe might be nothing but a giant computer continually executing formal rules to compute its own evolution. He published the first paper on this radical idea in 1967, and since then it has provoked an ever-increasing response from popular culture (the film The Matrix, for example, owes a great deal to Zuse’s theories) and hard science alike.
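Zuse’s proposal is usually discussed in terms of cellular automata: a grid of cells whose next state is computed from the current state by a fixed local rule. The sketch below is only an illustration of that general idea, not Zuse’s own model. It swaps in Wolfram’s one-dimensional “elementary” cellular automaton numbering (rules 0 to 255), and the `step` and `run` helpers, grid width, and wrap-around boundary are hypothetical choices made for this example.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """Apply one update of an elementary cellular automaton.

    Each cell's next state depends only on itself and its two neighbours.
    The three-cell neighbourhood is read as a 3-bit number (0..7), and that
    bit of `rule` gives the new state. Boundaries wrap around (a ring).
    """
    n = len(cells)
    new = []
    for i in range(n):
        # cells[i - 1] wraps to the last cell when i == 0
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        pattern = (left << 2) | (centre << 1) | right
        new.append((rule >> pattern) & 1)
    return new


def run(width: int = 31, steps: int = 15, rule: int = 30) -> list[list[int]]:
    """Evolve a single 'on' cell for a number of steps; return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    # Print the space-time diagram: each line is one generation.
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Nothing outside the rule drives the evolution: each generation is computed purely from the previous one, which is the sense in which such a system “computes its own evolution.” Rule 30 is chosen here only because it produces visibly complex patterns from a trivial starting state.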


The effects of the quantum gravitational field are expected to become evident only when phenomena are observed at the Planck length scale (roughly 1.6 × 10⁻³⁵ m). It follows, on this view, that the tiniest constituents of our cosmos, far too small to be perceived directly, must exist at the scale of the Planck length.
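The Planck length is built from three constants, the reduced Planck constant ħ, the gravitational constant G, and the speed of light c, as l_P = √(ħG / c³). The short sketch below evaluates that formula, assuming the CODATA 2018 recommended values for the constants; the function name is just an illustrative choice.

```python
import math

# Assumed constants: CODATA 2018 recommended values, SI units.
HBAR = 1.054571817e-34  # reduced Planck constant, J*s
G = 6.67430e-11         # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light in vacuum, m/s (exact by definition)


def planck_length(hbar: float = HBAR, g: float = G, c: float = C) -> float:
    """Return the Planck length sqrt(hbar * G / c**3) in metres."""
    return math.sqrt(hbar * g / c**3)


if __name__ == "__main__":
    print(f"Planck length: {planck_length():.4e} m")
```

The result is about 1.616 × 10⁻³⁵ m, some twenty orders of magnitude smaller than a proton, which is why no current experiment can probe that scale directly.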

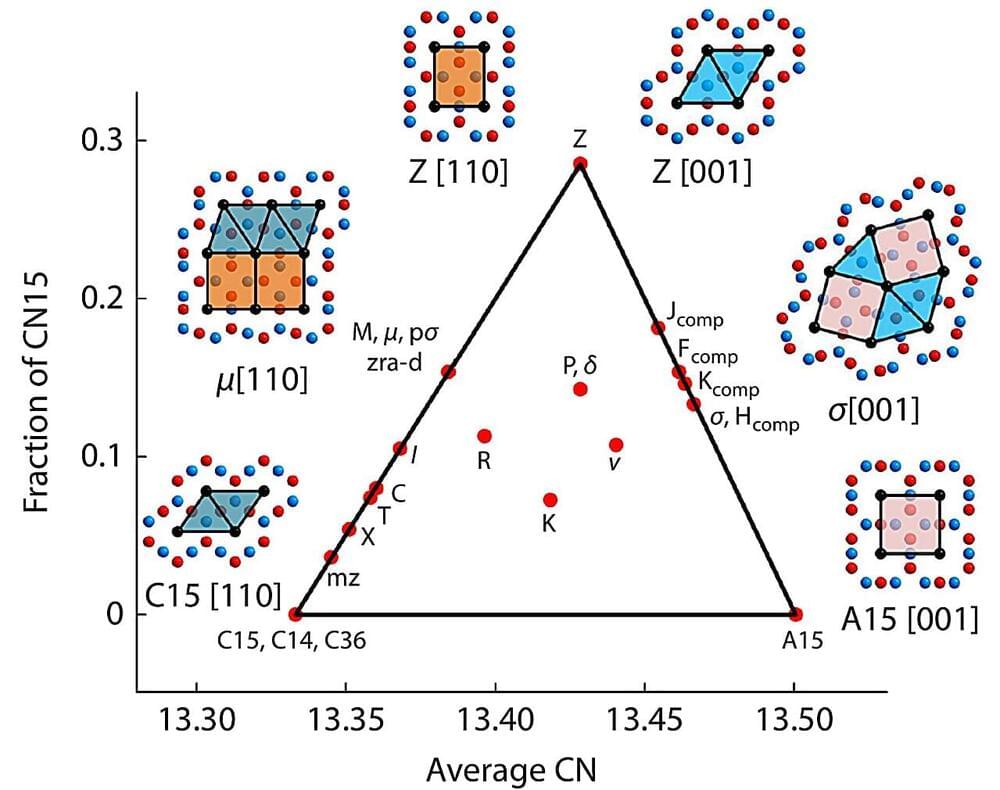

As traditional top-down approaches like photolithography reach their limitations in creating nanostructures, scientists are shifting their focus toward bottom-up strategies. Central to this paradigm shift is the self-assembly of homogeneous soft matter, a burgeoning technique with the potential to produce complex nano-patterns on a vast scale.

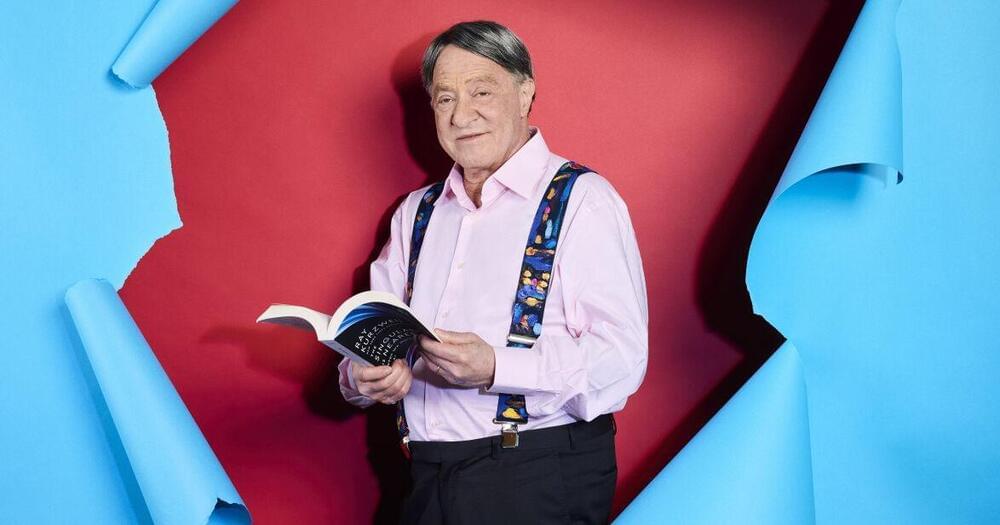

Unlike me, Kurzweil has been embracing AI for decades. In his 2005 book, The Singularity Is Near: When Humans Transcend Biology, Kurzweil made the bold prediction that AI would expand human intelligence exponentially, changing life as we know it. He wasn’t wrong. Now in his 70s, Kurzweil is upping the ante in his newest book, The Singularity Is Nearer: When We Merge with AI, revisiting his prediction of the melding of human and machine, with 20 additional years of data showing the exponential rate of technological advancement. It’s a fascinating look at the future and the hope for a better world.

Kurzweil has long been recognized as a great thinker. The son of a musician father and visual artist mother, he grew up in New York City and at a young age became enamored with computers, writing his first computer program at the age of 15.

While at MIT, earning a degree in computer science and literature, Kurzweil started a company that created a computer program to match high school students with colleges. In the ensuing years, he went on to found (and sell) multiple technology companies and to develop a series of inventions, including the first reading machine for the blind and the first music synthesizer capable of re-creating the grand piano and other orchestral instruments (inspired by meeting Stevie Wonder). He has authored 11 books.