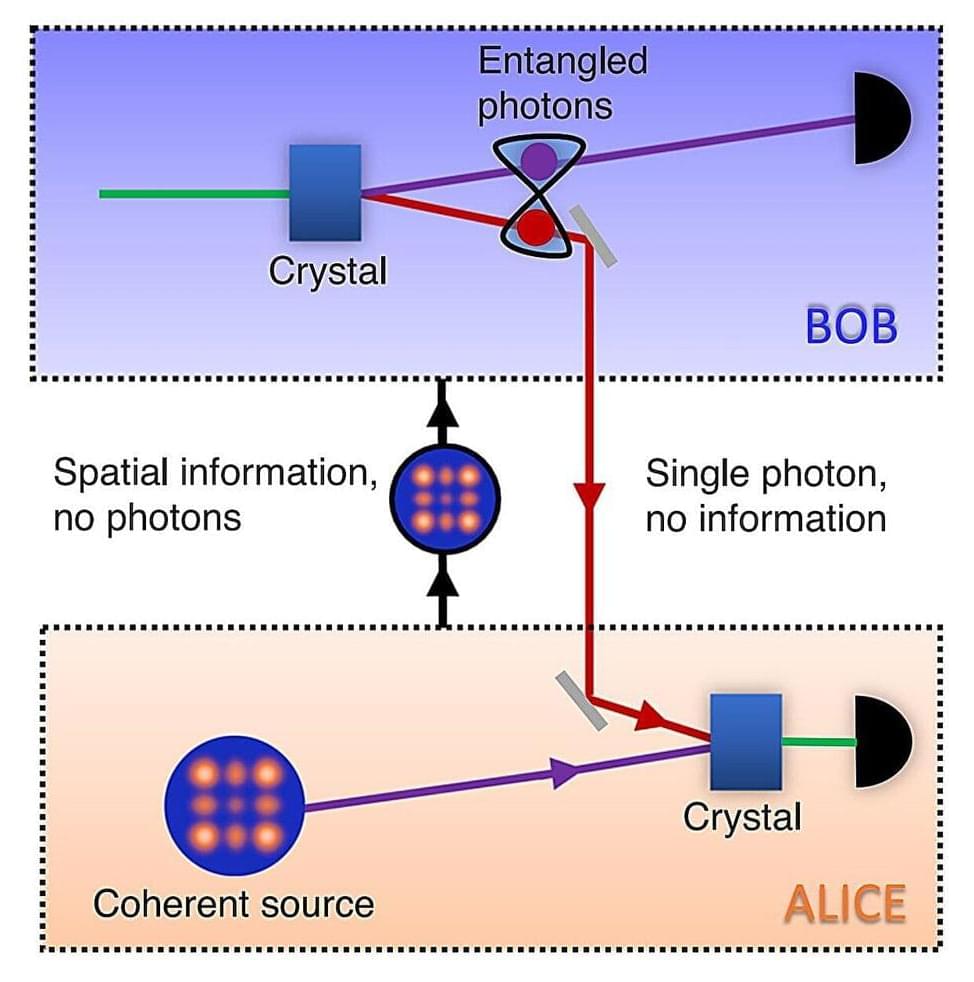

Research published in Nature Communications by an international team from Wits and ICFO-The Institute of Photonic Sciences demonstrates the teleportation-like transport of “patterns” of light. It is the first approach that can transport images across a network without physically sending the image, and a crucial step towards realizing a quantum network for high-dimensional entangled states.

Quantum communication over long distances is integral to information security, and it has already been demonstrated with two-dimensional states (qubits) over very long distances between satellites. This may seem sufficient when compared with its classical counterpart, i.e., sending bits encoded as 1s (signal) and 0s (no signal), one at a time.

However, quantum optics allows us to enlarge the alphabet and to securely describe more complex systems, such as a unique fingerprint or a face, in a single shot.
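To give a rough sense of what a larger alphabet buys, the sketch below counts how many symbols are needed to send a message when each symbol is drawn from an alphabet of a given size. It is an idealized counting argument only: it ignores loss, noise and everything specific to the experiment, and the function names, the 64-bit message length and the alphabet size of 16 are illustrative assumptions, not figures from the study.

```python
import math


def bits_per_symbol(dimension: int) -> float:
    """Upper bound on the bits one symbol can carry for an alphabet of this size."""
    if dimension < 2:
        raise ValueError("an alphabet needs at least two symbols")
    return math.log2(dimension)


def symbols_needed(message_bits: int, dimension: int) -> int:
    """Smallest number of symbols whose combinations cover every message of this length."""
    if dimension < 2:
        raise ValueError("an alphabet needs at least two symbols")
    target = 2 ** message_bits   # number of distinct messages to distinguish
    count, capacity = 0, 1
    while capacity < target:     # exact integer arithmetic, no rounding issues
        capacity *= dimension
        count += 1
    return count


# Illustrative comparison (hypothetical numbers): a 64-bit message
qubit_symbols = symbols_needed(64, 2)    # two-dimensional alphabet
qudit_symbols = symbols_needed(64, 16)   # sixteen-dimensional alphabet
print(qubit_symbols, qudit_symbols)
```

In this idealized picture, moving from a two-symbol to a sixteen-symbol alphabet cuts the number of symbols by a factor of four, because each symbol carries four bits instead of one.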