Saudi Arabia hopes that bankers will shell out cash once they see the progress on its futuristic desert city, Neom, in person.

I found this on NewsBreak:

The crew of the International Space Station has stumbled upon drug-resistant bacteria on board, leaving them baffled as to how the bacteria arrived.

Scientists working in the low-orbit laboratory have confirmed the discovery, which raises concerns that more robust bacteria capable of defying current treatments could evolve. The unique microgravity environment of the ISS is suspected to be a factor in the bacteria’s persistence.

The origin of the bacteria remains a mystery to the team, who cannot say how they might have been introduced to the station. Life in space presents different growth conditions, so organisms there can follow different evolutionary paths from their Earth-bound counterparts.

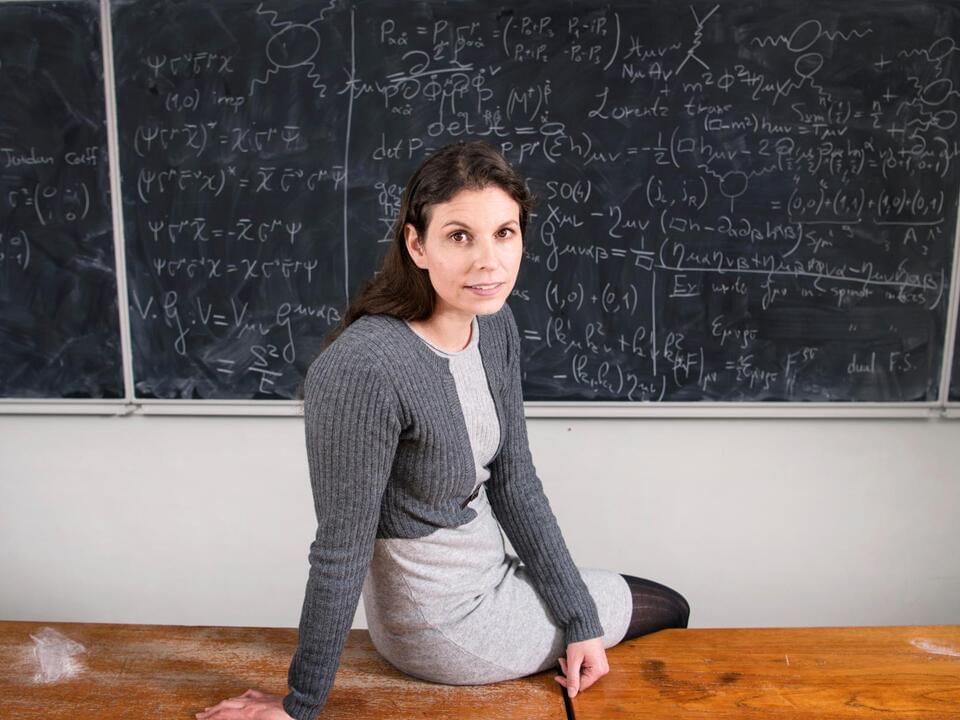

Physicist Claudia de Rham: ‘Gravity connects everything, from a person to a planet’

The scientist discusses training as a diver, pilot and astronaut in order to understand the true nature of gravity, and explains why being able to describe what happens at the centre of a black hole is so important.

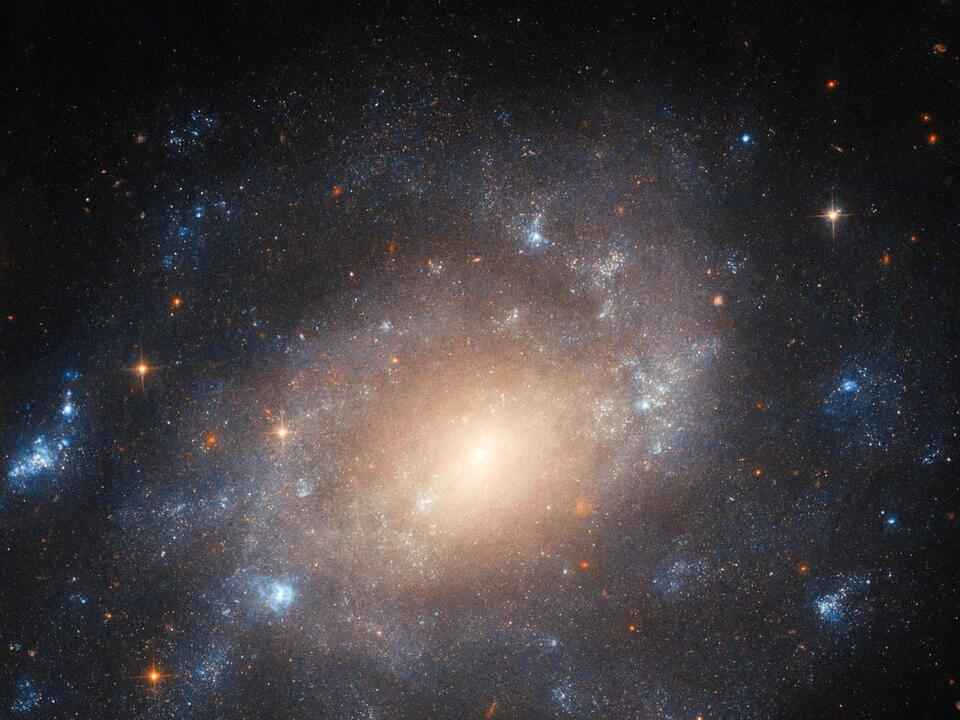

New models of Big Bang show that visible universe and invisible dark matter co-evolved

Physicists have long theorized that our universe may not be limited to what we can see. By observing gravitational forces on other galaxies, they’ve hypothesized the existence of “dark matter,” which would be invisible to conventional forms of observation.