The chip uses light instead of electrons to process information and could pave the way for smaller, faster chips with low thermal effects.

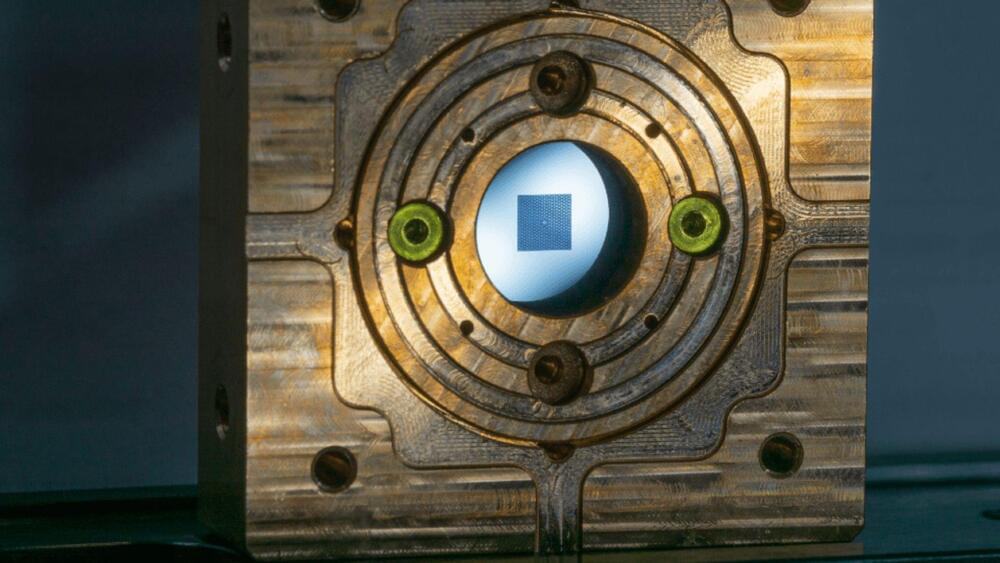

In a basement under the office at the University of Copenhagen, where Niels Bohr once conducted his research, the team toiled to demonstrate an innovative approach to storing quantum data – the quantum drum.

Made of ceramic, the small membrane of the drum has holes scattered around its edges in a neat pattern. When laser light strikes the membrane, it begins to beat. The sonic vibrations of the drum can be stored and forwarded.

Through their previous work, the researchers know that the membrane stays in a fragile quantum state and can, therefore, receive and transmit data without losing it.

A number of high-profile astronomers are set to convene at London’s Royal Society to question some of the most fundamental aspects of our understanding of the universe.

As The Guardian reports, the luminaries of cosmology will be re-examining some basic assumptions about the universe — right down to the over-a-century-old theory that it’s expanding at a constant rate.

“We are, in cosmology, using a model that was first formulated in 1922,” co-organizer and Oxford cosmologist Subir Sarkar told the newspaper, in an apparent reference to the year Russian astronomer Alexander Friedmann outlined the possibility of cosmic expansion based on Einstein’s general theory of relativity.

The expert consensus is that human-like machine intelligence is still a distant prospect, with only a 50–50 chance that it could emerge by 2059.

But what if there was a way to do it in less than half the time?

Visual Capitalist partnered with VERSES for the final entry in our AI Revolution Series to explore a potential roadmap to a shared or super intelligence that reduces the time required to as little as 16 years.

Herbert Ong Brighter with Herbert.

- Zocdoc: Go to https://www.zocdoc.com/ICED and download the Zocdoc App for FREE
- NetSuite: Take advantage of NetSuite’s FREE KPI checklist: https://www.netsuite.com/ICED
- Insider Clothing: Head to https://insider.clothing/IcedCoffeeHour and use code ICED15 for 15% off your order
- Hims: Start your free online visit today at https://hims.com/ich

- Follow Dr. Alok Kanojia and subscribe to him here: https://www.youtube.com/@HealthyGamerGG
- Want to understand your mental health? Check out Dr. K’s Guides to Mental Health https://bit.ly/4caPuiC
- Dr. K’s Book, How to Raise a Healthy Gamer https://bit.ly/3Pk8D8e

NEW: Join us at http://www.icedcoffeehour.club for premium content. Enjoy!

- Add us on Instagram: https://www.instagram.com/jlsselby https://www.instagram.com/gpstephan
- Official Clips Channel: https://www.youtube.com/channel/UCeBQ24VfikOriqSdKtomh0w
- For sponsorships or business inquiries reach out to: [email protected]
- For Podcast Inquiries, please DM @icedcoffeehour on Instagram!

Time Stamps:

- 0:00 - Intro
- 0:52 - This Is The RIGHT Way To Sit
- 6:59 - How To Know You’re ACTUALLY Happy
- 19:34 - How 99% of Things Are Out Of Your Control
- 24:13 - Who Is Dr. K? (Background)
- 26:18 - There Is No Such Thing As Good OR Bad
- 31:18 - Should You Go To Therapy?
- 33:26 - Dr. K’s Thoughts On Tony Robbins & Neuro-Linguistic Programming
- 39:03 - How To ACTUALLY Become Happy
- 1:02:33 - How Much Sacrifice Is Required To Be Successful?
- 1:14:09 - How To Get Into Your Flow State At Work
- 1:22:25 - Why Dr. K Thinks ‘Monk Mode’ is “Silly”
- 1:31:07 - Dr. K Explains Burn Out
- 1:39:07 - How Our Brains Can Experience “Hypothetical Pain” As REAL PAIN
- 1:42:47 - How To See NEGATIVES As POSITIVES
- 2:00:46 - Dr. K Explains The Whole Scale FAILURE of Our Traditional Institutions
- 2:16:26 - How Our Minds Are Being Controlled
- 2:46:28 - Is Social Media A GOOD or BAD Thing Overall?
- 2:49:24 - Dr. K’s Thoughts On Drama Bait YouTube Channels & Instagram ‘Gore’ Reels
- 2:57:27 - Dr. K On PORN & INCELS
- 3:13:19 - Should You Make Decisions For Your Significant Other?
- 3:17:41 - Why People Lie & The POWER Of Truth *Emotional*
- 3:28:30 - How Terminal Patients Learn How To Deal W/ Death
- 3:35:34 - How Dr. K Personally Deals With Trauma & Negativity
- 3:59:07 - Dr. K Brings Jack & Graham Through A Meditation Exercise
- 4:15:31 - Closing Thoughts

*Some of the links and other products that appear on this video are from companies which Graham Stephan will earn an affiliate commission or referral bonus.

The Limitless Pendant is part of the whole Limitless system, which the company is launching today. (Oh, and in case you’re wondering: yes, it’s very much a reference to the movie.) Siroker’s last AI product, Rewind, was an app that ran on your computer and would record your screen and other data in order to help you remember every tab, every song, every meeting, everything you do on your computer. (When the company first teased the Limitless Pendant, it was actually called the Rewind Pendant.) Limitless has similar aims, but instead of just running on your computer, it’s meant to collect data in the cloud and the real world, too, and make it all available to you on any device. Rewind is still around, for the folks who want the all-local, one-computer approach — but Siroker says the cross-platform opportunity is much bigger.

“The core job to be done is initially around meetings,” Siroker tells me. “Preparing you for meetings, transcribing meetings, giving you real-time notes of meetings and summaries of meetings.” For $20 a month, the app will capture audio from your computer’s mic and speakers, and you can also give it access to your email and calendar. With that combination — and ultimately all the other apps you use for work, Siroker says — Limitless can do a lot to help you keep track of conversations. What was that new app someone mentioned in the board meeting? What restaurant did Shannon say we should go to next time? Where did I leave off with Jake when we met two weeks ago? In theory, Limitless can get that data and use AI models to get it back to you anytime you ask.

Siroker and I are talking the day after the first reviews of the Humane AI Pin came out, and he’s careful to differentiate his company’s approach from these all-encompassing AI tools. “We’re trying to do a few things exceptionally well, not be a mile wide and an inch deep,” he says. “We’re not, you know, trying to reinvent the wheel with lasers.” His plan is to integrate into all the apps you use and put Limitless inside of those apps; you should be able to take notes in Notion or get action items in Slack, he thinks, instead of having to go to some other app entirely. “Why would I even have to make you log into my cloud based app, when I could just have you show up to the thing you’re already using?”

There are volcanoes erupting hundreds of millions of miles beyond Earth. And a NASA spacecraft is watching it happen.

The space agency’s Juno probe, which has orbited Jupiter since 2016, swooped by the gas giant’s volcanic moon Io last week, its last planned close flyby. The craft captured a world teeming with volcanoes, which you can see in the footage below.

“We’re seeing an incredible amount of detail on the surface,” Ashley Davies, a planetary scientist at NASA’s Jet Propulsion Laboratory who researches Io, told Mashable in February after a recent Io flyby. “It’s just a cornucopia of data. It’s just extraordinary.”