Researchers have demonstrated that magnetic spin waves called magnons can be controlled by voltage and thus could operate more efficiently as information carriers in future devices.

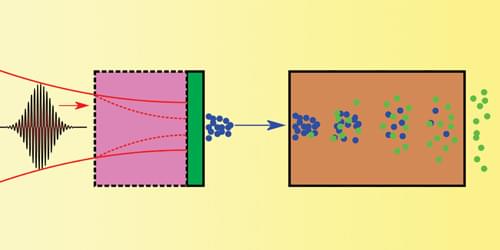

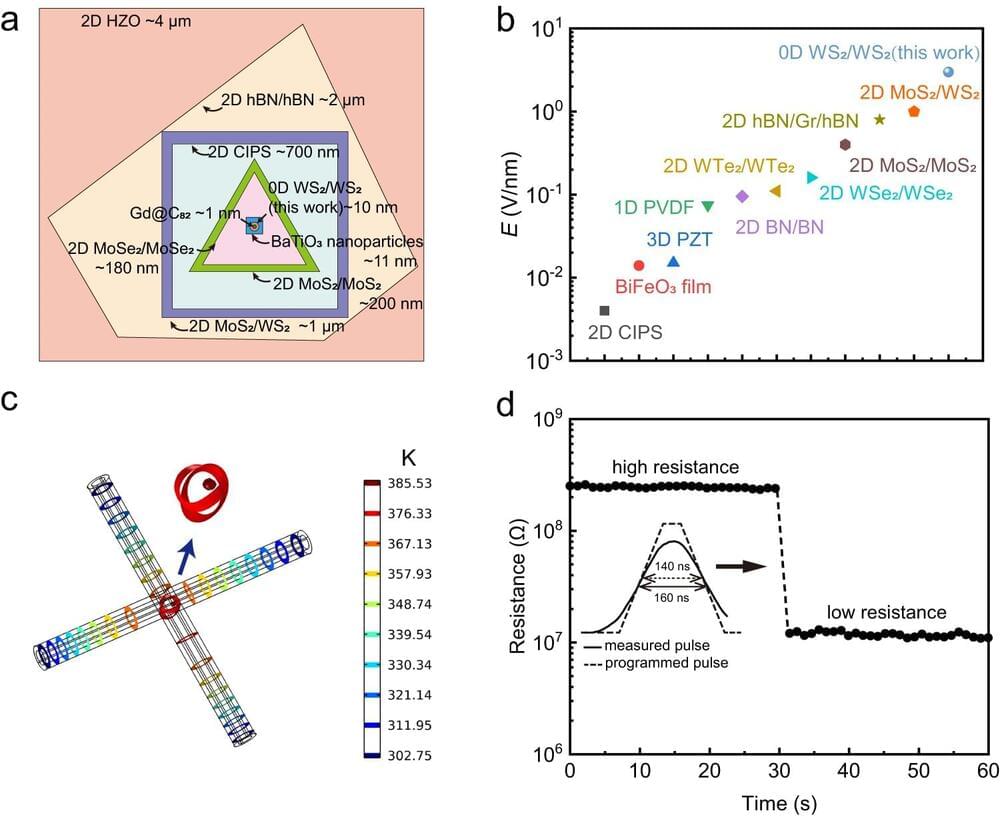

Magnonic devices are being developed to transmit signals, not with electrons, but with magnons—traveling waves in the magnetic ordering of a material. New work provides one of the missing elements of the magnonics toolbox: a voltage-controlled magnon transistor [1]. The device is made up of a magnetic insulator sandwiched between two metal plates. The researchers show that they can control the flow of magnons in the insulator through voltages applied to the plates. The results could lead to more-efficient magnonic devices.

A magnon can be imagined as a row of fixed magnetic elements, or “spins,” that tilt and rotate their orientations in a coordinated pattern. This “spin wave” can carry information through a material without any movement of charges, and it is the movement of charges that can cause undesirable heating in a circuit. Magnonics, though still in its infancy, is a potentially energy-efficient alternative to traditional electronics, says Xiu-Feng Han from the Chinese Academy of Sciences. The challenge right now for the magnonics field, he says, is developing practical versions of the four basic components of a magnonic circuit: a generator, a detector, a switch, and a transistor.