The synthesized yeast strain contains over 50% synthetic DNA and survives and replicates much like wild-type S. cerevisiae.

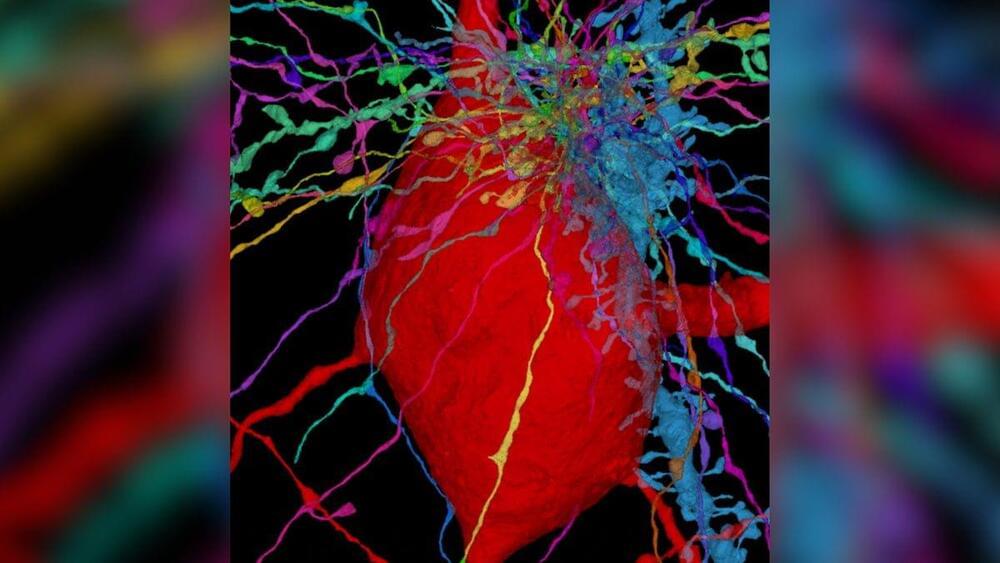

Summary: A new study reveals insights into how general anesthesia affects consciousness and sensory perception.

Using animal models, researchers found that while propofol anesthesia allows sensory information to reach the brain, it disrupts the spread of signals across the cortex. This suggests that consciousness requires synchronized communication throughout the brain, and that propofol’s ability to limit this interconnectivity could explain how it induces unconsciousness.

A new map of 56,000 cells in the outer layer of the human brain could inform research into a whole class of diseases.

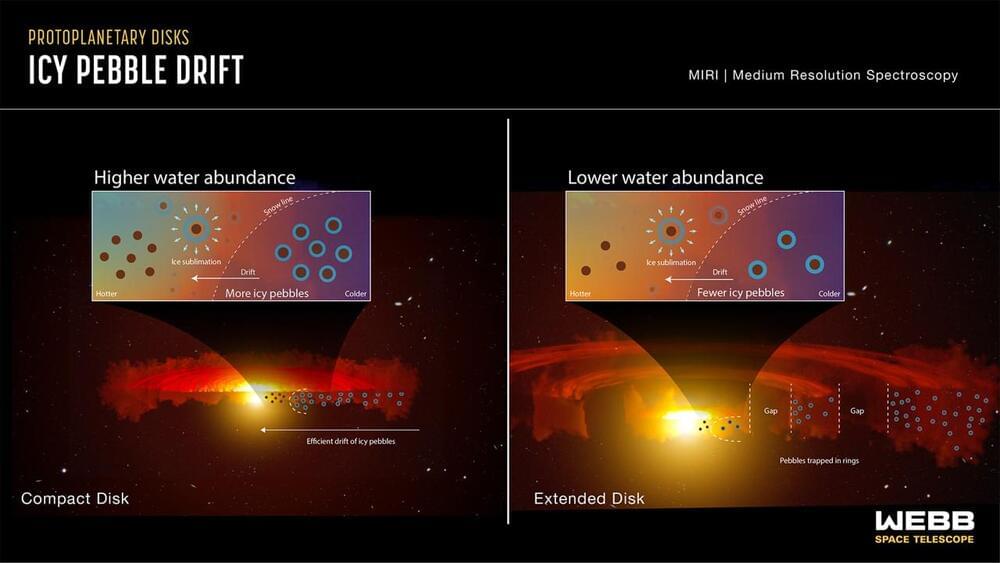

A recent study published in The Astrophysical Journal Letters reports a discovery made with the Mid-Infrared Instrument (MIRI) onboard NASA’s James Webb Space Telescope (JWST) that sheds light on planet formation, specifically on how water moves from the colder, outer regions of a protoplanetary disk to the warmer, inner regions. The study, conducted by an international team of researchers, could help astronomers understand the complex processes behind planet formation, including how our own solar system formed billions of years ago.

“Webb finally revealed the connection between water vapor in the inner disk and the drift of icy pebbles from the outer disk,” said Dr. Andrea Banzatti, who is an assistant professor of physics at Texas State University and lead author of the study. “This finding opens up exciting prospects for studying rocky planet formation with Webb!”

Using MIRI, which is sensitive to water vapor in protoplanetary disks, the researchers analyzed four disks orbiting Sun-like stars that are much younger than the Sun, only 2–3 million years old. Two of the disks are compact and two are extended. The compact disks were hypothesized to deliver ice-covered pebbles to a distance equivalent to Neptune’s orbit in our solar system, and the extended disks as far out as six times Neptune’s orbit. The goal was to determine whether the compact disks show more water in their inner regions, where rocky planets would theoretically form.
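For scale, taking Neptune’s mean orbital radius as about 30 AU (a standard value, not a figure from the study) and $1\ \text{AU} \approx 1.496\times10^{8}$ km, the two hypothesized pebble-delivery distances work out to roughly:

$$
r_{\text{compact}} \approx a_{\text{Neptune}} \approx 30\ \text{AU} \approx 4.5\times10^{9}\ \text{km},
\qquad
r_{\text{extended}} \approx 6\,a_{\text{Neptune}} \approx 180\ \text{AU} \approx 2.7\times10^{10}\ \text{km}
$$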

AI startup Hugging Face offers a wide range of data science hosting and development tools, including a GitHub-like portal for AI code repositories, models and datasets, as well as web dashboards to demo AI-powered applications.

But some of Hugging Face’s most impressive and capable tools these days come from a two-person team that formed only in January.

H4, as it’s called (short for “helpful, honest, harmless and huggy”), aims to develop tools and “recipes” that enable the AI community to build AI-powered chatbots along the lines of ChatGPT. In fact, ChatGPT’s release was the catalyst for H4’s formation, according to Lewis Tunstall, a machine learning engineer at Hugging Face and one of H4’s two members.

Humane has been teasing its first device, the AI Pin, for most of this year.

The company is trying to invent a new way to use your mobile devices.

The AI Pin sounds like a smartphone without a screen, and it will require a $24-per-month subscription on top of the cost of the device.

Meta has joined forces with Hugging Face, an open-source, community-driven platform that hosts machine learning models and tools, and Scaleway, a European cloud leader in AI infrastructure, to launch the “AI Startup Program”, an initiative aimed at accelerating the adoption of open-source artificial intelligence solutions within the French entrepreneurial ecosystem. With foundation models and generative AI models proliferating, the aim is to bring the economic and technological benefits of open, state-of-the-art models to the French ecosystem.

Located at STATION F in Paris, the world’s largest startup campus, and with the support of the HEC incubator, the programme will take five startups through the acceleration phase from January to June 2024. A panel of experts from Meta, Hugging Face and Scaleway will select projects that are built on open foundation models or that demonstrate a willingness to integrate these models into their products and services.

The selected startups will benefit from technical mentoring by researchers, engineers and PhD students from FAIR, Meta’s artificial intelligence research laboratory, as well as access to Hugging Face’s platform and tools and to Scaleway’s computing power, so they can build their services on open-source AI building blocks. In addition to large language models (LLMs), startups will be able to draw on foundation and research models for image and sound processing. Applications are open until 1 December 2023.

Soybean (Glycine max) is one of the most economically and societally important crops in the world, supplying a significant share of the protein in animal feed worldwide and playing key roles in oil production, manufacturing, and biofuels. In 2022, an estimated 4.3 billion bushels of soybeans were produced in the United States, almost 200 million bushels fewer than in the previous year.
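As a quick consistency check using only the rounded figures above (so the result is approximate), the implied previous-year production and the relative decline are:

$$
P_{2021} \approx 4.3 + 0.2 = 4.5\ \text{billion bushels},
\qquad
\frac{P_{2021} - P_{2022}}{P_{2021}} \approx \frac{0.2}{4.5} \approx 4.4\%
$$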

Driven by growing demand for soy-based animal feed, the USDA projects that soybean acreage will increase by 19.6% by 2032. Hybrid breeding could raise the productivity of one of the most widely planted and consumed crops in the Americas, yet it remains largely unexplored in soybean.
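The projection can be written as a simple growth factor on a baseline acreage $A_0$. The baseline year is not stated here; assuming, purely for illustration, a 2022 baseline (a ten-year horizon), the equivalent compound annual growth rate $r$ is:

$$
A_{2032} = (1 + 0.196)\,A_0 = 1.196\,A_0,
\qquad
r = 1.196^{1/10} - 1 \approx 1.8\%\ \text{per year}
$$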

New research by scientists at the Donald Danforth Plant Science Center and Cornell University provides a key enabling technology for obligate outcrossing in soybean. The study, “Introduction of barnase/barstar in soybean produces a rescuable male sterility system for hybrid breeding,” published in the Plant Biotechnology Journal, shows that obligate outcrossing with the barnase/barstar lines can be used to amplify hybrid seed set, enabling large-scale trials of heterosis (hybrid vigor) in this major crop.
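The logic of a rescuable barnase/barstar system can be sketched as a toy two-locus cross. This sketch assumes the textbook version of the mechanism (barnase, a ribonuclease expressed in the anthers, prevents viable pollen; barstar inhibits barnase and restores fertility), unlinked loci, and simple Mendelian segregation. The line names and genotypes are hypothetical and do not reproduce the study’s constructs or data.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Assumption: barnase and barstar sit at two unlinked loci and segregate independently.
LOCI = ("barnase", "barstar")


def gametes(parent: dict) -> Counter:
    """Gamete frequencies; a parent maps each locus to an allele pair (1 = transgene, 0 = none)."""
    freqs = Counter()
    for alleles in product(*(parent[locus] for locus in LOCI)):
        freqs[alleles] += Fraction(1, 2 ** len(LOCI))
    return freqs


def cross(mother: dict, father: dict) -> dict:
    """Offspring phenotype frequencies under the simple barnase/barstar rule."""
    outcome = Counter()
    for (egg, p_egg), (pollen, p_pollen) in product(gametes(mother).items(), gametes(father).items()):
        has_barnase = egg[0] + pollen[0] > 0
        has_barstar = egg[1] + pollen[1] > 0
        # Barnase blocks pollen development unless barstar is present to inhibit it.
        sterile = has_barnase and not has_barstar
        outcome["male-sterile" if sterile else "male-fertile"] += p_egg * p_pollen
    return dict(outcome)


# Hypothetical lines: a hemizygous barnase female and a homozygous barstar restorer.
female = {"barnase": (1, 0), "barstar": (0, 0)}
restorer = {"barnase": (0, 0), "barstar": (1, 1)}
wild_type = {"barnase": (0, 0), "barstar": (0, 0)}

print(cross(female, restorer))   # all hybrid offspring are male-fertile
print(cross(female, wild_type))  # half inherit barnase alone and are male-sterile
```

Because the barnase female cannot self-pollinate, every seed it sets comes from outcrossing; pairing it with a barstar pollen donor then yields hybrid offspring whose fertility is restored, which is what makes the sterility "rescuable" in this simplified model.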

The D’Onghia magnetic shielding crew hat, or CREW HaT, is a system that uses electromagnetic coils to deflect cosmic radiation away from astronauts. The system consists of:

- A ring of electrical coils mounted on arms roughly 5 meters from the spacecraft’s main body
- A Halbach torus, a circular array of magnets that strengthens the field on one side while weakening it on the other
- Superconducting tapes

When switched on, the system generates an extended magnetic field outside the spacecraft that deflects incoming cosmic radiation.
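Magnetic shielding works because a charged particle moving through a magnetic field is bent onto a curved path of radius $r = p / (|q| B)$. The sketch below computes that gyroradius for protons in an idealized uniform field; the 1 T field strength and the particle energies are hypothetical illustration values, not CREW HaT specifications, and a real Halbach torus field is far from uniform.

```python
import math

C = 299_792_458.0                  # speed of light in m/s
PROTON_REST_ENERGY_GEV = 0.938272  # proton rest energy m_p c^2 in GeV


def momentum_gev(kinetic_gev: float, rest_gev: float = PROTON_REST_ENERGY_GEV) -> float:
    """Relativistic momentum times c (in GeV) from kinetic energy T: pc = sqrt(T^2 + 2 T mc^2)."""
    return math.sqrt(kinetic_gev ** 2 + 2.0 * kinetic_gev * rest_gev)


def gyroradius_m(kinetic_gev: float, field_tesla: float, charge_number: int = 1,
                 rest_gev: float = PROTON_REST_ENERGY_GEV) -> float:
    """Radius r = p / (|q| B) of a particle moving perpendicular to a uniform field B.

    With pc in GeV, p / (e B) = pc * 1e9 / (c * B), since 1 GeV = 1e9 * e joules.
    """
    pc = momentum_gev(kinetic_gev, rest_gev)
    return pc * 1e9 / (C * abs(charge_number) * field_tesla)


if __name__ == "__main__":
    # Hypothetical values: protons of several energies in an idealized 1 T uniform field.
    for kinetic in (0.1, 1.0, 10.0):
        print(f"{kinetic:5.1f} GeV proton -> r = {gyroradius_m(kinetic, 1.0):6.2f} m at 1 T")
```

With these assumed numbers, a 1 GeV proton follows a circle of radius about 5.7 m. The radius grows with momentum and shrinks as the field strengthens, which is why deflecting energetic cosmic rays calls for strong fields extended over meters of space.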