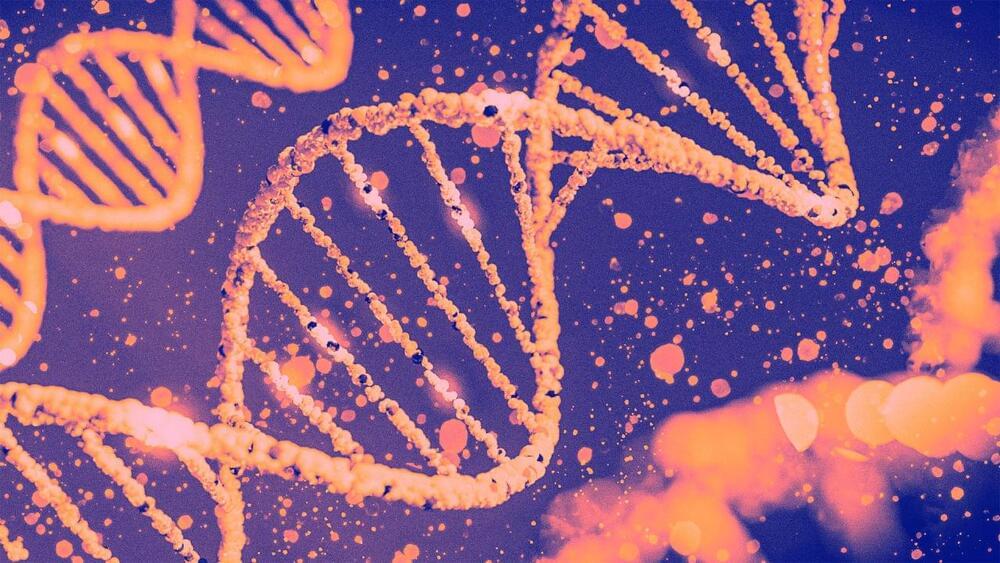

2023 was the year that CRISPR gene editing sliced its way out of the lab and into the public consciousness, and into the American medical system. The Food and Drug Administration recently approved the first CRISPR gene-editing therapy, Casgevy (or exa-cel), a treatment from CRISPR Therapeutics and partner Vertex for patients with sickle cell disease. This comes on the heels of a similar green light by U.K. regulators, a historic moment for a gene-editing technology whose foundations were laid back in the 1980s and whose development eventually earned pioneering CRISPR scientists Jennifer Doudna and Emmanuelle Charpentier the 2020 Nobel Prize in Chemistry.

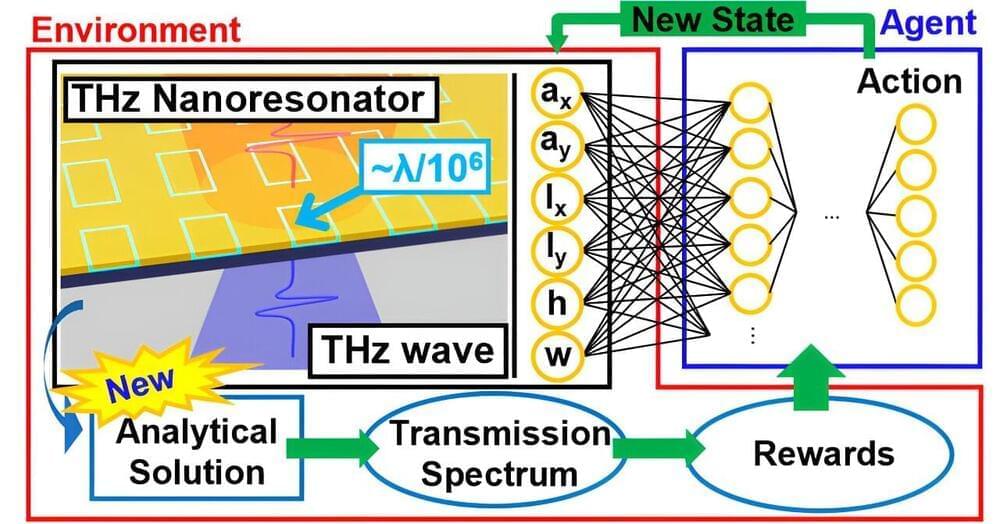

That decades-long gap between the initial scientific spark, widespread academic recognition, and now the market entry of a potential cure for blood disorders like sickle cell disease that afflict hundreds of thousands of people around the world is telling. If past is prologue, the even newer CRISPR gene-editing approaches being studied today could, in future decades, treat diseases ranging from cancer and muscular dystrophy to heart disease; yield more resilient livestock and plants that can withstand climate change and new strains of deadly viruses; and even upend the energy industry by tweaking bacterial DNA to create more efficient biofuels. And novel uses of CRISPR, with assists from other technologies like artificial intelligence, might fuel even more precise, targeted gene editing, in turn accelerating future discovery with implications for just about any industry that relies on biological material, from medicine to agriculture to energy.

"With new CRISPR discoveries guided by AI, specifically, we can expand the toolbox available for gene editing, which is crucial for therapeutic, diagnostic, and research applications… but also a great way to better understand the vast diversity of microbial defense mechanisms," said Feng Zhang, another CRISPR pioneer, molecular biologist, and core member at the Broad Institute of MIT and Harvard, in an emailed statement to Fast Company.