In a recent paper, SFI Professor David Wolpert, SFI Fractal Faculty member Carlo Rovelli, and physicist Jordan Scharnhorst examine a longstanding, paradoxical thought experiment in statistical physics and cosmology known as the “Boltzmann brain” hypothesis—the possibility that our memories, perceptions, and observations could arise from random fluctuations in entropy rather than reflecting the universe’s actual past. The work is published in the journal Entropy.

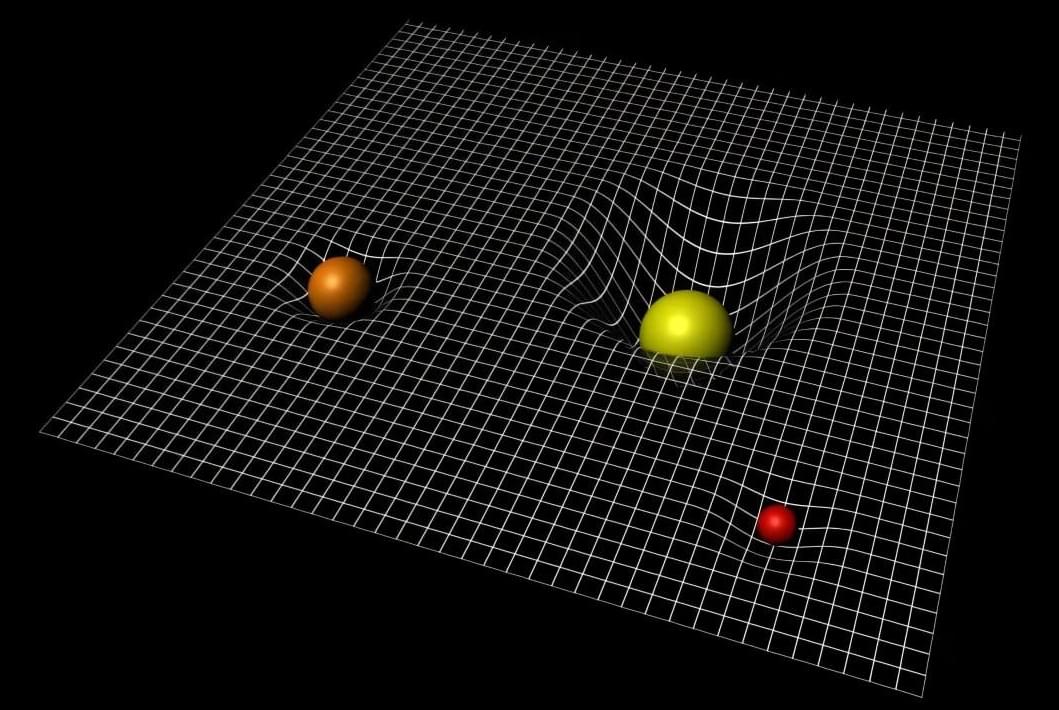

The paradox arises from a tension at the heart of statistical physics. One of the central pillars of our understanding of the second law of thermodynamics, which is asymmetric in time, is Boltzmann's H theorem. Paradoxically, however, the H theorem is itself symmetric in time.

That time symmetry implies that, formally speaking, it is far more likely for the structures of our memories, perceptions, and observations to arise from random fluctuations in the universe's entropy than to be genuine records of the external universe's actual past. In other words, statistical physics seems to force us to conclude that our memories might be spurious: elaborate illusions, produced by chance, that tell us nothing about the past they appear to record. This is the Boltzmann brain hypothesis.