The human brain consumes vast amounts of energy, which is almost exclusively generated from a form of metabolism that requires oxygen. While the efficient and timely delivery of oxygen is known to be critical to healthy brain function, the precise mechanics of this process have largely remained hidden from scientists.


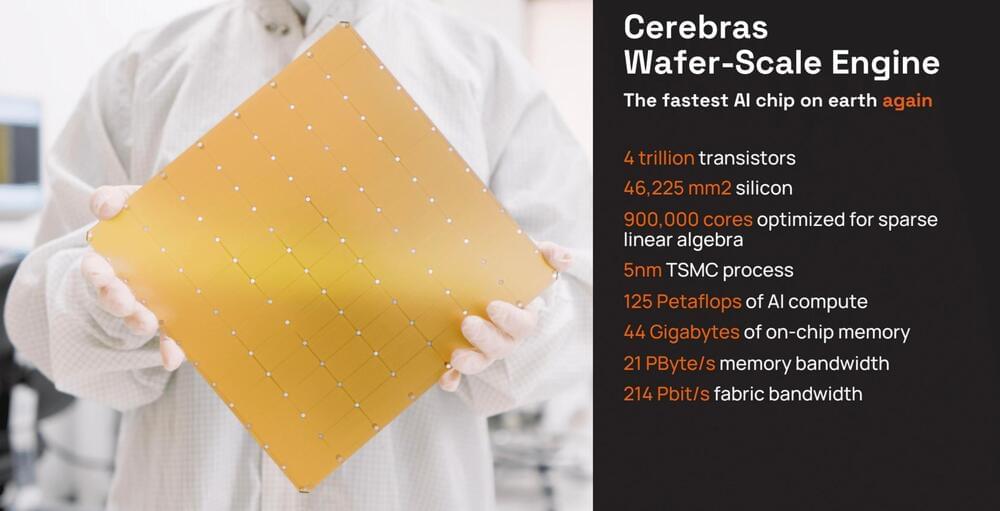

Cerebras Update: The Wafer Scale Engine 3 Is A Door Opener

Cerebras held an AI Day, and in spite of the concurrently running GTC, there wasn’t an empty seat in the house.

As we have noted, Cerebras Systems is one of the very few startups that is actually getting some serious traction in training AI, at least from a handful of clients. The company just introduced the third generation of its Wafer-Scale Engine, a monster of a chip that can outperform racks of GPUs, as well as a partnership with Qualcomm to provide custom training and Go-To-Market collaboration with the Edge AI leader. Here are a few takeaways from the AI Day event. Lots of images from Cerebras, but they tell the story quite well! We will cover the challenges this bold startup still faces in the Conclusions at the end.

As the third generation of wafer-scale engines, the new WSE-3, along with the CS-3 system in which it runs, is an engineering marvel. While Cerebras likes to compare it to a single GPU chip, that’s really not the point, which is to simplify scaling. Why cut up a wafer of chips, package each with HBM, put the package on a board, connect to CPUs with a fabric, then tie them all back together with networking chips and cables? That’s a lot of complexity, and it leads to a lot of programming to distribute the workload via various forms of parallelism and then tie everything back together into a supercomputer. Cerebras thinks it has a better idea.

Genetic Variants and Cannabis: Unraveling Risk Factors for CUD

“The increases in THC levels found in cannabis could mimic some of the more pronounced effects that we see for people who are slower metabolizers,” said Dr. Christal Davis.

How can genetics influence cannabis consumption? This is what a recent study published in Addictive Behaviors hopes to address, as a team of researchers investigated how genetic variants influence the way a person metabolizes THC, which could determine not only future use but also the chances of developing cannabis use disorder, or CUD. This study holds the potential to help cannabis users, medical professionals, legislators, and the public better understand the physiological influences of cannabis use, even at the molecular level.

For the study, the researchers enlisted 54 participants between 18–25 years of age, 38 of whom suffered from CUD while the remaining 16 suffered from non-CUD substance abuse. It has been determined that individuals aged 18–25 have a three times greater likelihood of having CUD compared to individuals over the age of 26. After obtaining blood samples from each participant, the researchers tested them for differences in gene markers, specifically pertaining to THC-metabolizing enzymes. Additionally, each participant was instructed to fill out a questionnaire regarding their experiences with cannabis use and how it makes them feel when they use it.

In the end, the researchers found notable differences between male and female participants: young women with CUD were found to metabolize THC at slower rates than young women who did not suffer from CUD. Among the men, those who metabolized THC at slower rates reported negative experiences with cannabis use, a pattern the researchers observed in both sexes. Additionally, the researchers found that CUD was more prevalent in individuals who started using cannabis as teenagers. The researchers concluded that proper treatment options for CUD could be proposed due to lack of genetic testing.

Sam Bankman-Fried sentenced to 25 years in prison for orchestrating FTX fraud

Sam Bankman-Fried was sentenced Thursday to 25 years in prison for his role in defrauding users of the collapsed cryptocurrency exchange FTX. In a Lower Manhattan federal courtroom, U.S. District Judge Lewis Kaplan called the defense’s argument misleading, logically flawed, and speculative.

Bankman-Fried, wearing a beige jailhouse jumpsuit, struck an apologetic tone, saying he had made a series of “selfish” decisions while leading FTX and “threw it all away.”

“It haunts me every day,” he said in his statement.

Prosecutors had sought as much as 50 years, while Bankman-Fried’s legal team argued for no more than 6½ years. He was convicted on seven criminal counts in November and had been held at the Metropolitan Detention Center in Brooklyn since.

Is AI’s next big leap understanding emotion? $50M for Hume says yes

At a time when other AI assistants and chatbots are also beefing up their own voice interaction capabilities — as OpenAI just did with ChatGPT — Hume AI may have just set a new standard in mind-blowing human-like interactivity, intonation, and speaking qualities.

One obvious potential customer, rival, or would-be acquirer that comes to mind in this case is Amazon, which remains many people’s preferred voice assistant provider through Alexa, but which has since de-emphasized its voice offerings internally and stated it would reduce headcount in that division.

Asked by VentureBeat: “Have you had discussions with or been approached for partnerships/acquisitions by larger entities such as Amazon, Microsoft, etc? I could imagine Amazon in particular being quite interested in this technology as it seems like a vastly improved voice assistant compared to Amazon’s Alexa,” Cowen responded via email: “No comment.”