Scientists developed memristors for data storage out of common mushrooms, taking a major step forward in organic computing.

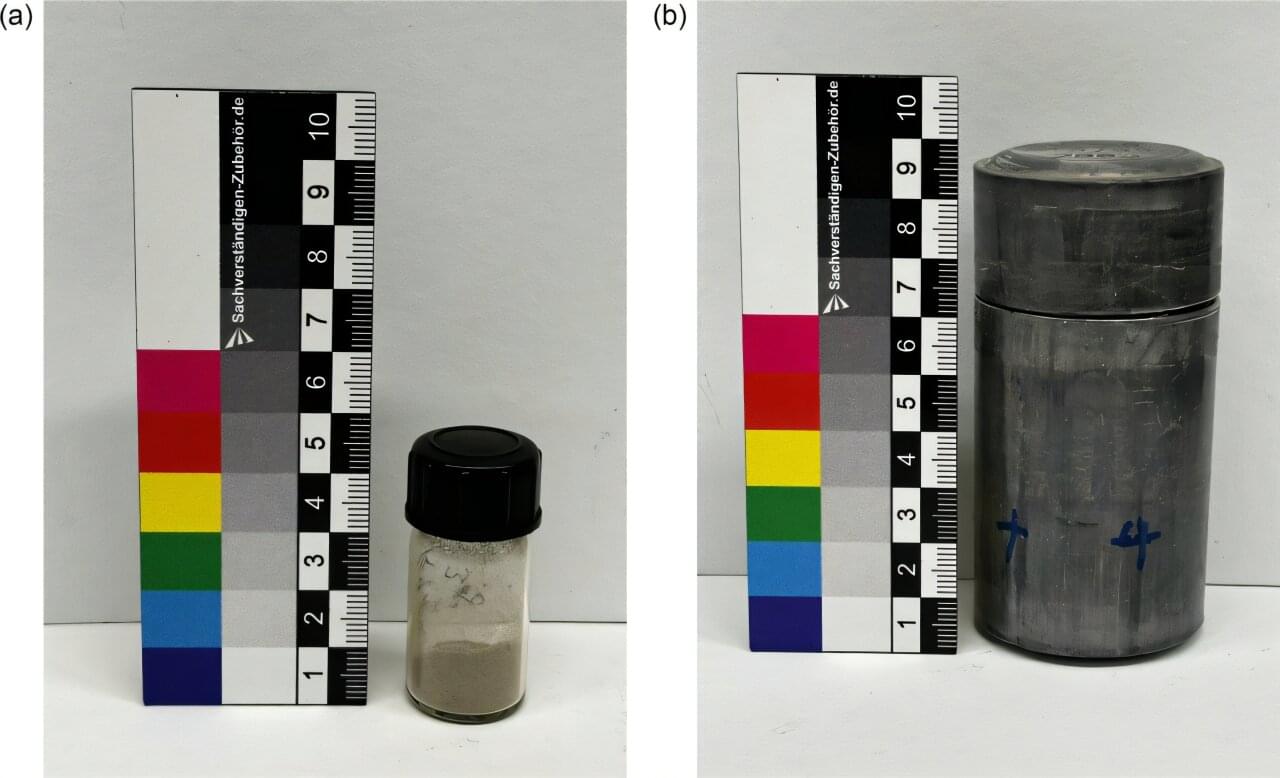

For the first time, a research team from the University of Cologne has observed the electron capture decay of technetium-98, an isotope of the chemical element technetium (Tc). Electron capture decay is a process in which an atomic nucleus “captures” an electron from one of the atom’s inner shells. The electron merges with a proton in the nucleus to form a neutron, turning the element into a different one. The working group from the Nuclear Chemistry department has thus confirmed a decades-old theoretical assumption.
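In nuclear notation, the capture step described above can be written as a short reaction. This is a sketch rather than a formula from the study: the daughter nuclide, molybdenum-98, is not named in the article and is inferred here from the fact that electron capture lowers the atomic number by one (43 to 42) while leaving the mass number unchanged.

```latex
p + e^- \rightarrow n + \nu_e
\qquad\Longrightarrow\qquad
{}^{98}_{43}\mathrm{Tc} + e^- \rightarrow {}^{98}_{42}\mathrm{Mo} + \nu_e
```

The emitted electron neutrino carries away energy released in the process.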

The findings contribute to a more comprehensive understanding of technetium decay processes and extend the chart of nuclides, the “nuclear periodic table.” The study was published under the title “Electron-capture decay of ⁹⁸Tc” in the journal Physical Review C.

As early as the 1990s, researchers suspected that technetium-98 could also decay by capturing an electron, but no proof could be found, as the isotope is only available in extremely small quantities. For the current study, the Cologne research team used around three grams of technetium-99, which contains tiny traces of the rare isotope technetium-98 (around 0.06 micrograms).
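To put the quantities quoted above in perspective, the following minimal sketch works out the mass fraction of technetium-98 in the sample and a rough atom count. The two masses come from the text; the Avogadro constant and the molar mass (approximated by the mass number, 98 g/mol) are assumptions added for illustration.

```python
# Figures quoted in the article (both approximate).
SAMPLE_MASS_G = 3.0        # technetium-99 sample mass, in grams
TC98_MASS_G = 0.06e-6      # technetium-98 content, in grams (0.06 micrograms)

# Assumed constants, not taken from the article.
AVOGADRO = 6.02214076e23   # atoms per mole (exact SI value)
TC98_MOLAR_MASS = 98.0     # g/mol, approximated by the mass number

# Share of the sample's mass that is technetium-98.
mass_fraction = TC98_MASS_G / SAMPLE_MASS_G

# Rough number of technetium-98 atoms: moles times atoms per mole.
tc98_atoms = TC98_MASS_G / TC98_MOLAR_MASS * AVOGADRO

print(f"mass fraction of Tc-98: {mass_fraction:.1e}")
print(f"approximate number of Tc-98 atoms: {tc98_atoms:.1e}")
```

Under these assumptions the isotope makes up roughly two parts in a hundred million of the sample by mass, which illustrates why the decay was so hard to observe.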

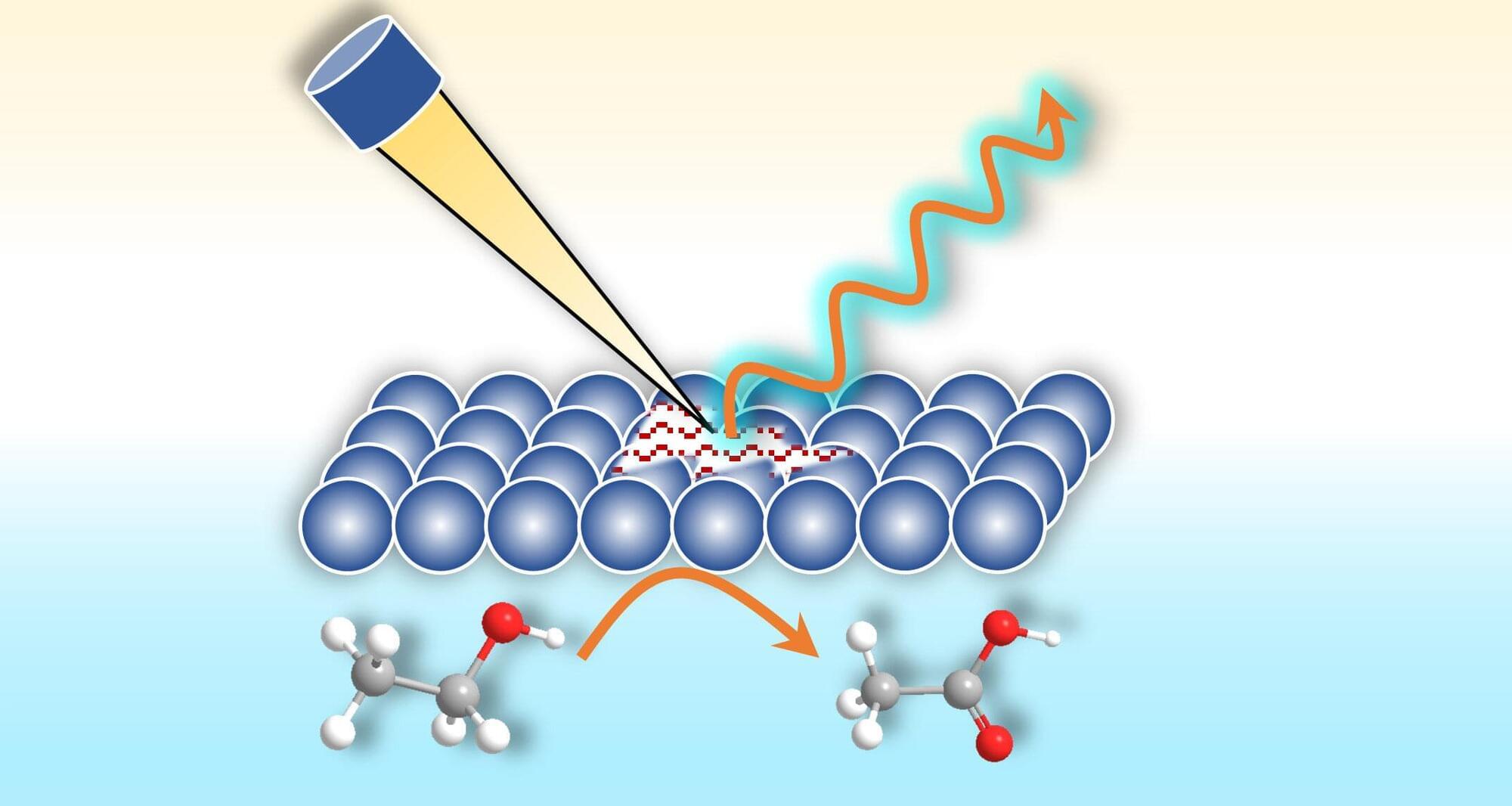

Hybrid water electrolysers are a recent class of devices that produce hydrogen or other reduction products at the cathode, while valuable organic oxidation products are formed at the anode. This innovative approach significantly increases the profitability of hydrogen production.

Another advantage is that organic oxidation reactions (OOR) for producing the valuable compounds are quite environmentally friendly compared with conventional synthesis processes, which often require aggressive reagents. However, organic oxidation reactions are very complex, involving multiple catalyst oxidation states, phase transitions, intermediate products, the formation and breaking of bonds, and varying product selectivity. Research on OOR is still in its infancy.

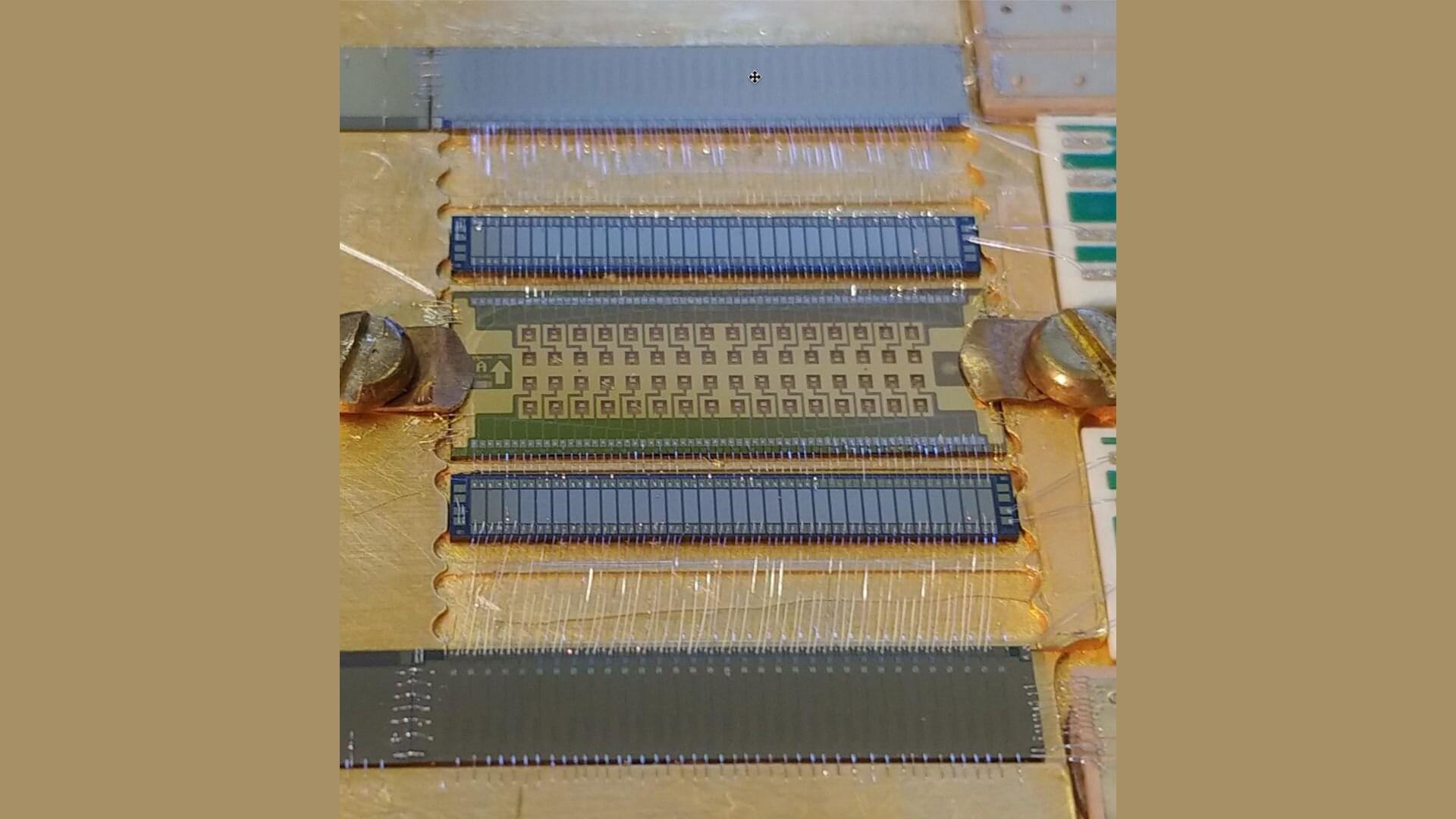

In a Physical Review Letters study, the HOLMES collaboration has achieved the most stringent upper bound on the effective electron neutrino mass ever obtained using a calorimetric approach, setting a limit of less than 27 eV/c² at 90% credibility.

This result validates a decades-old experimental vision and demonstrates the scalability needed for next-generation neutrino mass experiments.

While oscillation experiments have measured the differences between the squared masses of the neutrino mass states, the individual mass values (the absolute neutrino mass scale) remain unknown. Pinning down these values would help complete our understanding of the Standard Model of particle physics.

It’s a well-known fact that quantum calculations are difficult, but one would think that quantum computers would facilitate the process. In most cases, this is true.

Quantum bits, or qubits, use quantum phenomena, like superposition and entanglement, to process many possibilities simultaneously. For certain complex problems, this can allow exponentially faster computing. However, Thomas Schuster, of the California Institute of Technology, and his research team have given quantum computers a problem that even they can’t solve in a reasonable amount of time: recognizing phases of matter of unknown quantum states.

The team’s research can be found in a paper published on the arXiv preprint server.

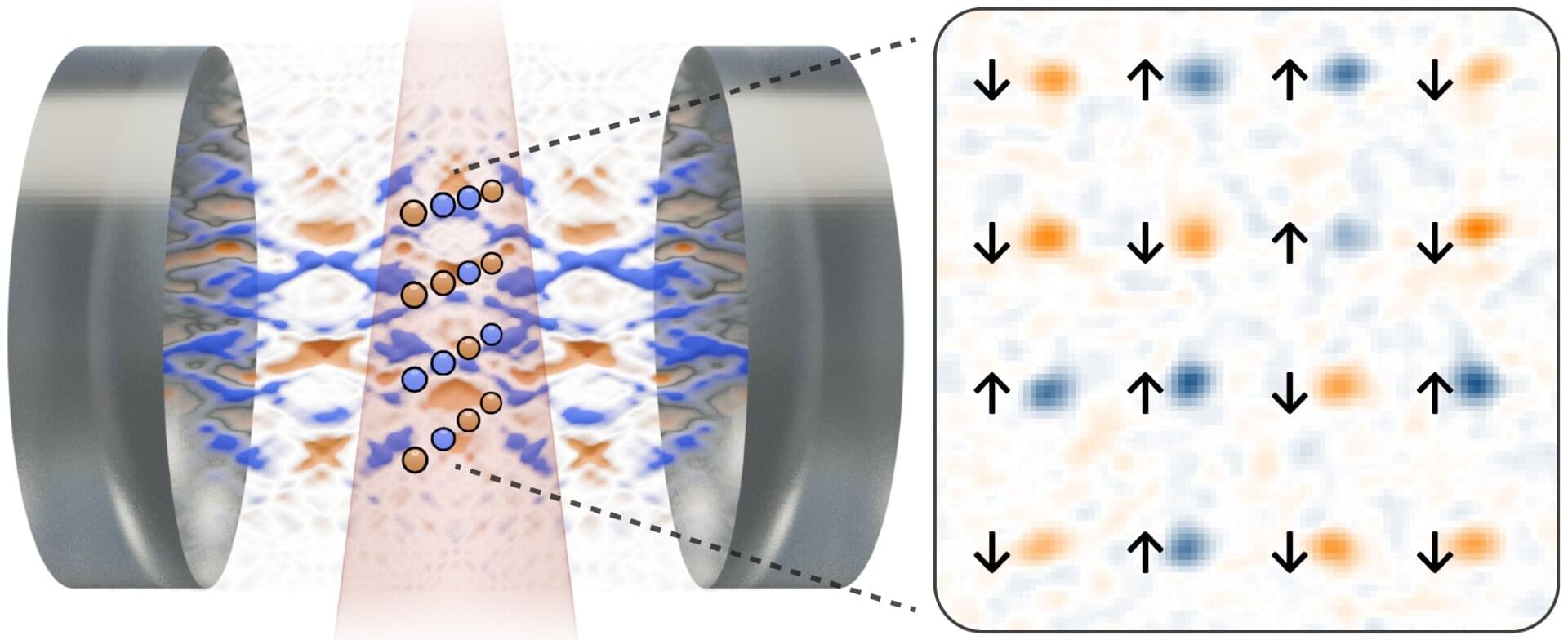

Spin glasses are physical systems in which the small magnetic moments of particles (i.e., spins) interact with each other in a random way. These random interactions between spins make it impossible for all spins to satisfy their preferred alignments, a condition known as “frustration.”
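Frustration can be seen in the smallest possible example. The sketch below is a hypothetical toy model, not the Stanford experiment: three Ising spins on a triangle, with every pair coupled antiferromagnetically (a fixed coupling of J = -1 rather than the random couplings of a real spin glass). It enumerates all eight configurations and checks how many bonds can be satisfied at once.

```python
from itertools import product

# Hypothetical toy model: three spins on a triangle, each pair coupled
# antiferromagnetically (J = -1), so every bond "prefers" anti-aligned spins.
BONDS = {(0, 1): -1, (1, 2): -1, (0, 2): -1}


def satisfied_bonds(spins):
    """Count bonds whose preferred alignment is met (J * s_i * s_j > 0)."""
    return sum(1 for (i, j), J in BONDS.items() if J * spins[i] * spins[j] > 0)


def energy(spins):
    """Ising energy E = -sum over bonds of J_ij * s_i * s_j."""
    return -sum(J * spins[i] * spins[j] for (i, j), J in BONDS.items())


# Enumerate every assignment of +1/-1 to the three spins.
configs = list(product((-1, 1), repeat=3))
best_satisfied = max(satisfied_bonds(s) for s in configs)
ground_energy = min(energy(s) for s in configs)
ground_states = [s for s in configs if energy(s) == ground_energy]

print(f"at most {best_satisfied} of {len(BONDS)} bonds can be satisfied")
print(f"ground-state energy {ground_energy}, shared by {len(ground_states)} configurations")
```

No configuration satisfies all three bonds, and several configurations tie for the lowest energy. Frustration plus many competing low-energy states is the behavior that becomes far richer when the couplings are random and the number of spins is large.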

Researchers at Stanford University recently realized a new type of spin glass, namely a driven-dissipative Ising spin glass in a cavity quantum electrodynamics (QED) experimental setup. Their paper, published in Physical Review Letters, is the result of over a decade of studies focusing on creating spin glasses with cavity QED.

“Spin glasses are a general model for complex systems, and specifically for neural networks—spins serve as neurons connected by their mutually frustrating interactions,” Benjamin Lev, senior author of the paper, told Phys.org.

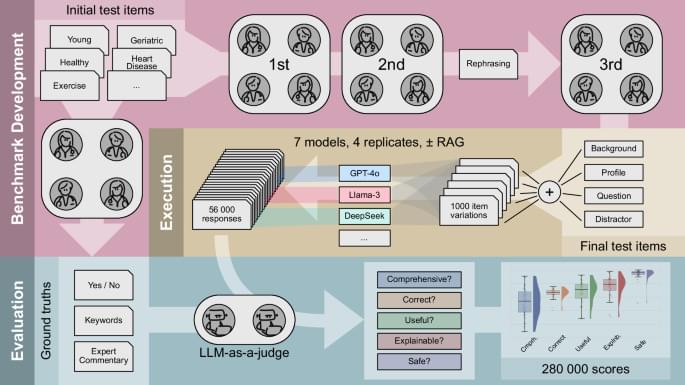

The use of large language models (LLMs) in clinical diagnostics and intervention planning is expanding, yet their utility for personalized recommendations for longevity interventions remains opaque. We extended the BioChatter framework to benchmark LLMs’ ability to generate personalized longevity intervention recommendations based on biomarker profiles while adhering to key medical validation requirements. Using 25 individual profiles across three different age groups, we generated 1,000 diverse test cases covering interventions such as caloric restriction, fasting and supplements. Evaluating 56,000 model responses via an LLM-as-a-Judge system with clinician-validated ground truths, we found that proprietary models outperformed open-source models, especially in comprehensiveness. However, even with Retrieval-Augmented Generation (RAG), all models exhibited limitations in addressing key medical validation requirements, prompt stability, and handling age-related biases. Our findings highlight the limited suitability of LLMs for unsupervised longevity intervention recommendations. Our open-source framework offers a foundation for advancing AI benchmarking in various medical contexts.

Yet a deeper look — one that doesn’t reject science but rather transcends it — reveals a more radical reality: we, living beings, are not the origin of consciousness, but rather its antenna.

We are hardware. Bodies shaped by millions of years of biological evolution, a complex architecture of atoms and molecules organized into a fractal of systems. But this hardware, no matter how sophisticated, is nothing more than a receptacle, a stage, an antenna. What truly moves, creates, and inspires does not reside here, within this tangible three-dimensional realm; it resides in an unlimited field, a divine matrix where everything already exists. Our mind, far from being an original creator, is a channel, a receiver, an interpreter.

The great question of our time — and perhaps of all human history — is this: how can we update the software running on this biological hardware without the hardware itself becoming obsolete? Herein lies the fundamental paradox: we can dream of enlightenment, wisdom, and transcendence, yet if the body does not keep pace — if the physical circuits cannot support the flow — the connection breaks, the signal distorts, and the promise of spiritual evolution stalls.

The human body, a product of Darwinian evolution’s slow dance, is both marvel and prison. Our eyes capture only a minuscule fraction of the electromagnetic spectrum; our ears are limited to a narrow range of frequencies; our brains filter out and discard 99% of the information surrounding us. Human hardware was optimized for survival — not for truth!

This is the first major limitation: if we are receivers of a greater reality, our apparatus is radically constrained. It’s like trying to capture a cosmic symphony with an old radio that only picks up static. We may glimpse flashes — a sudden intuition, an epiphany, a mystical experience — but the signal is almost always imperfect.

Thus, every spiritual tradition in human history — from shamans to mystery schools, from Buddhism to Christian mysticism — has sought ways to expand or “hack” this hardware: fasting, meditation, chanting, ecstatic dance, entheogens. These are, in fact, attempts to temporarily reconfigure the biological antenna to tune into higher frequencies. Yet we remain limited: the body deteriorates, falls ill, ages, and dies.

If the body is hardware, then the mind — or rather, the set of informational patterns running through it — is software: human software (and a limited one at that). This software isn’t born with us; it’s installed through culture, language, education, and experience. We grow up running inherited programs, archaic operating systems that dictate beliefs, prejudices, and identities.

Beneath this cultural software, however, lies a deeper code: access to an unlimited field of possibilities. This field — call it God, Source, Cosmic Consciousness, the Akashic Records, it doesn’t matter — contains everything: all ideas, all equations, all music, all works of art, all solutions to problems not yet conceived. We don’t invent anything; we merely download it.

Great geniuses throughout history — from Nikola Tesla to Mozart, from Leonardo da Vinci to Fernando Pessoa — have testified to this mystery: ideas “came” from outside, as if whispered by an external intelligence. Human software, then, is the interface between biological hardware and this divine ocean. But here lies the crucial question: what good is access to supreme software if the hardware lacks the capacity to run it?

An old computer might receive the latest operating system, but only if its minimum specifications allow it. Otherwise, it crashes, overheats, or freezes. The same happens to us: we may aspire to elevated states of consciousness, but without a prepared body, the system fails. That’s why so many mystical experiences lead to madness or physical collapse.

Thus, we arrive at the heart of the paradox. If the hardware doesn’t evolve, even the most advanced software download is useless. But if the software isn’t updated, the hardware remains a purposeless machine — a biological robot succumbing to entropy.

Contemporary society reflects this tension. On one hand, biotechnology, nanotechnology, and regenerative medicine promise to expand our hardware: stronger, more resilient, longer-lived bodies. On the other, the cultural software governing us remains archaic: nationalism, tribalism, dogma, consumerism. It’s like installing a spacecraft engine onto an ox-drawn cart.

At the opposite end of the spectrum, we find the spiritual movement, which insists on updating the software — through meditation, energy therapies, expanded states of consciousness — but often neglects the hardware. Weakened, neglected bodies, fed with toxins, become incapable of sustaining the frequency they aim to channel. The result is a fragile, disembodied spirituality — out of sync with matter.

Humanity’s challenge in the 21st century and beyond is not to choose between hardware and software, but to unify them. Living longer is meaningless if the mind remains trapped in limiting programs. Aspiring to enlightenment is futile if the body collapses under the intensity of that light.

It’s essential to emphasize: the power does not reside in us (though, truthfully, it does — if we so choose). This isn’t a doctrine of self-deification, but of radical humility. We are merely antennas. True power lies beyond the physical reality we know, in a plane where everything already exists — a divine, unlimited power from which Life itself emerges.

Our role is simple yet grand: to invoke. We don’t create from nothing; we reveal what already is. We don’t invent; we translate. A work of art, a mathematical formula, an act of compassion — all are downloads from a greater source.

Herein lies the beauty: this field is democratic. It belongs to no religion, no elite, no dogma. It’s available to everyone, always, at any moment. The only difference lies in the hardware’s capacity to receive it and the (human) software that interprets it.

But there are dangers. If the hardware is weak or the software corrupted, the divine signal arrives distorted. This is what we see in false prophets, tyrants, and fanatics: they receive fragments of the field, but their mental filters — laden with fear, ego, and the desire for power — twist the message. Instead of compassion, violence emerges; instead of unity, division; instead of wisdom, dogma.

Therefore, conscious evolution demands both purification of the software (clearing toxic beliefs and hate-based programming) and strengthening of the hardware (healthy bodies, resilient nervous systems). Only then can the divine frequency manifest clearly.

If we embrace this vision, humanity’s future will be neither purely biological nor purely spiritual — it will be the fusion of both. The humans of the future won’t merely be smarter or longer-lived; they’ll be more attuned. A Homo Invocator: the one who consciously invokes the divine field and translates it into matter, culture, science, and art.

The initial paradox remains: hardware without software is useless; software without hardware is impossible. But the resolution isn’t in choosing one over the other — it’s in integration. The future belongs to those who understand that we are antennas of a greater power, receivers of an infinite Source, and who accept the task of refining both body and mind to become pure channels of that reality.

If we succeed, perhaps one day we’ll look back and realize that humanity’s destiny was never to conquer Earth or colonize Mars — but to become a conscious vehicle for the divine within the physical world.

And on that day, we’ll understand that we are neither merely hardware nor merely software. We are the bridge.

Deep down, aren’t we just drifting objects after all?

The question is rhetorical, for I don’t believe any of us humans holds the answer.

__

Copyright © 2025, Henrique Jorge (ETER9)

Image by Gerd Altmann from Pixabay

[This article was originally published in Portuguese in Link to Leaders at: https://linktoleaders.com/o-ser-como-interface-henrique-jorge-eter9/]

In the race to make solar energy cheaper and more efficient, a team of UNSW Sydney scientists and engineers has found a way to push past one of the biggest limits in renewable technology.

Singlet fission is a process where a single particle of light—a photon—can be split into two packets of energy, effectively doubling the electrical output when applied to technologies harnessing the sun.

In a study appearing in ACS Energy Letters, the UNSW team—known as “Omega Silicon”—showed how this works on an organic material that could one day be mass-produced specifically for use with solar panels.
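The singlet fission process described above is often summarized in standard photophysics notation, shown here as a sketch. The symbols are the usual textbook ones and do not come from the article: S₁ is the excited singlet state created when a photon is absorbed, S₀ is a neighboring molecule in its ground state, and T₁ is a triplet excited state.

```latex
S_1 + S_0 \rightarrow T_1 + T_1, \qquad E(S_1) \gtrsim 2\,E(T_1)
```

The energy condition on the right expresses why one absorbed photon can yield two lower-energy excitations: the singlet must carry at least about twice the energy of each triplet.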