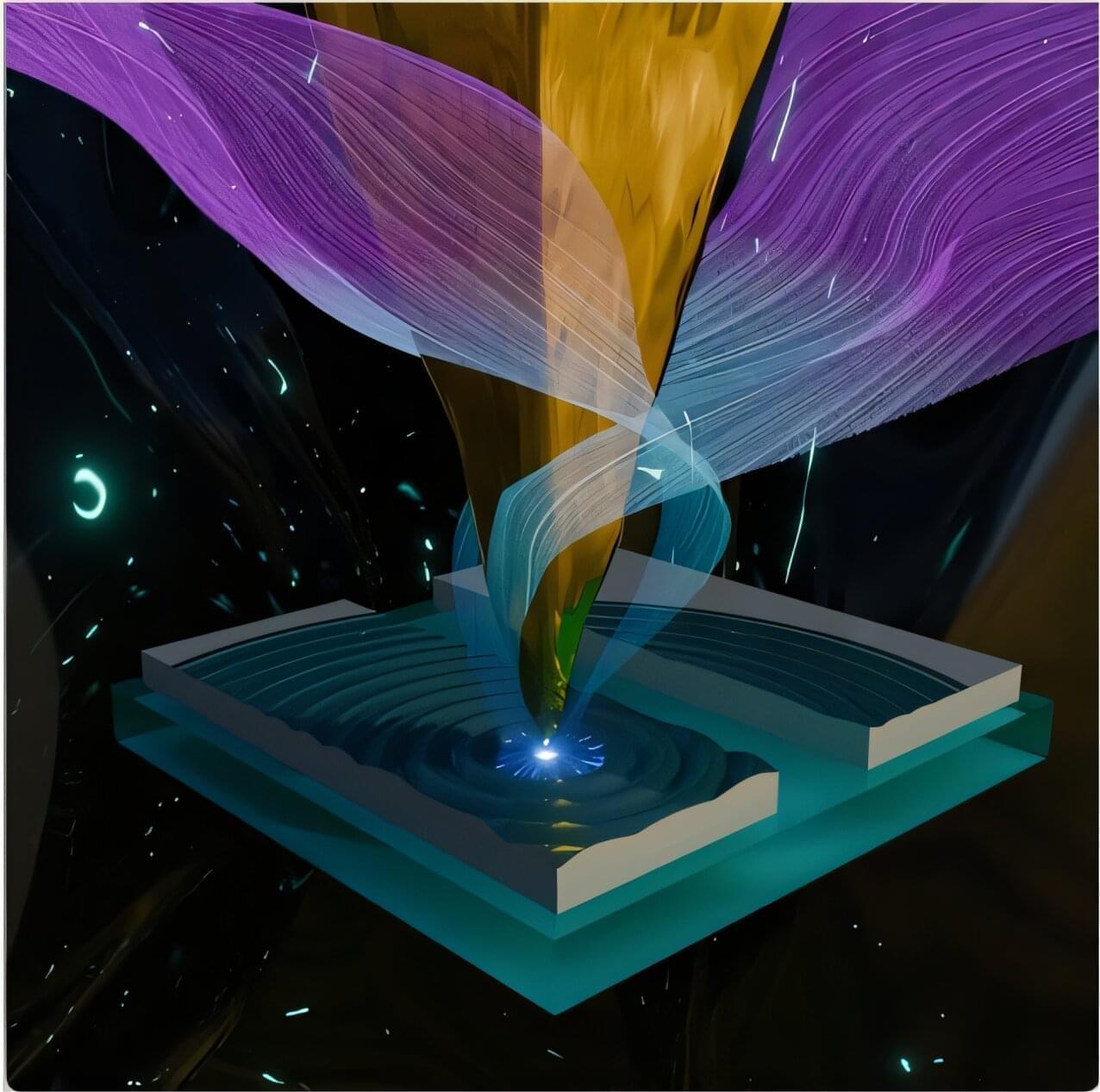

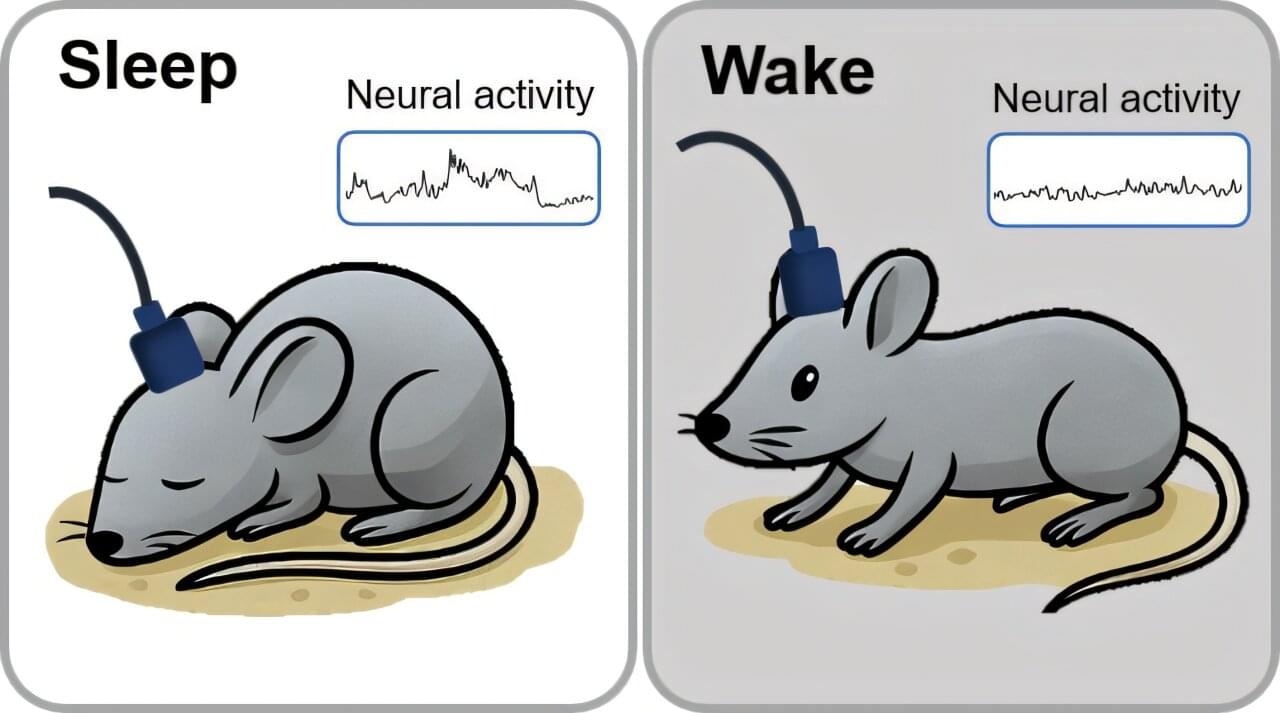

Researchers have developed a material that can sense tiny changes within the body, such as those that occur during an arthritis flare-up, and release drugs exactly where and when they are needed.

The squishy material can be loaded with anti-inflammatory drugs, which are released in response to small changes in the body's pH. During an arthritis flare-up, a joint becomes inflamed and slightly more acidic than the surrounding tissue.

The material, developed by researchers at the University of Cambridge, has been designed to respond to this natural change in pH. As acidity increases, the material becomes softer and more jelly-like, triggering the release of drug molecules that can be encapsulated within its structure. Since the material is designed to respond only within a narrow pH range, the team says that drugs could be released precisely where and when they are needed, potentially reducing side effects.